Transcripted Summary

In a mobile-first world, it's really important to test our applications on the many devices that we support. However, automating cross-platform functional tests is such a pain.

You have to either write separate tests for each of the various configurations or account for these variations within your test code by using conditional statements.

Not only is it a pain to write these automated tests, it's also a pain to run them.

Managing your own device lab is no easy feat.

Fortunately, there are cloud providers to help manage this, but many companies are starting to wonder if cross-platform testing is even worth it. That's because most cross-platform bugs that are found are not functional bugs. In this day and age, browsers have gotten to a point where it's rare to find a feature that works on, say, Chrome but not Safari.

Today, most cross-platform bugs lie in how an app responds to changes in viewport size.

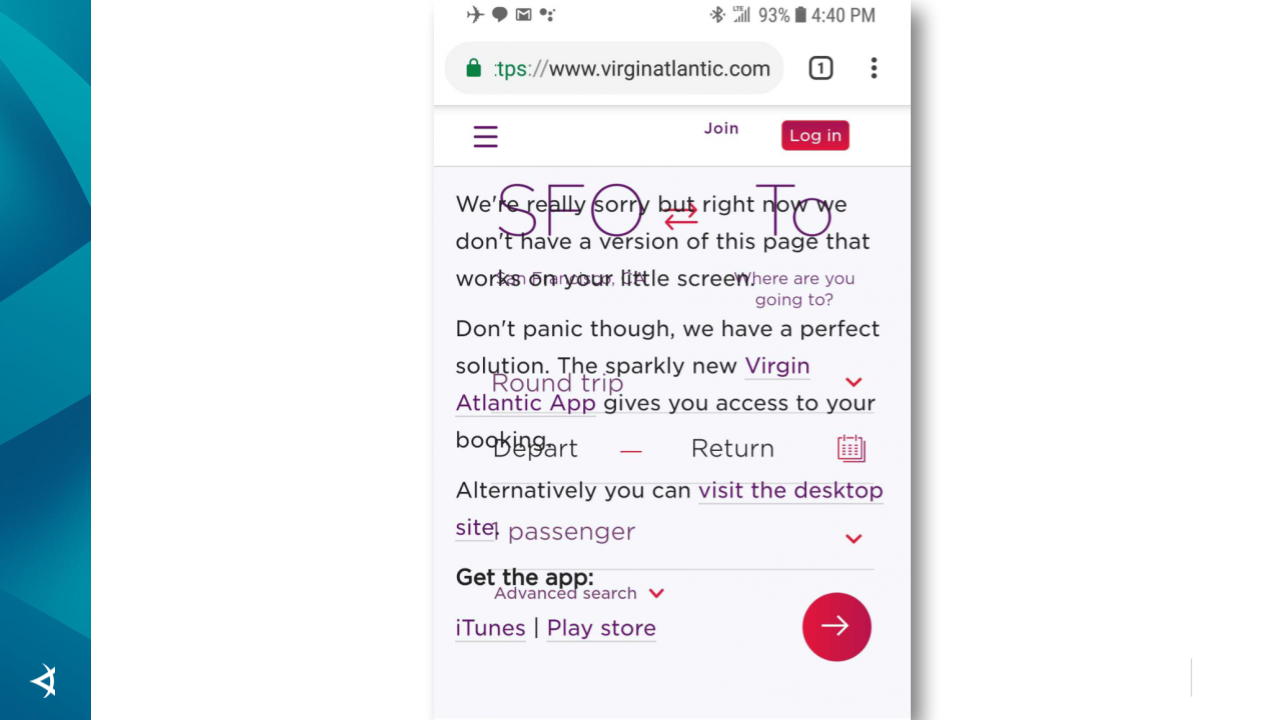

Here's a browser view of an airline's website on a mobile device.

Imagine the annoyance of trying to check on a flight while heading to the airport and receiving this.

Here's an app asking a user to activate their account.

We all know how annoying it is to have extra steps when signing up for an account, especially in a mobile view, but it's many times more annoying when there are also bugs like this one, where you can't even see what you're typing.

The interesting thing about these examples is that our functional tests would never catch such errors.

In the airline example, our test code would verify that the expected text exists. Well, yeah, the text does exist, but so does other text, and it's all overlapping, making it unreadable for the user. Yet this test would pass.

In the account activation example, the functional test would make sure the user can type in the activation code. Well, sure, yeah, they can, but there's clearly an issue here which would lead to signup abandonment. Yet, this test would pass.

Again, these are not functional bugs; these are visual bugs.

So even if your test automation runs cross-platform, you'll still miss these types of bugs if you don't incorporate visual validation.

Visual validation certainly solves the problem of catching the real cross-platform bugs and providing a return on your automation investment. However, is this alone the most efficient approach?

If the bugs that we're finding are visual ones, does it really make sense to automate all of these conditional cross-platform routes, and then execute functional steps across all of these viewports? Probably not.

UI tests tend to be fragile by nature. Running these types of tests multiple times, across various browsers and viewports, increases the chance of instability. For example, let's say that, due to infrastructure issues, your test fails 2% of the time on average. Running that test on 10 more configurations makes it roughly ten times as likely that at least one of the runs fails for reasons that have nothing to do with your app.
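To see where the "roughly ten times" comes from, treat each run as an independent trial that fails spuriously with probability p. The chance that at least one of n runs flakes is then 1 - (1 - p)^n. The minimal Java sketch below computes this; the class and method names are illustrative, and real infrastructure failures are rarely fully independent, so treat it as a back-of-the-envelope estimate.

```java
// Back-of-the-envelope flakiness estimate.
// Assumes every run flakes independently with the same probability p,
// which is a simplification of real infrastructure failures.
class FlakinessEstimate {

    /** Returns 1 - (1 - p)^runs: the chance that at least one of the runs flakes. */
    static double chanceOfAnyFlake(double p, int runs) {
        if (p < 0.0 || p > 1.0 || runs < 0) {
            throw new IllegalArgumentException("p must be in [0, 1] and runs must be >= 0");
        }
        return 1.0 - Math.pow(1.0 - p, runs);
    }
}
```

With p = 0.02, `chanceOfAnyFlake(0.02, 1)` is 0.02, while `chanceOfAnyFlake(0.02, 11)` (the original configuration plus 10 more) is about 0.199, just under ten times the single-run figure.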

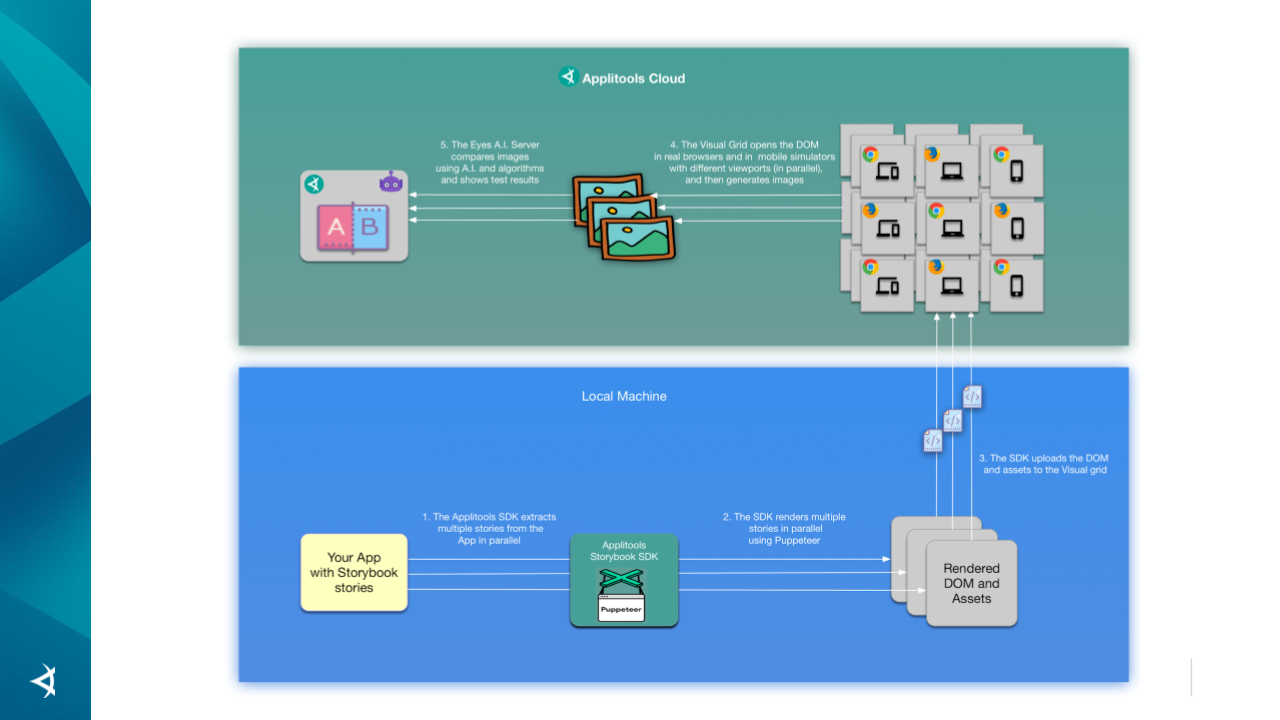

Fortunately, there's a new, more efficient way to do this via the Applitools Visual Grid.

The Visual Grid runs visual checks across all of the configurations that you'd like.

Run your test for one configuration, specify all of your other configurations, then insert your visual checks at the points where you'd like cross-platform checks executed. The Visual Grid will then extract the DOM, CSS, and all other pertinent assets, and render them across all of the configurations specified.

The visual checks are all done in parallel, making this super-fast.

Instead of running all of the test steps across every configuration, you'd write and execute this test for one configuration and specify an array of configurations that you'd like Applitools to run against.

Within your scenario, you'd make calls to Applitools at any point where you need something visually validated.

Let's say step 8 of your test is where you'd like to do a visual assertion. With the Visual Grid, there's no need to execute those 8 steps on all configurations. Instead, the Visual Grid executes the steps on one setup, captures the current state of the app, and then renders that state across all of the other specified configurations. This essentially shows what the app looks like at this point and validates that everything is displayed the way it's intended for every configuration specified.
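The saving can be counted directly. In a conventional cross-platform run, every configuration replays every functional step; in the approach just described, the steps run once and only the captured state is rendered per configuration. The Java sketch below is a hypothetical tally (the names are illustrative, not Applitools APIs) of how many steps a real browser has to drive under each approach.

```java
// Hypothetical tally of functional steps a real browser must drive.
// This is not Applitools code; it only counts work under the two approaches.
class StepCount {

    /** Conventional cross-platform run: every configuration replays every step. */
    static int conventional(int stepsBeforeCheck, int configurations) {
        return stepsBeforeCheck * configurations;
    }

    /** Single capture: the steps run once; configurations only affect rendering. */
    static int singleCapture(int stepsBeforeCheck) {
        return stepsBeforeCheck;
    }
}
```

With the 8 steps from the example and, say, 8 configurations, `conventional(8, 8)` is 64 step executions, whereas `singleCapture(8)` is 8, after which the 8 renders happen in the cloud. Fewer driven steps also means fewer chances for the kind of flakiness described earlier.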

And because we are able to skip the irrelevant functional steps that are only executed to get the app in a given state, the parallel tests run in seconds, rather than in several minutes or even hours, making it perfect for continuous integration or continuous deployment where fast feedback is key.

Let's update our existing framework to show how to leverage the Visual Grid.

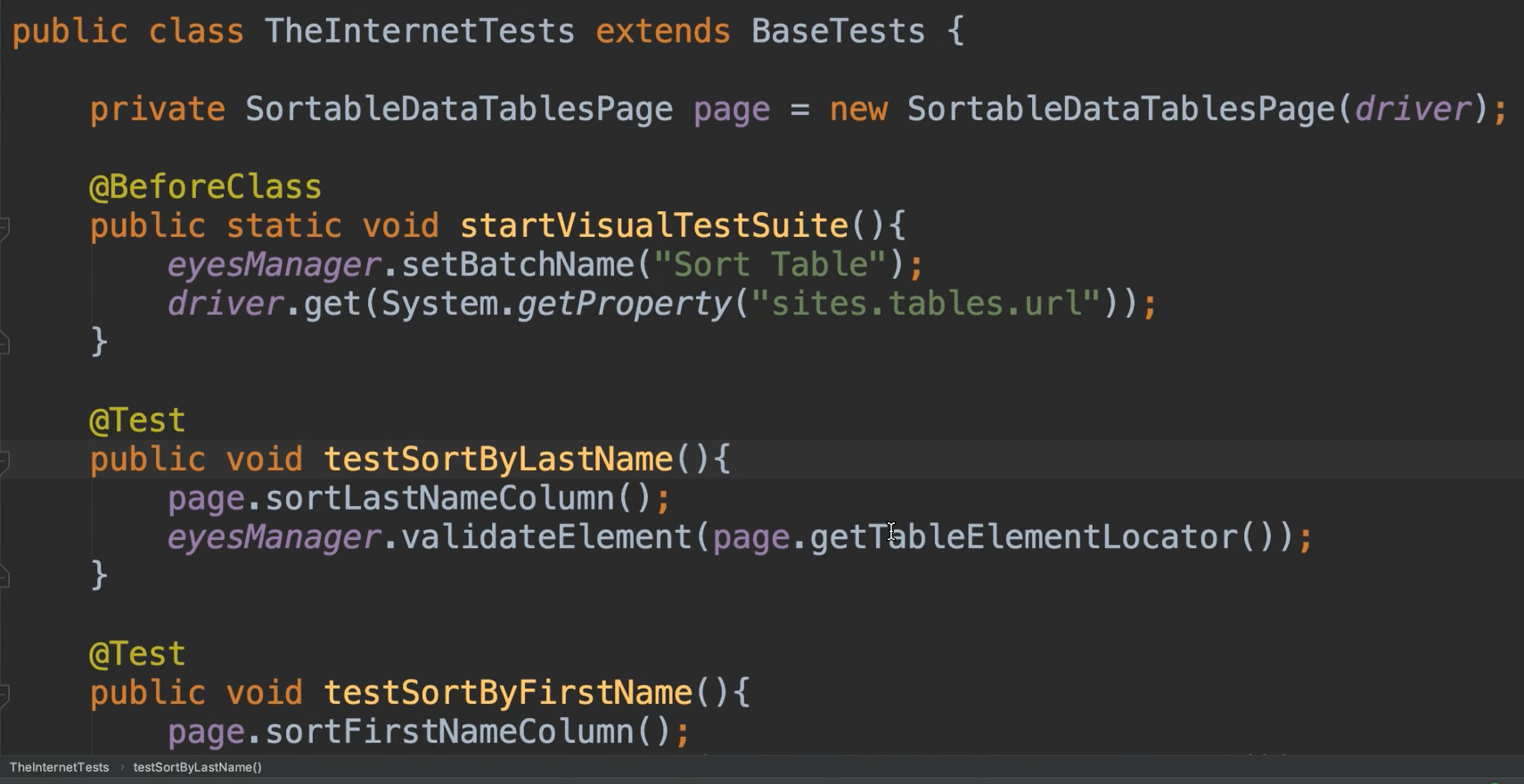

Okay, so we have 5 tests in this class that are looking at the table that we talked about in the last chapter.

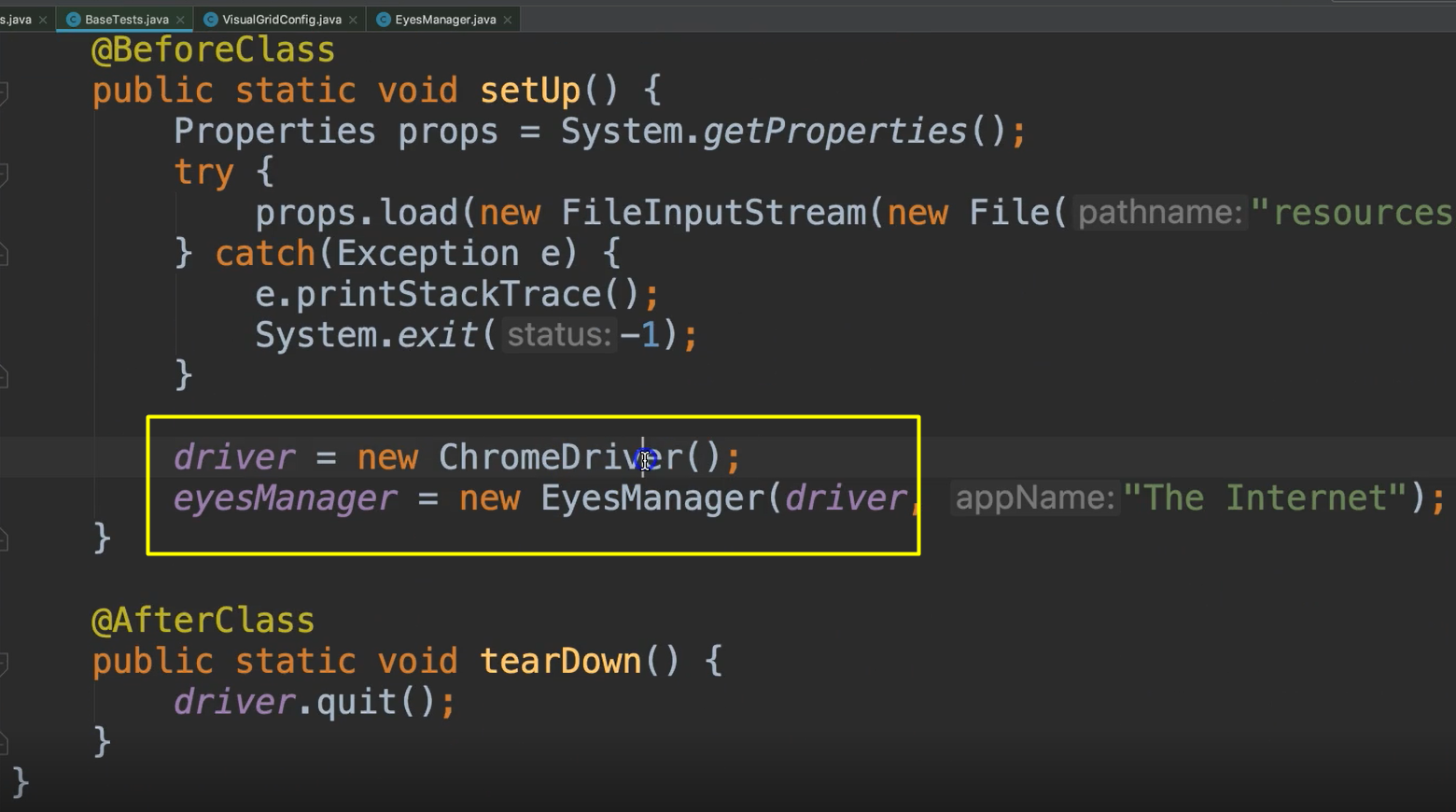

This is verifying the table after each of the sorts. If we were to run this, we would have 5 tests here, and they would all run on Chrome, because that's what we specify in our BaseTests.

Now I want to use the Visual Grid to enhance this to run across multiple environments.

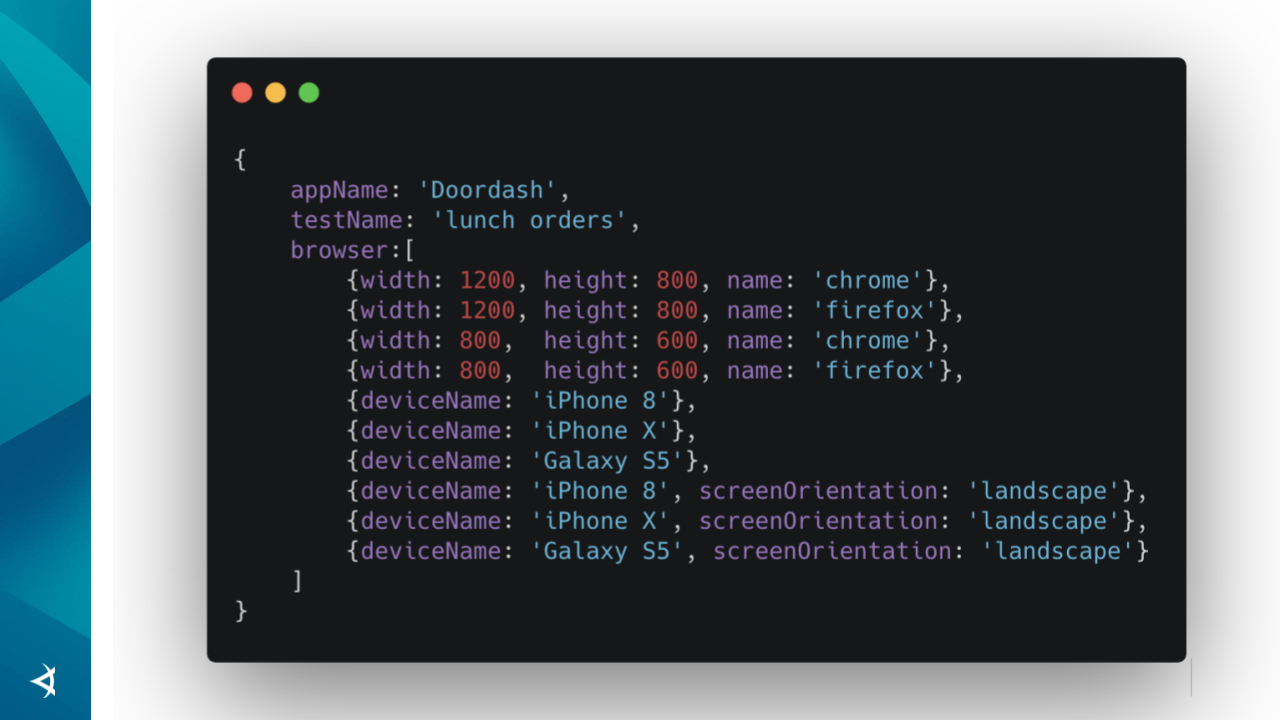

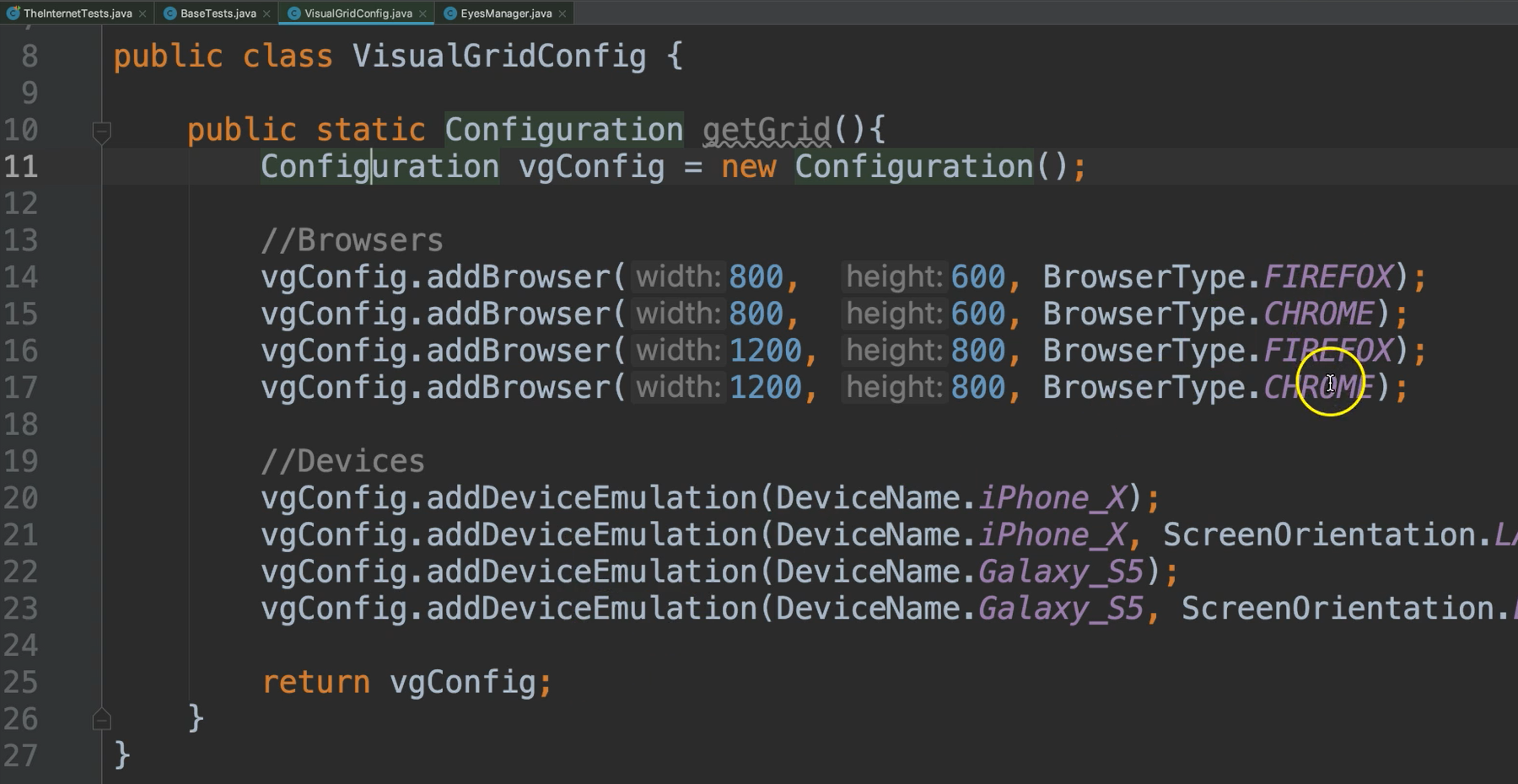

I created a new class here called the VisualGridConfig.

On line 11, I set up this Configuration object, which is part of Applitools Eyes.

And with this Configuration object, I specify the browsers and devices that I would like to run against.

So I have now specified that I want to run against Firefox and Chrome at various widths and heights.

I also want to run against the iPhone and Galaxy, in both landscape and portrait modes.
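The exact widths and heights are only visible in the screenshot, so here is a hypothetical plain-Java model of what such a configuration describes: a handful of desktop browser entries plus iPhone and Galaxy emulations in both orientations. Assuming the remaining four of the 8 configurations are desktop entries, that gives 8 targets, which is consistent with the 40 results (5 tests × 8 configurations) that show up on the dashboard later. The viewport sizes and the `RenderTargets` class are placeholders, not the values from the screenshot and not the Applitools `Configuration` API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a Visual Grid configuration: a plain list of render targets.
// Sizes are placeholders; the real VisualGridConfig uses the Applitools Configuration object.
class RenderTargets {

    enum Orientation { PORTRAIT, LANDSCAPE }

    static List<String> grid() {
        List<String> targets = new ArrayList<>();

        // Desktop browsers at assorted viewport sizes (placeholder values).
        targets.add("Chrome 800x600");
        targets.add("Chrome 1024x768");
        targets.add("Firefox 700x500");
        targets.add("Firefox 1600x1200");

        // Emulated mobile devices, each in both orientations.
        for (String device : List.of("iPhone", "Galaxy")) {
            for (Orientation orientation : Orientation.values()) {
                targets.add(device + " " + orientation);
            }
        }
        return targets;
    }

    /** Each visual check is rendered once per target, so results = tests × targets. */
    static int expectedResults(int tests) {
        return tests * grid().size();
    }
}
```

Keeping the targets in one list is what lets the test code stay unchanged: adding a device or a viewport is a one-line change in the configuration class, and every visual check picks it up.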

Now we want to send this configuration over to Applitools, to let them know that we want to use the Visual Grid.

Again, this is why we suggested things like having a wrapper class, so that I wouldn't have to go to each one of these tests and update them in order to use the Visual Grid.

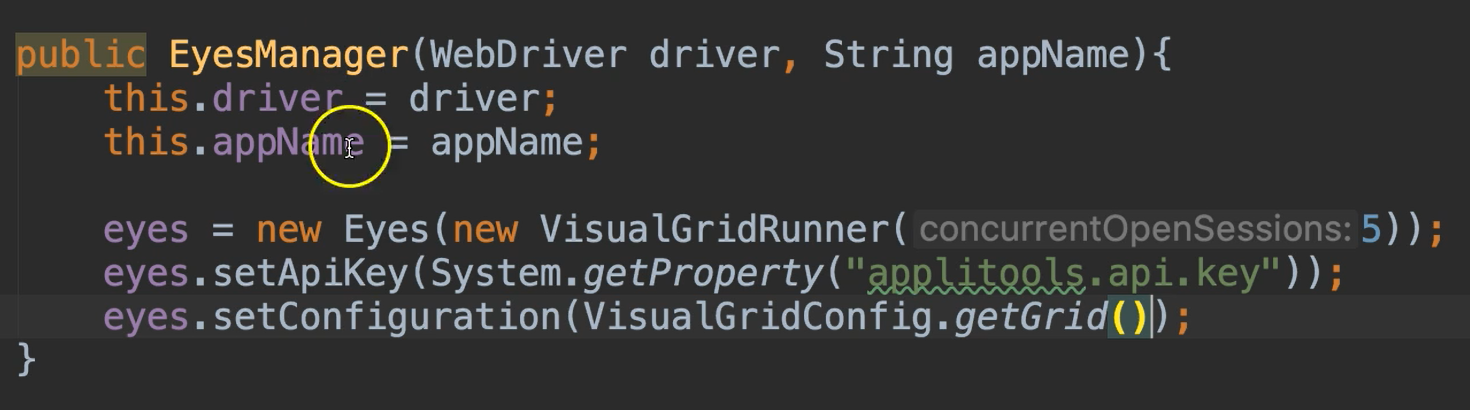

To use the Visual Grid, instead of calling the default constructor like we've done before, we pass a VisualGridRunner into the Eyes constructor.

We'll just say:

```java
eyes = new Eyes(new VisualGridRunner(5));
```

The number passed in (5) is the number of concurrent executions that you'd like to occur, and this of course is tied to the number allowed by your plan. So, if your plan allows 5 concurrent runs, you wouldn't put anything more than 5 there.

Let's go ahead and put 5, which will allow up to 5 of them to run in parallel.
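A concurrency number like this works as a ceiling on simultaneous work. As an analogy only (this is plain `java.util.concurrent`, not how `VisualGridRunner` is implemented internally), a fixed-size thread pool of 5 never lets more than 5 of 40 submitted jobs run at the same time. The `ConcurrencyCap` class and the 20 ms sleep standing in for a rendering job are invented for illustration.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Analogy for a concurrency limit: a pool of `limit` threads runs at most `limit` jobs at once.
class ConcurrencyCap {

    /** Runs `jobs` short tasks on a pool of `limit` threads and returns the peak number running at once. */
    static int peakConcurrency(int limit, int jobs) {
        ExecutorService pool = Executors.newFixedThreadPool(limit);
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();

        for (int i = 0; i < jobs; i++) {
            pool.submit(() -> {
                int now = running.incrementAndGet();
                peak.accumulateAndGet(now, Math::max); // remember the highest level observed
                try {
                    Thread.sleep(20); // stand-in for one rendering job
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                }
            });
        }

        pool.shutdown(); // accept no new jobs, let queued ones finish
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return peak.get();
    }
}
```

Calling `peakConcurrency(5, 40)` queues all 40 jobs immediately, but the observed peak never exceeds 5; the remaining jobs simply wait their turn, which is why setting the number higher than your plan allows buys nothing.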

Okay, now once we've done that, we've set our API key, and then we're going to set a configuration.

We'll call `eyes.setConfiguration()` and pass in the configuration object that was defined in the VisualGridConfig class.

Let's go ahead and just make a call to it: `VisualGridConfig.getGrid()`.

Keeping Code Clean

Now, I could have done all of this configuration stuff inside of the EyesManager wrapper class itself, but I wanted to keep this separate, since it is a good amount of code. So I've just separated that out into its own class VisualGridConfig.
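The payoff of the wrapper plus a separate configuration class is that the choice of runner lives in exactly one place. The sketch below shows the shape of that idea with hypothetical stand-in types: `VisualChecker`, `LocalChecker`, `GridChecker`, and `CheckerFactory` are invented for illustration, whereas the real EyesManager wraps the Applitools `Eyes` object. Tests only ever talk to the wrapper, so switching to a grid-backed checker doesn't touch a single test.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical illustration of the wrapper idea; none of these types are Applitools classes.
interface VisualChecker {
    /** Returns one result label per environment the check was rendered in. */
    List<String> check(String tag);
}

// Checks only in the browser the test is driving.
class LocalChecker implements VisualChecker {
    public List<String> check(String tag) {
        return List.of(tag + " @ Chrome");
    }
}

// Renders the captured state in every configured environment.
class GridChecker implements VisualChecker {
    private final List<String> environments;

    GridChecker(List<String> environments) {
        this.environments = List.copyOf(environments);
    }

    public List<String> check(String tag) {
        return environments.stream()
                .map(env -> tag + " @ " + env)
                .collect(Collectors.toList());
    }
}

// The single place that decides which checker the tests receive, like the EyesManager wrapper.
class CheckerFactory {
    static VisualChecker create(boolean useGrid, List<String> environments) {
        return useGrid ? new GridChecker(environments) : new LocalChecker();
    }
}
```

The environment list plays the role of VisualGridConfig: it is built in its own class and handed to the wrapper, so neither the tests nor the wrapper need to know how many configurations there are.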

Okay, so I'm going to go ahead and run TheInternetTests again.

Before, I only had 5 tests running on Chrome, and now I'm running them with the grid. Notice that the browser still only opens on Chrome.

But behind the scenes, it's capturing the DOM and all of its resources, sending them out to the Applitools cloud, and then rendering them in parallel across all of the configurations that we've specified.

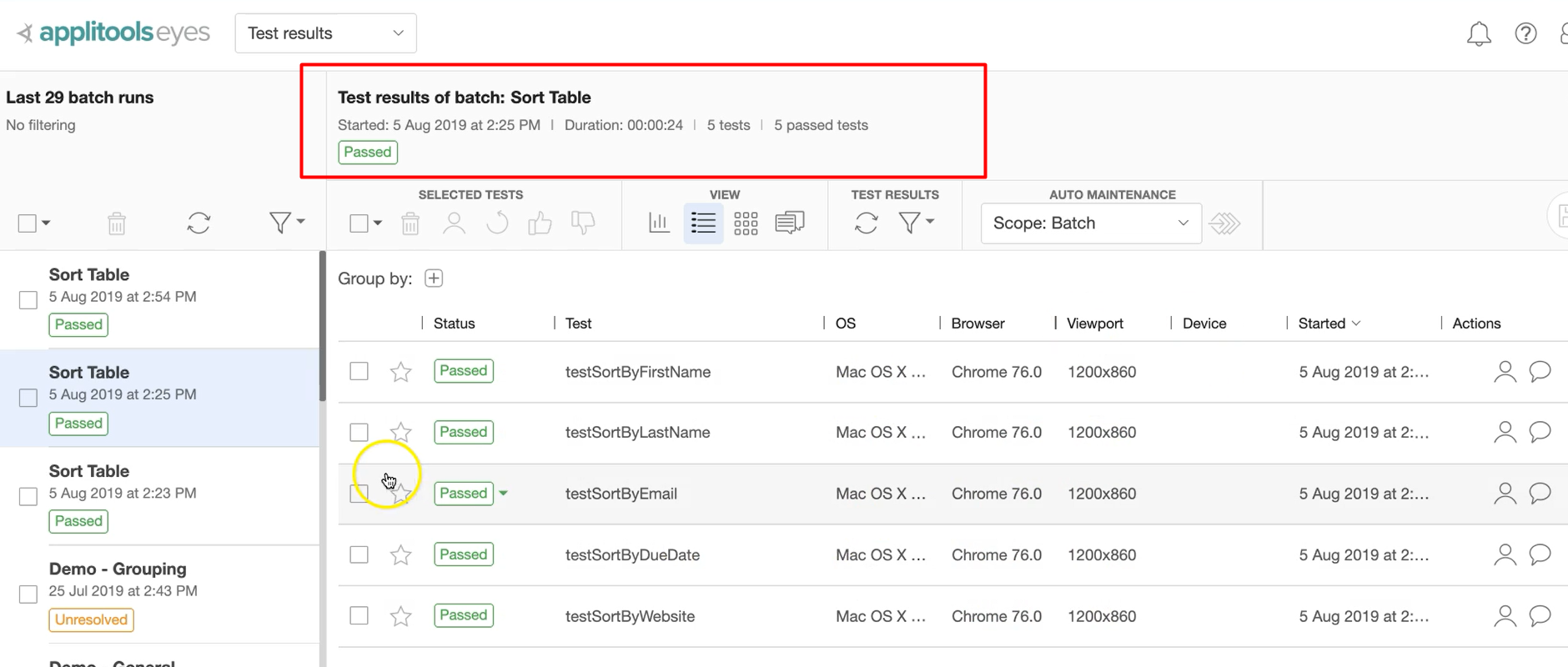

Okay, so we see that each of the tests has passed.

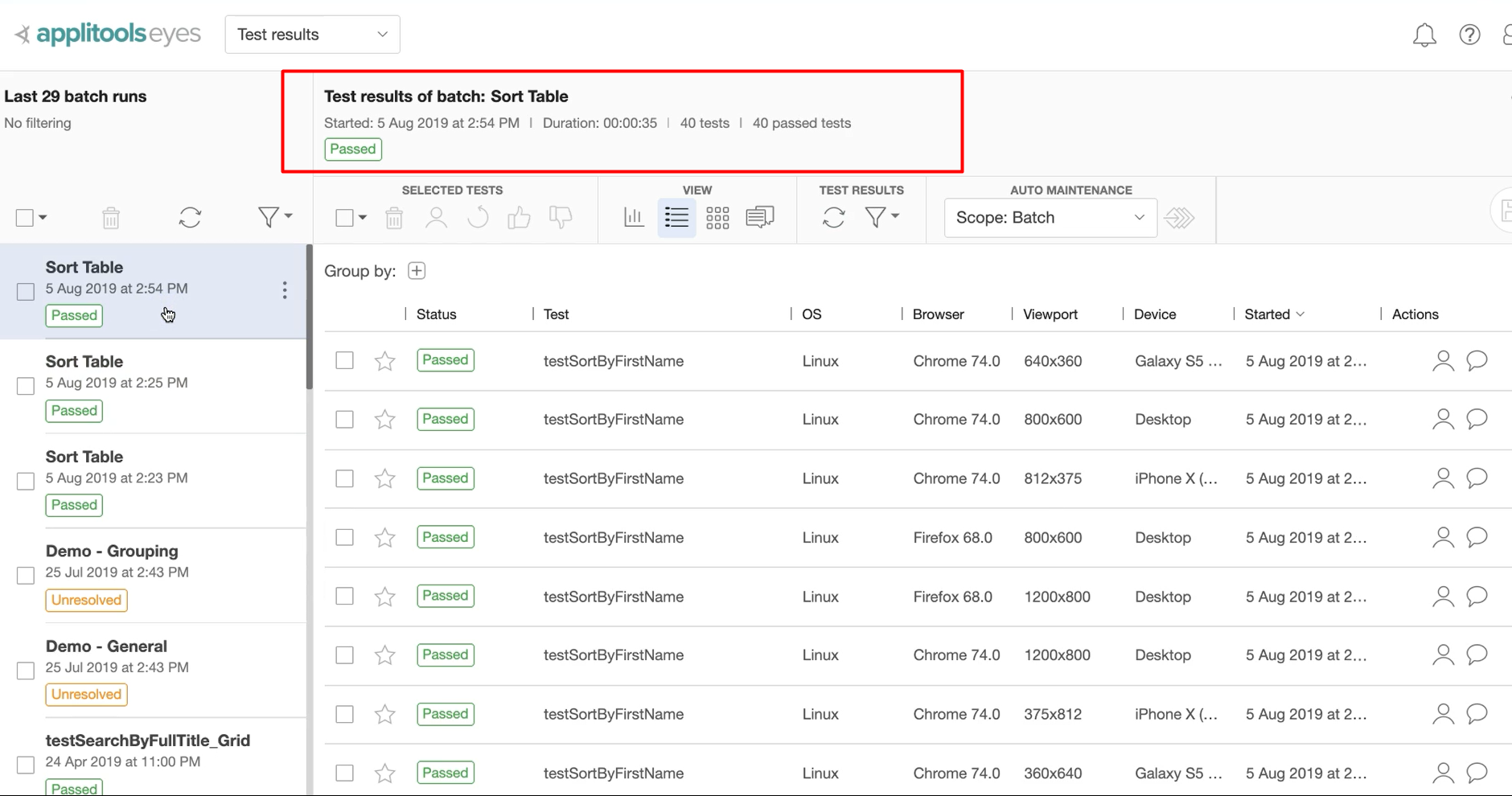

Let's go to the Applitools dashboard to look at these tests.

On our first run, we only had the 5 tests, and they were all run against Chrome in the same viewport.

With the Visual Grid, 40 tests have now been run, each on one of the configurations we specified: the Galaxy and iPhone emulations, desktop Chrome, desktop Firefox, and so on.

We see that we have a bunch of tests here, and we didn't have to download any additional devices or browsers. Everything is happening in the cloud, and it's happening pretty fast. So, this is a pretty cool and innovative way to do cross-platform testing.

If we look at the first batch, it took 24 seconds to run 5 tests on just Chrome, whereas running those same 5 tests across 8 different platforms took only 35 seconds. So, with just 11 seconds more, we've dramatically increased our coverage.

Are there any drawbacks to this?

Again, these are visual checks, not functional ones. So if your app's functionality varies across different configurations, then it's still wise to actually execute that functionality across those configurations.

Also, the Visual Grid runs across emulators, not real devices. So this makes sense when you're interested in testing how something looks on a given viewport. However, if you're testing something that is specific to the device itself, such as native gestures, pinching, swiping, et cetera, then an actual device would be more suitable.

With that being said, the Visual Grid runs on the exact same browsers that are used on the real devices, so your tests will be executed with the correct size, user agent, and pixel density.