Transcript

What is Docker?

Before we can get down to running tests and looking at code, we need to talk about Docker and the buzzword - containers. So what is Docker?

Docker is a platform designed to build, ship, and run business-critical applications in production at scale.

You can build and share containers and automate the development pipeline from a single environment. Each of these containers represents a piece of the app or system.

Awesome! Let's talk about how Docker makes all of this work at a high level though, because it may still not be clear.

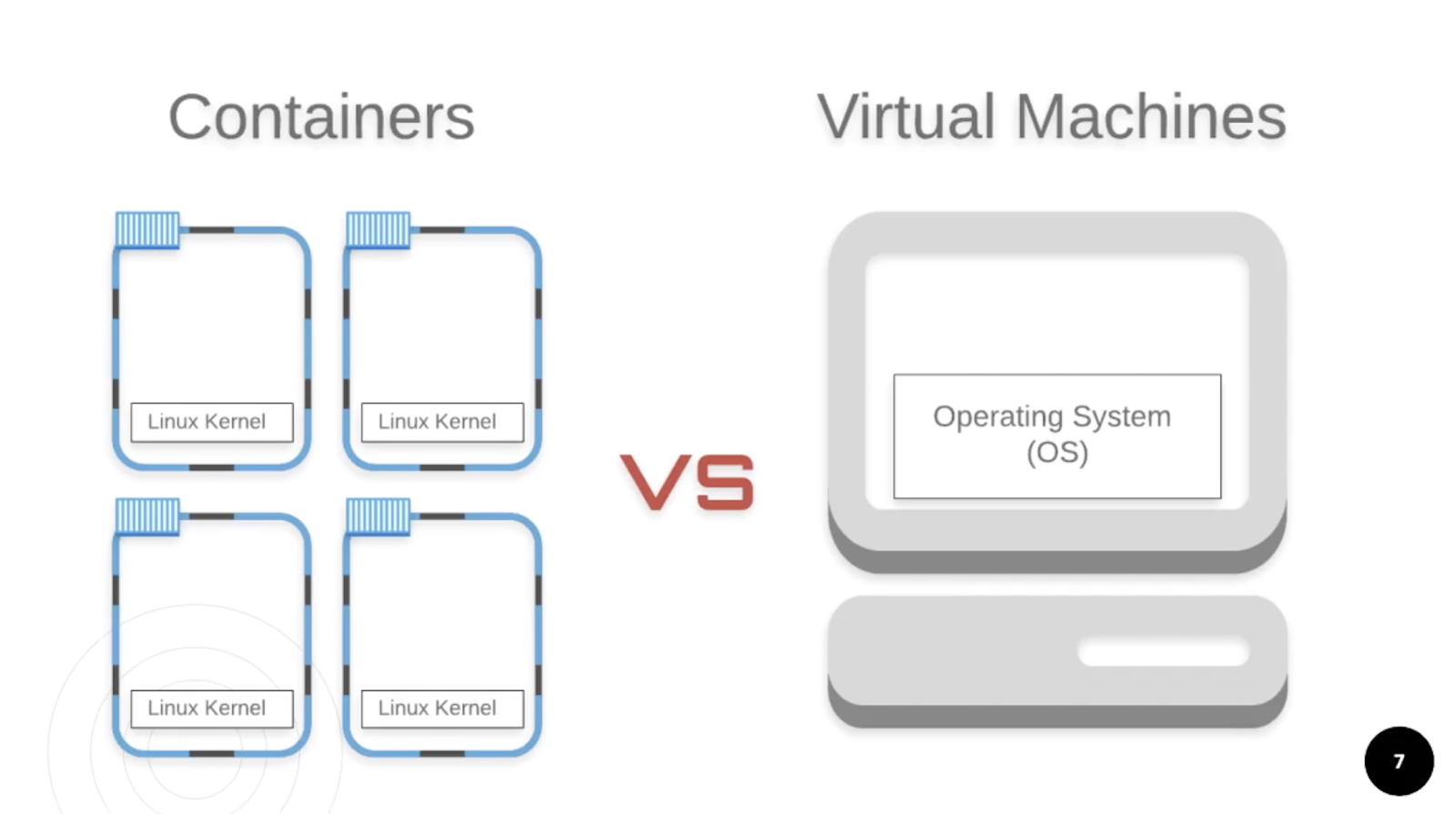

Containers are the building blocks of the containerization world. They let you package only the things you need for your application or service without bundling a whole operating system, the way Virtual Machines do. This makes them faster and more lightweight. Containers also give you control over your environment because you can specify exactly what you want each container to look like by using blueprints called Images.

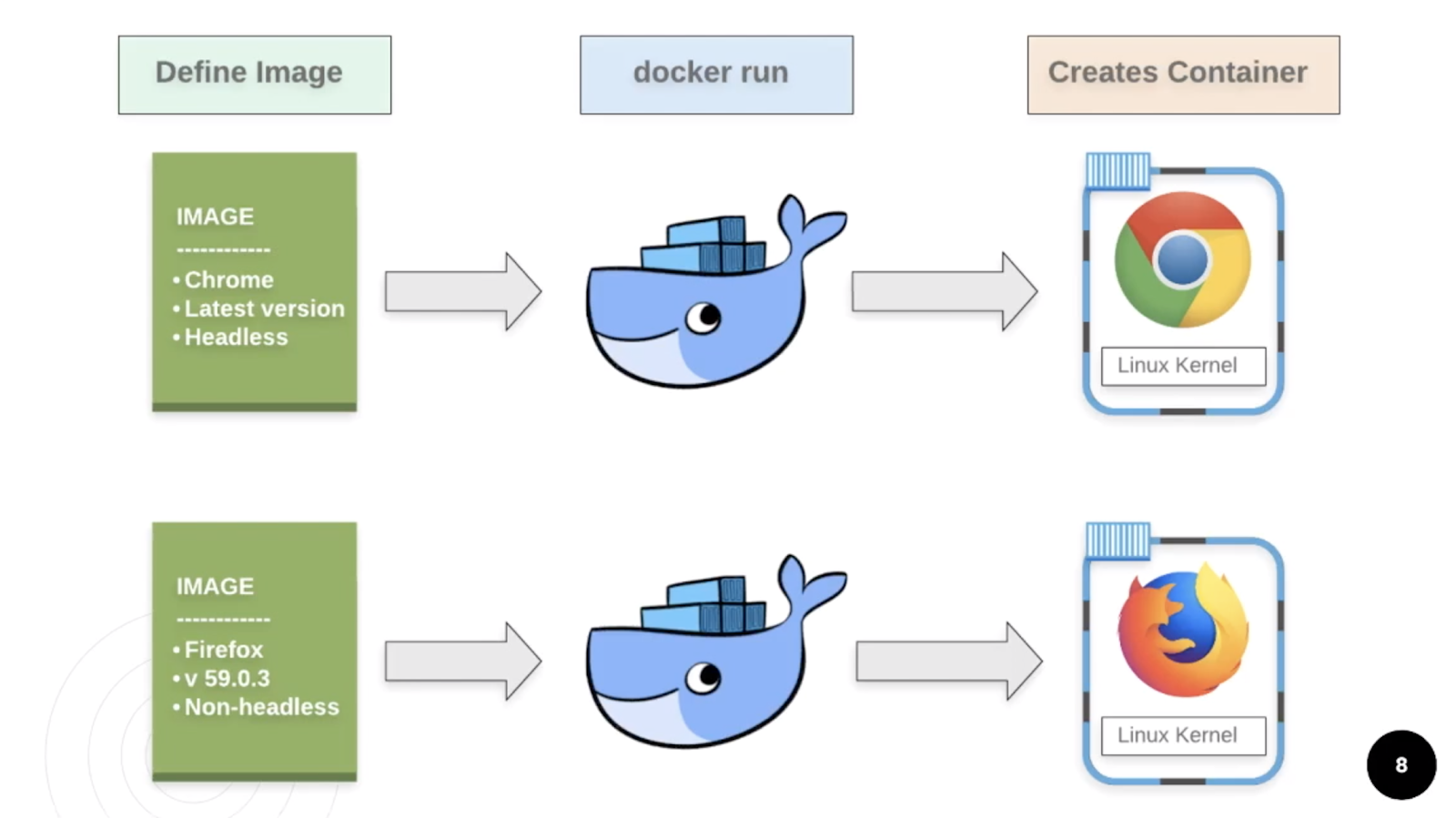

Images are the specs or details used to create containers. Just like how images for VMs let you spin up a Windows instance of Vista or XP, or Linux images let you pick between Ubuntu, Debian, or other distributions, images for Docker let you specify which services, libraries, or dependencies you need for a container.

To make this even easier to understand, you can think of Images as a very detailed shopping list.

If our app was a Peanut Butter and Jelly Sandwich, we would have 3 main ingredients:

- Peanut Butter

- Jelly

- And bread

If we had to make this sandwich manually, it would be up to the author of this poetic, beautiful sandwich to decide where they get these ingredients and which brands and types they want. Because of this, it would be very easy for two authors to end up with drastically different sandwiches! I mean, I always get the wrong brand whenever my wife has me go to the store to get something like tomato sauce or green beans.

Images would solve this by having a very detailed specification for each of these ingredients. There would be no way for me to get the wrong brand or flavor if I knew exactly what to get before even leaving the house!
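To make the shopping list idea a bit more concrete, here is a rough Python sketch. The `ImageRef` class, the `parse_image` helper, and the ingredient names and versions are all hypothetical and made up for this illustration. The one real Docker behavior it mirrors is that an image reference without a tag falls back to `latest`, which is how two people can end up with different sandwiches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageRef:
    """A hypothetical, simplified image reference: a name plus a tag."""
    name: str
    tag: str = "latest"  # Docker assumes "latest" when no tag is given

    @property
    def is_pinned(self) -> bool:
        # "latest" can point to different builds over time, so treat it as unpinned.
        return self.tag != "latest"

    def __str__(self) -> str:
        return f"{self.name}:{self.tag}"


def parse_image(ref: str) -> ImageRef:
    """Split 'name:tag' into its parts (simplified: ignores registry hosts with ports)."""
    name, _, tag = ref.partition(":")
    return ImageRef(name, tag or "latest")


# A vague list: whoever goes shopping decides what "latest" means that day.
vague = [parse_image(item) for item in ["peanut-butter", "jelly", "bread"]]

# A detailed list: every ingredient names its exact (made-up) version.
detailed = [parse_image(item) for item in ["peanut-butter:2.1", "jelly:1.4", "bread:3.0"]]

print([str(image) for image in vague])
# ['peanut-butter:latest', 'jelly:latest', 'bread:latest']
print(all(image.is_pinned for image in detailed))
# True
```

The detailed list is the one that behaves like a good image: anyone who follows it gets the same ingredients every time.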

Without Docker

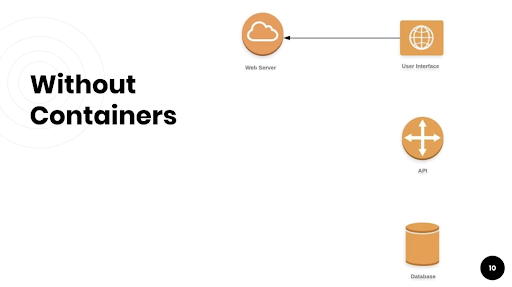

If we look at the structure of this very simple web application, there are four pieces that make up our entire app: the database that stores our information, the API layer that communicates between the UI and the database, the front-end code that is the UI, and the web server that hosts the UI and APIs.

Without containers, organizations would have to manage each of these pieces manually! This is a super simple example for just one environment, but already we have to track:

- Which version of the front-end code are we on

- Which dependencies are required and what are their versions

- Networking the services so they are connected correctly

- Managing services as each one changes

- And the list goes on…

This is why many companies struggle when trying to implement their own CI/CD, and I'm sure these issues sound very familiar to you. Cue the sad music because I imagine some of these will hit close to home.

Have you or a loved one ever been a victim of:

- Developers tripping over each other because they are sharing the same Dev or QA environment?

- Developers being put into a "queue" because they must wait for Developer A to be done with that test environment or instance of a service?

- Bugs that are caused by "the wrong version" of a service or feature flag?

- Bugs that are "expected" because of your test environment?

Everyone has grown used to these issues, and some are even satisfied if more than 50% of the tests pass. I wish I were joking, but these are very real scenarios for many people.

There are many more, but these problems stem from the same issue. We do a poor job of controlling our environments, services, and data because of how manual everything is. Docker and containers do a great job of solving this.

With Docker

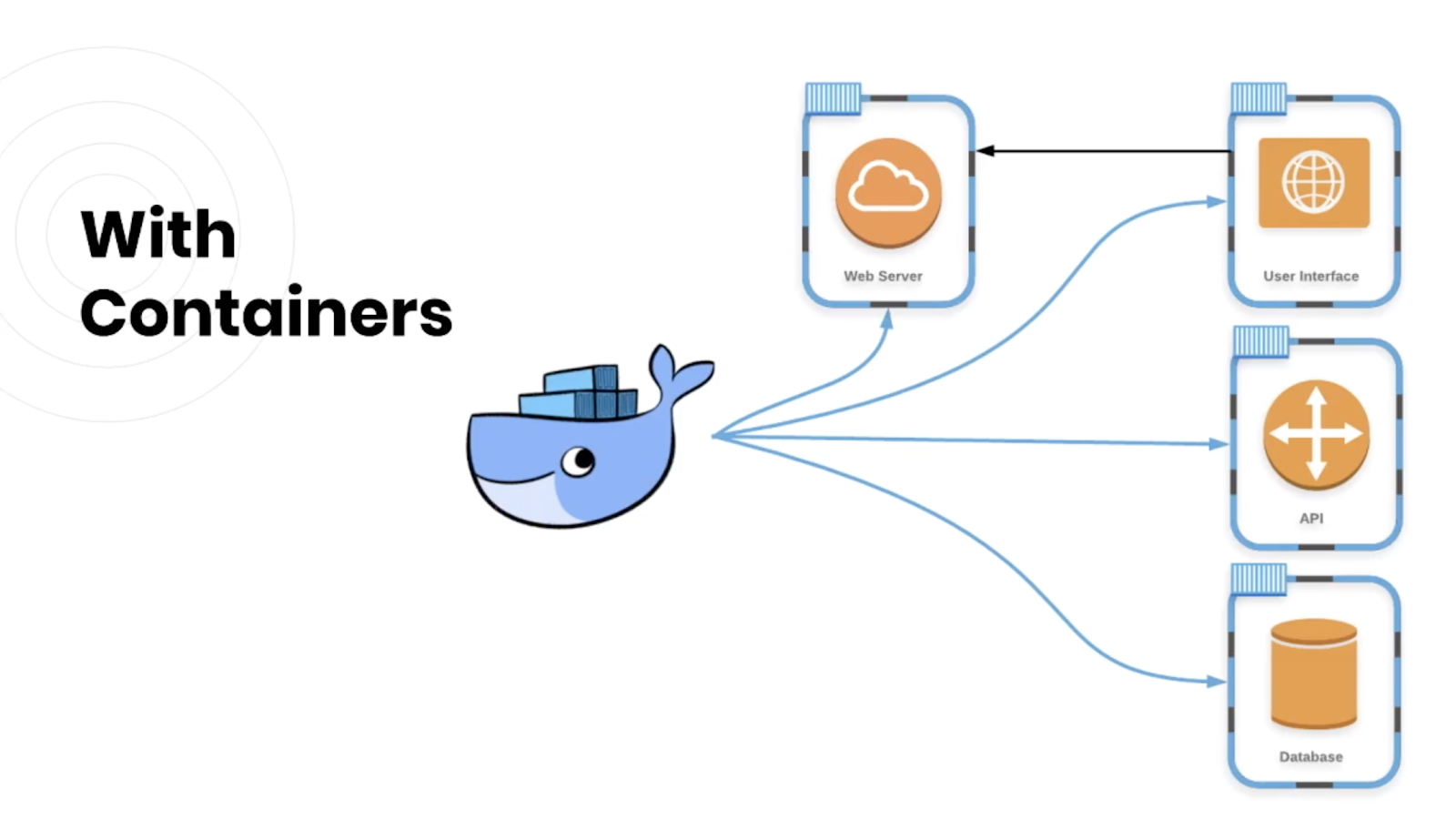

Let's take a look at our simple web app example, but using containers instead. We want our web app's image to have exactly the configuration we specify. Our code is in React + TypeScript, but it manages its own dependencies. Really, all we need is to specify the exact version of our front-end that we want to use.

We'll label it "DEV" so other DEV services can see and use it. We want the latest version from the Dev Branch and we'll automatically link it to the Web Server so the networking is done for us. We even put our Web Server into a container so we can spin both up at the same time and have them automatically connected!

If we defined each of our services as an image, we could containerize all of them and spin up our entire application with one command and have it all connected for us!
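Here is a rough Python sketch of what "define every service, then start it all together" could look like. It only models the idea and does not call Docker. The service names, image names, tags, and the dependencies between services are assumptions made up for this example. In practice, a definition like this usually lives in a Docker Compose file, where `image` and `depends_on` are real keys, and a command such as `docker compose up` starts everything.

```python
from graphlib import TopologicalSorter

# Hypothetical definition of the four-piece web app.
# Image names, tags, and dependencies are made up for illustration.
services = {
    "database":   {"image": "example/database:1.0",   "depends_on": []},
    "api":        {"image": "example/api:dev",        "depends_on": ["database"]},
    "front-end":  {"image": "example/front-end:dev",  "depends_on": ["api"]},
    "web-server": {"image": "example/web-server:1.0", "depends_on": ["front-end", "api"]},
}


def start_order(definitions: dict) -> list[str]:
    """Return the service names ordered so each one starts after its dependencies."""
    graph = {name: set(spec["depends_on"]) for name, spec in definitions.items()}
    return list(TopologicalSorter(graph).static_order())


for name in start_order(services):
    print(f"start {name} from image {services[name]['image']}")
# start database from image example/database:1.0
# start api from image example/api:dev
# start front-end from image example/front-end:dev
# start web-server from image example/web-server:1.0
```

Because the whole application is described in one place, working out what to start, and in which order, becomes something a tool can do for us instead of a checklist we follow by hand.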

Why Docker with Grid?

All of this is very cool and sounds great, but how does this apply to running Selenium tests and why use Docker when some of you already have a Selenium Grid setup?

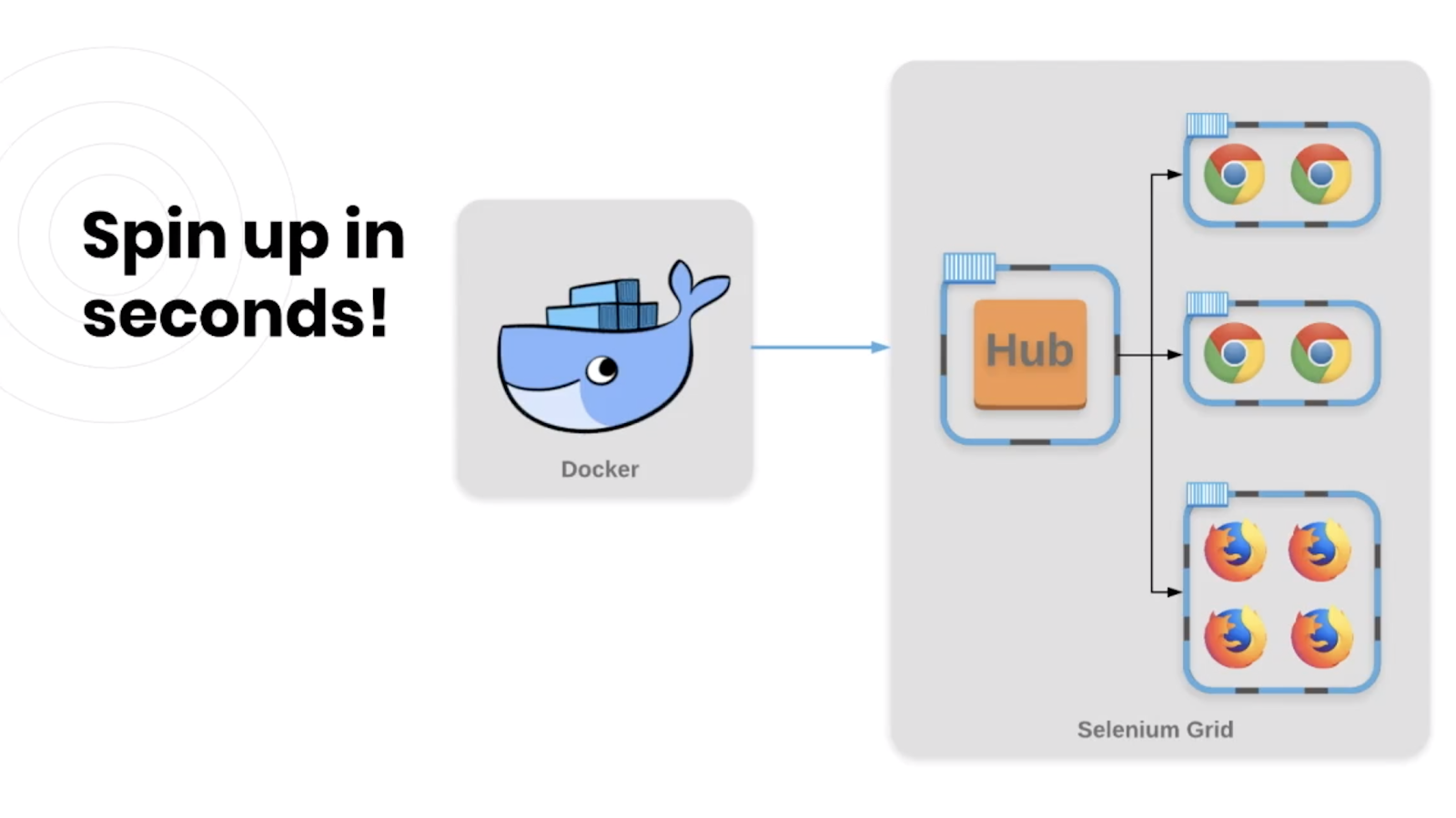

For starters, setting up Selenium Grid is also a manual and tedious task. You need to download and run the Selenium Server, configure your hub and nodes for each machine, and run multiple commands in the command line that can be verbose.

Other problems include not being able to easily manage versions of the grid or browsers, having a hard time managing your hub and nodes because they get into bad states, and having to do a lot of this, again, manually!

In the context of this course, we will be using headless and non-headless Chrome and Firefox images and a Selenium Grid image to spin up as many containers as we need! With a few simple commands, we'll spin up, restart, and tear down an entire grid in seconds.
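As a preview of what "a few simple commands" can look like, here is a rough Python sketch that builds the `docker` commands for a small grid without running them. The network name, container names, and the `latest` tag are assumptions for this example. The `selenium/hub`, `selenium/node-chrome`, and `selenium/node-firefox` images and the `SE_EVENT_BUS_*` variables follow the Selenium Grid 4 setup from the docker-selenium project, so check its README for the version you use. These may not be the exact commands used later in the course.

```python
import shlex

NETWORK = "grid"           # hypothetical network name
HUB_NAME = "selenium-hub"  # hypothetical container name
TAG = "latest"             # assumption: pin an exact version in real use

# Environment variables that tell a Grid 4 node where the hub's event bus is.
NODE_ENV = [
    "-e", f"SE_EVENT_BUS_HOST={HUB_NAME}",
    "-e", "SE_EVENT_BUS_PUBLISH_PORT=4442",
    "-e", "SE_EVENT_BUS_SUBSCRIBE_PORT=4443",
]


def node_names(chrome_nodes: int, firefox_nodes: int) -> list[str]:
    """Container names for every browser node, e.g. chrome-node-1."""
    names = [f"chrome-node-{i}" for i in range(1, chrome_nodes + 1)]
    names += [f"firefox-node-{i}" for i in range(1, firefox_nodes + 1)]
    return names


def grid_up_commands(chrome_nodes: int, firefox_nodes: int) -> list[list[str]]:
    """Commands that create a network, start the hub, then start each node."""
    commands = [
        ["docker", "network", "create", NETWORK],
        ["docker", "run", "-d", "--name", HUB_NAME, "--network", NETWORK,
         "-p", "4444:4444", f"selenium/hub:{TAG}"],
    ]
    for name in node_names(chrome_nodes, firefox_nodes):
        browser = name.split("-")[0]  # "chrome" or "firefox"
        commands.append(["docker", "run", "-d", "--name", name, "--network", NETWORK,
                         *NODE_ENV, f"selenium/node-{browser}:{TAG}"])
    return commands


def grid_down_commands(chrome_nodes: int, firefox_nodes: int) -> list[list[str]]:
    """Commands that force-remove every container, then remove the network."""
    names = [HUB_NAME, *node_names(chrome_nodes, firefox_nodes)]
    return [["docker", "rm", "-f", *names], ["docker", "network", "rm", NETWORK]]


# Print the commands for a grid with two Chrome nodes and one Firefox node.
for command in grid_up_commands(2, 1) + grid_down_commands(2, 1):
    print(shlex.join(command))
```

Changing the two numbers is all it takes to describe a bigger or smaller grid, which is the kind of control that is tedious to get when every hub and node is configured by hand.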