Transcripted Summary

At the end of our last chapter, we finished the minimal amount of code needed to actually run our test. We had the page objects with their full implementations.

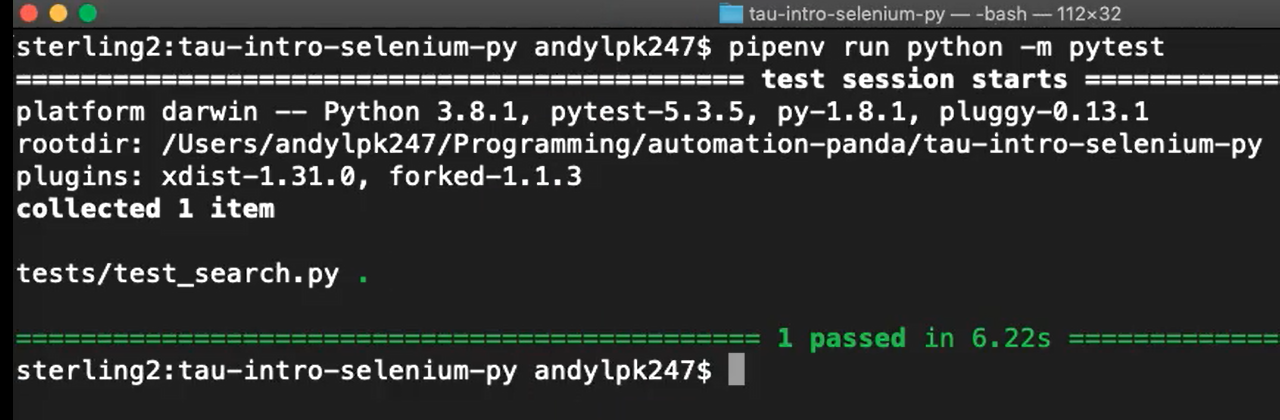

Let's run the test now to make sure that they actually work. We should see the browser pop up as pytest runs. It should complete all the steps automatically.

Nice. You can see here that our test passed.

However, there is one small problem: right now, this test can only run on Chrome.

That's because in our code, we had hard-coded Chrome to be the only WebDriver choice. What if we wanted to test other browsers?

Selenium WebDriver supports all major browsers.

In theory, our test should be able to run successfully against any browser.

You'll need to know which browsers and versions matter most for your product under test.

Furthermore, headless browsers are great for test automation.

A headless browser runs web pages but does not visually render them on the screen. This makes headless browsers run more efficiently. Both Chrome and Firefox have headless modes.

Typically, I use non-headless mode when developing automated tests and then switch to a headless mode when running tests in a continuous integration system.

Browser and headless mode aren't the only choices test automation needs.

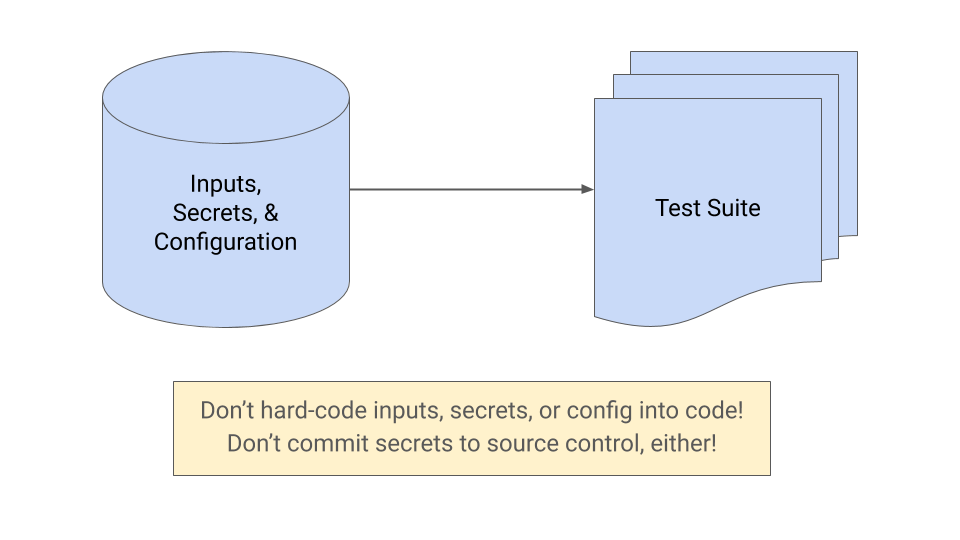

Tests usually need inputs like URLs, usernames, passwords, and even default timeout values.

Inputs need to be passed into the automation when a test suite starts to run.

They should not be hard-coded into the automation code, especially if they are sensitive values like passwords.

They should be handled separately so that their values can easily be changed for different test suites, and so that secrets aren't committed to source control.

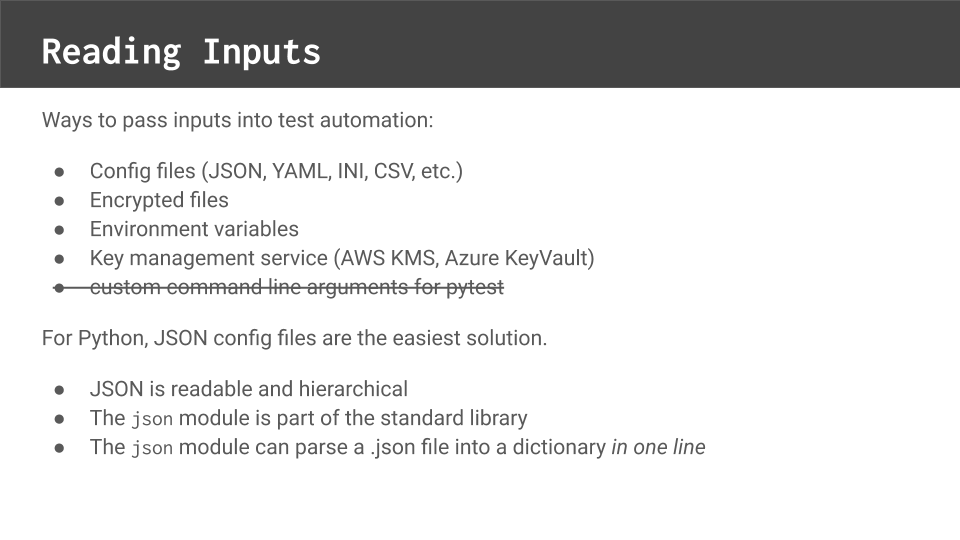

There are many ways to pass inputs into test automation.

One of the simplest ways is to use a config file.

A “config file” is simply a file containing input values. It should be a text-based file with a standard format like JSON or YAML for simplicity, readability, and diffability. The automation can look for config files at a predefined path.

If the config file contains sensitive information, then the file could also be encrypted.

Another way to pass inputs into automation is by using environment variables.

Python can read environment variables using the os module. However, as inputs, environment variables are limited to flat string values, so numbers have to be converted and nested structures aren't possible.
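
As a minimal sketch of reading inputs from environment variables, the snippet below converts a string value to an integer and reads an optional secret. The variable names `TEST_IMPLICIT_WAIT` and `TEST_PASSWORD` and the helper `read_int_env` are hypothetical, chosen only for illustration.

```python
import os

# Simulate a value that the shell or CI system would normally set.
# TEST_IMPLICIT_WAIT is a hypothetical name, not part of the course project.
os.environ['TEST_IMPLICIT_WAIT'] = '10'


def read_int_env(name, default):
    """Read an environment variable as an int; values always arrive as strings."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return int(raw)


implicit_wait = read_int_env('TEST_IMPLICIT_WAIT', 10)

# Secrets can be read the same way; the result is None if the variable is not set.
password = os.environ.get('TEST_PASSWORD')
```

Because every value is a string, each numeric input needs its own conversion step, which is one reason a structured config file can be more convenient.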

A more robust way to read inputs is by using a key management service like AWS KMS or Azure Key Vault.

Key managers store secrets in safe places. Automation can make authenticated calls to receive the secrets. This solution is more complex, but also safer than plain-text config files. You might also need to combine the use of the key manager with another input method like config files.

You might be wondering if pytest can just take in parameters at the command line.

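Although pytest has several helpful command line options, it doesn't accept arbitrary custom arguments out of the box. Each one has to be registered in code through pytest's `pytest_addoption` hook, which is more setup than we need here.

That's why we'll use another solution, like config files or key managers, for custom inputs.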
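
Strictly speaking, custom command line options are possible once they are registered through pytest's `pytest_addoption` hook in conftest.py. The sketch below shows roughly what that looks like, assuming a hypothetical `--browser` option; this course does not take that route and sticks with a config file.

```python
# conftest.py (sketch): pytest calls this hook once at startup.
def pytest_addoption(parser):
    parser.addoption(
        '--browser',          # hypothetical option name, for illustration only
        action='store',
        default='Chrome',
        help='Browser to run the tests against',
    )

# A fixture that accepts pytest's built-in `request` fixture could then
# read the value with:
#     request.config.getoption('--browser')
```

With this in place, the option would be passed as `pytest --browser Firefox`.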

For Python, JSON config files are arguably the easiest solution.

JSON is human-readable and hierarchical. The json module is also part of Python's standard library, and it can parse a .json file into a dictionary in just one line. For our solution, we'll use a config.json file.
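
To make the "one line" claim concrete, here is a small self-contained sketch. It writes a sample file to a temporary directory first so that it can run anywhere; the nested `timeouts` key is a hypothetical example of JSON's hierarchy and is not part of the course's config file.

```python
import json
import os
import tempfile

# Write a small sample file so the example is self-contained.
sample = {"browser": "Chrome", "timeouts": {"implicit_wait": 10}}
path = os.path.join(tempfile.mkdtemp(), 'config.json')
with open(path, 'w') as f:
    json.dump(sample, f)

# The one-line parse: file contents in, dictionary out.
with open(path) as config_file:
    config = json.load(config_file)

# Nested JSON objects become nested dictionaries.
print(config['browser'])
print(config['timeouts']['implicit_wait'])
```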

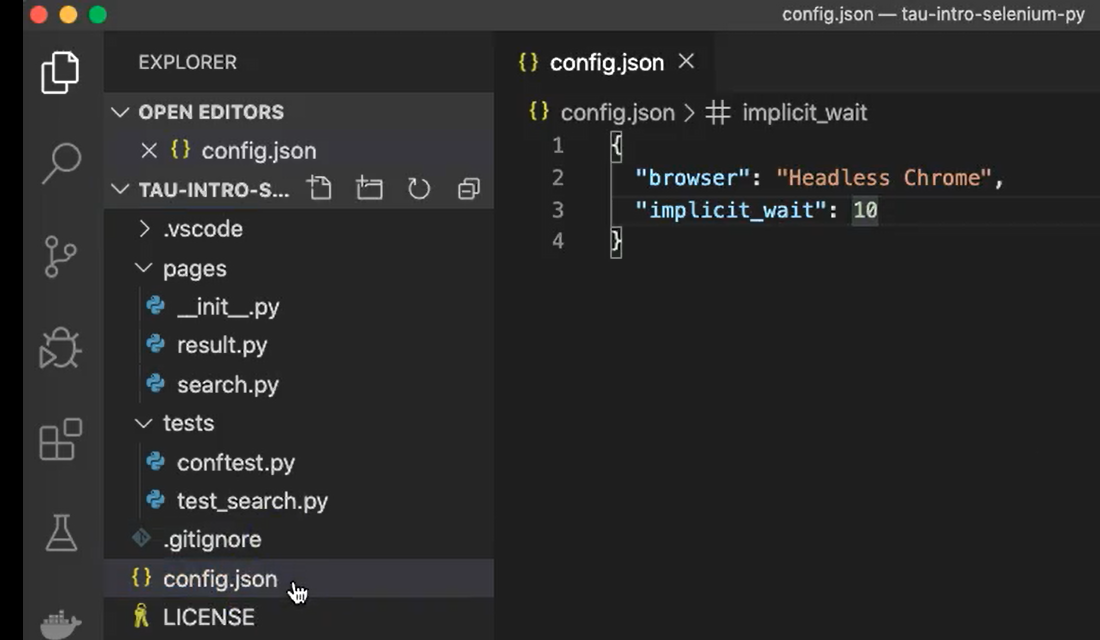

Let's add a config.json file to our project.

I have it open here in Visual Studio Code, and you'll notice I've added a config.json file into the root level of our project.

Our config file has two values.

It has a browser, which I've set to “Headless Chrome”, and it also has an implicit wait time.

# Example Code - config.json

```json
{
  "browser": "Headless Chrome",
  "implicit_wait": 10
}
```

As you recall, in our browser fixture, we hard-coded an implicit wait time of 10 seconds for all interactions.

I'd like to pull that out as an input so it's more easily configurable.

The best way for our tests to read this config file will be to use a pytest fixture.

Let's open conftest.py.

Here, I've added a new config fixture and I've updated the browser fixture.

```python
"""
This module contains shared fixtures.
"""

import json
import pytest
import selenium.webdriver


@pytest.fixture(scope='session')
def config():

    # Read the file
    with open('config.json') as config_file:
        config = json.load(config_file)

    # Assert values are acceptable
    assert config['browser'] in ['Firefox', 'Chrome', 'Headless Chrome']
    assert isinstance(config['implicit_wait'], int)
    assert config['implicit_wait'] > 0

    # Return config so it can be used
    return config
```

At the very top, you'll see that I imported Python's json module. We'll need that for parsing.

Next, you'll see the new config fixture.

You'll notice in the decorator that its scope is set to “session”. The scope has to be passed to `@pytest.fixture` itself; a parameter on the fixture function would not change it.

I set the scope to session because I want this fixture to run only once, before the entire test suite. We don't need to read the same config file over and over again for every single test; that's inefficient. And theoretically, if some malicious party were to change your config file while the test suite runs, it could mess up your whole test suite. So, read it once, be efficient, and be safe.

The config fixture has 3 steps.

First, we need to read the config file.

We read JSON files just like any other files with Python. The only trick is that we use the json.load function to parse the textual content into a Python dictionary. We'll name that config.

If you're not familiar with dictionaries, they're simply key-value lookup data structures.

The second step will be to validate the values that we read in from the config file.

We want to make sure that our values are good before we run any tests. And if we do find any problems, we want to abort right away, rather than wasting time running a whole bunch of tests that are set up to break.

I'm going to make sure that my browser type is one of the supported choices.

I'm going to make sure that my implicit wait is an integer value.

I'm also going to make sure that my implicit wait is a positive value.

Finally, because this is a fixture, I'll need to return that config dictionary object.
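
The three steps (read, validate, return) can also be sketched as plain functions outside of pytest. This version swaps the assert statements for explicit `ValueError` exceptions, which is a design alternative rather than what the course code does: assert statements are skipped when Python runs with the `-O` flag, and explicit exceptions can carry a readable message. The names `SUPPORTED_BROWSERS`, `validate_config`, and `load_config` are hypothetical.

```python
import json

SUPPORTED_BROWSERS = ['Firefox', 'Chrome', 'Headless Chrome']


def validate_config(config):
    """Raise ValueError with a readable message if a value is unacceptable."""
    browser = config.get('browser')
    if browser not in SUPPORTED_BROWSERS:
        raise ValueError(f'Unsupported browser: {browser!r}')

    wait = config.get('implicit_wait')
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(wait, int) or isinstance(wait, bool) or wait <= 0:
        raise ValueError(f'implicit_wait must be a positive integer, got {wait!r}')

    return config


def load_config(path='config.json'):
    """Read the JSON file, validate its values, and return the dictionary."""
    with open(path) as config_file:
        return validate_config(json.load(config_file))
```

A session-scoped fixture could simply return `load_config()`, keeping the validation logic testable on its own.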

Next, let's update the browser fixture. We'll see right away how it's changed.

```python
@pytest.fixture
def browser(config):

    # Initialize the WebDriver instance
    if config['browser'] == 'Firefox':
        b = selenium.webdriver.Firefox()
    elif config['browser'] == 'Chrome':
        b = selenium.webdriver.Chrome()
    elif config['browser'] == 'Headless Chrome':
        opts = selenium.webdriver.ChromeOptions()
        opts.add_argument('headless')
        b = selenium.webdriver.Chrome(options=opts)
    else:
        raise Exception(f'Browser "{config["browser"]}" is not supported')

    # Make its calls wait for elements to appear
    b.implicitly_wait(config['implicit_wait'])

    # Return the WebDriver instance for the setup
    yield b

    # Quit the WebDriver instance for the cleanup
    b.quit()
```

First, you'll see that the browser fixture now depends upon the config fixture.

This means that the browser fixture will run after the config fixture runs, and it will receive the dictionary that the config fixture returns.

Also note, even though the config fixture will only run one time for the entire test suite, this browser fixture will still run once for each test case.

The first step, initializing the WebDriver instance, looks a bit more complex than it did before.

What makes it more complex is that instead of just hard-coding Chrome as my default browser, I now have to check the config's browser value to see which browser was chosen.

I use an if/elif/else chain to construct the appropriate WebDriver instance.

For “Firefox”, I make a Firefox instance.

For “Chrome”, I make a Chrome instance.

And for “Headless Chrome”, I need to use `ChromeOptions` with the “headless” argument so that Chrome runs as a headless browser. Then I just pass in those `options` when I initialize the Chrome WebDriver.

If the browser choice isn't valid, I raise an exception to end the test safely.
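
As the list of supported browsers grows, the if/elif chain can be replaced by a dictionary that maps each name to a factory callable. The sketch below illustrates that alternative, not the course's approach. It uses stand-in factories that return strings so it runs without Selenium installed; in conftest.py the values would be callables that construct WebDriver instances. `make_browser` and `FAKE_FACTORIES` are hypothetical names.

```python
def make_browser(config, factories):
    """Look up the factory for the configured browser and call it."""
    name = config['browser']
    try:
        factory = factories[name]
    except KeyError:
        raise ValueError(f'Browser "{name}" is not supported') from None
    return factory()


# Stand-in factories so the sketch runs without Selenium installed.
# Real factories would build and return WebDriver instances instead.
FAKE_FACTORIES = {
    'Firefox': lambda: 'firefox-driver',
    'Chrome': lambda: 'chrome-driver',
    'Headless Chrome': lambda: 'headless-chrome-driver',
}

driver = make_browser({'browser': 'Headless Chrome'}, FAKE_FACTORIES)
```

Adding a new browser then means adding one dictionary entry rather than another branch.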

The second step also has a little bit of a change where we set the implicit wait time.

Instead of hard-coding it to 10, I now set it to the implicit wait value from the config.

Other than that, the fixture is the same. We still yield the browser instance and we still quit at the end of a test.

Let's rerun our test, but now with Headless Chrome, as set in our config file.

```json
{
  "browser": "Headless Chrome",
  "implicit_wait": 10
}
```

As the test runs, the browser will become active, but we won't actually see it pop up on the screen like we did with regular Chrome.

Here, we can see the test still passes. So, all the changes we made for the config input work. Very nice.

Notice that when we added the config file to our solution, we didn't need to change any code in the test case function or in the page objects.

The new WebDriver instance seamlessly passed through and worked. A smooth, sensible separation of concerns is a hallmark of good framework design.