Transcripted Summary

Let's talk about test speed.

Web UI tests are slow. They run much slower than unit tests and service API tests because they need to launch and interact with a live browser.

Even though our simple DuckDuckGo search test takes only a few seconds to run, most Web UI tests in the industry average 30 to 60 seconds.

When test suites have hundreds to thousands, and maybe even tens of thousands of tests, slow tests become a huge problem. Run one after another, one hundred 60-second tests will take an hour and 40 minutes to complete. 1,000 will take over 16 hours to complete.
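To make that arithmetic concrete, here is a minimal sketch that computes the serial run time for a suite. The function name `serial_runtime` is made up for illustration, and it assumes every test takes exactly the same time and that tests run strictly one after another.

```python
from datetime import timedelta


def serial_runtime(num_tests, seconds_per_test=60):
    """Total wall-clock time when tests run one after another."""
    return timedelta(seconds=num_tests * seconds_per_test)


if __name__ == "__main__":
    for count in (100, 1_000, 10_000):
        print(f"{count:>6} tests -> {serial_runtime(count)}")
```

Under those assumptions, 100 tests come to 1:40:00 and 1,000 tests come to 16:40:00, matching the figures above.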

Slow tests mean longer feedback time for developers and more painful integrations.

Teams should try to make tests run in as short a time as possible.

Let's inflict a bit of this pain on ourselves by adding more tests.

We can easily add more test cases by parameterizing our tests with multiple search phrases.

We just need to decorate our test function with @pytest.mark.parametrize.

# Example Code - tests/test_search.py

    import pytest

    from pages.result import DuckDuckGoResultPage
    from pages.search import DuckDuckGoSearchPage


    @pytest.mark.parametrize('phrase', ['panda', 'python', 'polar bear'])
    def test_basic_duckduckgo_search(browser, phrase):
        search_page = DuckDuckGoSearchPage(browser)
        result_page = DuckDuckGoResultPage(browser)

        # Given the DuckDuckGo home page is displayed
        search_page.load()

        # When the user searches for the phrase
        search_page.search(phrase)

        # Then the search result query is the phrase
        assert phrase == result_page.search_input_value()

        # And the search result links pertain to the phrase
        titles = result_page.result_link_titles()
        matches = [t for t in titles if phrase.lower() in t.lower()]
        assert len(matches) > 0

        # And the search result title contains the phrase
        # (Putting this assertion last guarantees that the page title will be ready)
        assert phrase in result_page.title()

The first argument is a comma-separated string of argument names. The second argument is a list of values or tuples.

Pytest will run the test function once for each entry in the list, and it will substitute each value or tuple for the named arguments.

Our test case will run three times for the phrases "panda," "python," and "polar bear."

Pytest passes the value in by argument name, which for this test is "phrase." The test can then use that value.
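Our search test only uses a single argument name, so here is a minimal sketch of the multi-argument form, where each list entry is a tuple. The `normalize` helper and the test data are made up for illustration and are not part of the course project.

```python
import pytest


def normalize(phrase):
    """Hypothetical helper: trim and lowercase a search phrase."""
    return phrase.strip().lower()


# Two argument names in one comma-separated string; each tuple supplies both.
@pytest.mark.parametrize('raw, expected', [
    ('Panda', 'panda'),
    ('  Python ', 'python'),
    ('POLAR BEAR', 'polar bear'),
])
def test_normalize(raw, expected):
    assert normalize(raw) == expected
```

Pytest would collect this as three separate test cases, one per tuple.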

Let's run our parameterized tests. We'll use Headless Chrome.

There's the first one….

There's a second one. Wow, it's slow…

There we go, there's the third one.

So, we can see three tests took 19 seconds. That's still pretty good compared to industry averages, but it's also not great. You felt how painful it was to sit here and wait for just three small tests to complete.

So how can we speed up tests?

The best way to shorten total testing time is to run tests in parallel.

Parallel execution can be challenging to set up, but it's the only practical way to run large suites of slower tests.

Here's some advice for parallel testing.

First, use the pytest-xdist plugin to add parallel testing to pytest.

Not only can pytest-xdist control multiple test worker processes on one machine, but it can also distribute them across multiple machines. You can easily install it using pipenv install.

Next, make sure all tests are truly independent.

Sometimes one test is written to depend upon the setup or the outcome of another test. That's a big fat no-no. Each test should be able to run by itself without any other tests.

Tests should also be able to run in any order. If tests aren't independent, then they will break when run in parallel.
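One way to picture independence: each test builds the state it needs rather than inheriting it from an earlier test. The sketch below is plain Python rather than pytest, and `new_cart`, both test functions, and `run_in_random_order` are invented for illustration. It runs the tests in a shuffled order to show that the order doesn't matter.

```python
import random


def new_cart():
    """Hypothetical setup: every test gets its own fresh cart."""
    return []


def test_add_item():
    cart = new_cart()
    cart.append('bamboo')
    assert cart == ['bamboo']


def test_cart_starts_empty():
    # Would fail if it shared a cart with test_add_item and ran second.
    cart = new_cart()
    assert cart == []


def run_in_random_order(tests, seed=None):
    """Run each test once in a shuffled order; return the order used."""
    order = list(tests)
    random.Random(seed).shuffle(order)
    for test in order:
        test()
    return [test.__name__ for test in order]


if __name__ == "__main__":
    print(run_in_random_order([test_add_item, test_cart_starts_empty]))
```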

Furthermore, make sure that tests have no collisions. Collisions happen when tests access shared state.

For example, if one test changes a user's password while another test tries to log in as the same user with the original password, then the second test will fail.
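One common way to avoid this kind of collision is to give each test its own data. Here is a minimal sketch, assuming a hypothetical `unique_username` helper. The `worker_id` argument stands in for the worker name that pytest-xdist exposes through its `worker_id` fixture (values like "gw0"), but here it is just a plain string.

```python
import uuid


def unique_username(worker_id, prefix='testuser'):
    """Hypothetical helper: build a username no other test will reuse."""
    # uuid4 keeps names distinct even for tests on the same worker.
    return f"{prefix}-{worker_id}-{uuid.uuid4().hex[:8]}"


if __name__ == "__main__":
    print(unique_username('gw0'))
    print(unique_username('gw1'))
```

A test that changes a password would then create and use its own user, leaving every other test's user untouched.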

Finally, make sure each test individually has good performance.

Use smart waits instead of hard sleeps

Optimize navigation using direct URLs

Call APIs instead of using screen interactions to populate test data

Saving even a few seconds per test case can reduce test suite runs by minutes or even hours.
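To illustrate the first tip, here is a rough sketch of what a smart wait does: it polls a condition and returns as soon as the condition holds, instead of always sleeping for the full duration. `wait_until` is a made-up helper for illustration. With Selenium you would normally use the built-in `WebDriverWait(driver, timeout).until(...)` rather than writing your own.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.1):
    """Poll `condition` until it returns a truthy value or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)


if __name__ == "__main__":
    ready_at = time.monotonic() + 0.3  # pretend the page is ready after 0.3 s
    start = time.monotonic()
    wait_until(lambda: time.monotonic() >= ready_at, timeout=5)
    print(f"waited {time.monotonic() - start:.1f} s instead of a fixed 5 s")
```

A hard sleep always costs the full duration, while a wait like this only costs as long as the page actually takes.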

Let's try to run our parameterized tests in parallel.

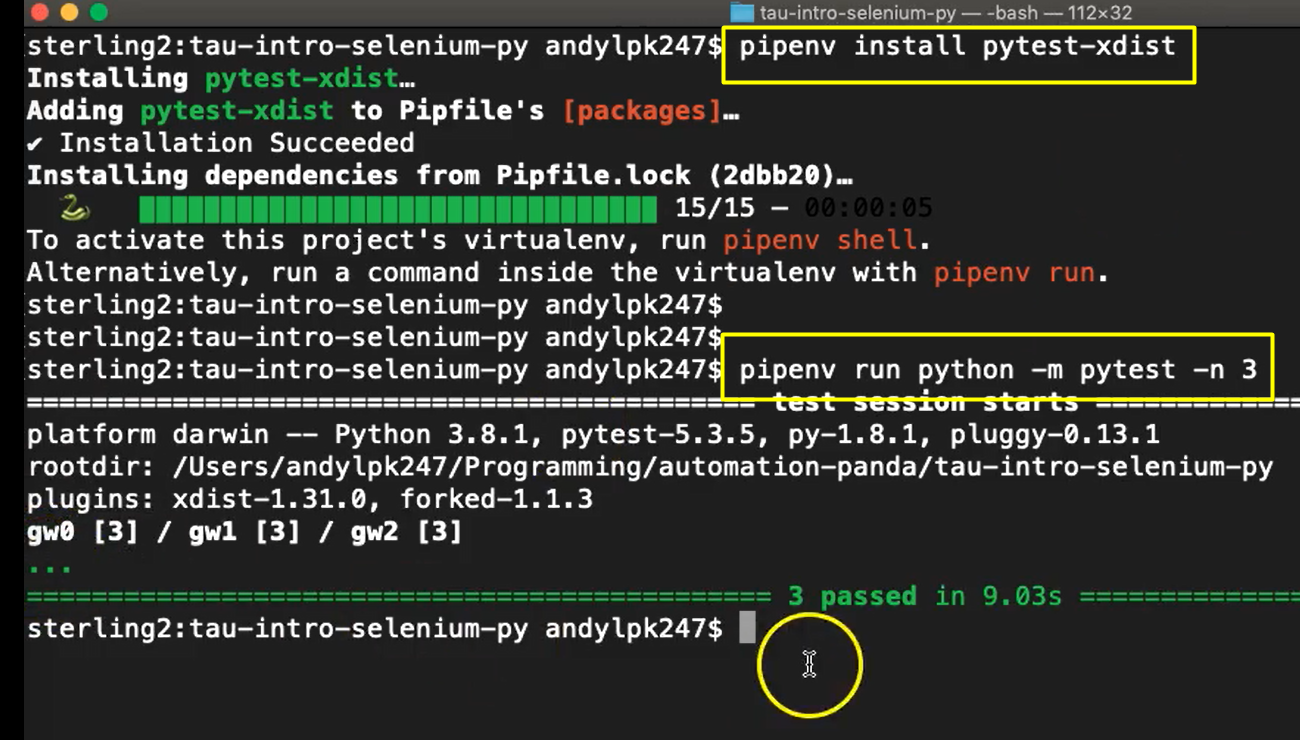

First, we'll need to install the pytest-xdist package using pipenv install pytest-xdist. It should only take about a minute.

And there we go, the plugin's installed.

Next, we'll run the pytest command. To run tests in parallel, use the -n option followed by the number of worker processes you want to run.

pipenv run python -m pytest -n 3

Since we have three parameterized tests, I'll run with three workers. And we're running with Headless Chrome.

So immediately we'll see that the pytest output is a little bit different than we saw before.

We also note that the tests ran much more quickly: three tests in about 9 seconds. That's a lot better than 19. Imagine if we had, say, a hundred tests. We'd be saving a lot of time.
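As a back-of-the-envelope check on those numbers, the sketch below computes the observed speedup and an idealized parallel run time. Both function names are invented here, and the ideal estimate assumes equal-length tests and zero startup overhead, which real runs never quite achieve.

```python
import math


def speedup(serial_seconds, parallel_seconds):
    """How many times faster the parallel run was."""
    return serial_seconds / parallel_seconds


def ideal_parallel_seconds(num_tests, seconds_per_test, workers):
    """Best case: tests split evenly across workers with no overhead."""
    return math.ceil(num_tests / workers) * seconds_per_test


if __name__ == "__main__":
    # Timings from the two runs: 19 s serially, 9 s with three workers.
    print(f"observed speedup: {speedup(19, 9):.1f}x")
    # One hundred 60-second tests on three workers, best case.
    print(f"ideal: {ideal_parallel_seconds(100, 60, 3) / 60:.0f} minutes")
```

The observed speedup works out to roughly 2.1x rather than a full 3x, most likely because each worker still pays its own startup cost, such as launching a browser.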

Running tests on one machine is convenient, but it doesn't scale well for large-scale parallel testing.

That's where Selenium Grid can help.

Selenium Grid is a free, open source tool from the Selenium Project for running remote WebDriver sessions.

A grid has one hub that receives all requests, as well as multiple nodes with different browsers, operating systems, and versions. Automation can request a session with specific criteria from the hub, and the hub will allocate an available session from one of its nodes.

Selenium Grid enables not only parallel testing but also multi-config testing.

For example, tests running from a Windows machine could request sessions for Safari on a macOS machine.
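In code, requesting a session from a grid looks roughly like the sketch below. The hub address, the `/wd/hub` path, and both helper names are assumptions for illustration, so check your own grid's URL. It uses Selenium's `webdriver.Remote`, and the import is deferred so the module still loads where Selenium isn't installed.

```python
def hub_url(host='localhost', port=4444):
    """Assumed hub address; adjust to match your own grid."""
    return f"http://{host}:{port}/wd/hub"


def remote_chrome(url):
    """Ask the hub at `url` for a headless Chrome session on any free node."""
    from selenium import webdriver  # deferred: needs the selenium package

    options = webdriver.ChromeOptions()
    options.add_argument('--headless')
    return webdriver.Remote(command_executor=url, options=options)


if __name__ == "__main__":
    driver = remote_chrome(hub_url())  # requires a running grid
    try:
        driver.get('https://www.duckduckgo.com')
        print(driver.title)
    finally:
        driver.quit()
```

The test code stays the same as with a local browser. Only the way the WebDriver instance is created changes.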

There are two ways to use Selenium Grid.

First, you could choose to set up your own instance and manage the infrastructure yourself.

On the other hand, you could pay for it as a service.

Several companies like Sauce Labs, SmartBear CrossBrowserTesting, and LambdaTest all provide cloud-based WebDriver sessions with extra features like logging, videos, and test reports. These services can be a great solution if your team needs to test multiple configurations or doesn't want to spend resources on infrastructure.

If you want to learn how to set up your own Selenium Grid instance with Docker, check out the Test Automation University course entitled Scaling Tests with Docker. Carlos Kidman does a great job showing how to quickly spin up Docker containers to build Selenium Grids with any number of nodes.

If you want to learn more specifically about parallel testing, check out my article To Infinity and Beyond: A Guide to Parallel Testing on automationpanda.com. That article goes into detail about prepping your tests for parallel testing and different ways to scale your tests.

Well, congratulations, you made it to the end of the course!

I really hope you learned a lot. But the fun doesn't stop here.

You now have a test automation solution written in Python that can easily be extended with more tests.

Since practice makes perfect, why don't you try to automate a few more DuckDuckGo tests?

Independent Exercises

The guided course covered one very basic search test, but DuckDuckGo has many more features. Try to write some new tests for DuckDuckGo independently. Here are some suggestions:

search for different phrases

search by clicking the button instead of typing RETURN

click a search result

expand "More Results" at the bottom of the result page

verify auto-complete suggestions pertain to the search text

search by selecting an auto-complete suggestion

search a new phrase from the results page

do an image search

do a video search

do a news search

change settings

change region

These tests will require new page objects, locators, and interaction methods. See how many tests you can automate on your own! If you get stuck, ask for help.

You could add tests for other web apps, too.