Transcripted Summary

Organizing our actual tests is more than naming conventions and folder structures.

It should include adding contextual information, so that our tests can be more easily understood and maintained.

It also means having the flexibility to run tests in ways that best help inform our teams, while minimizing distractions from non-relevant information, such as tests that are flaky or that have expected failures from unsupported features or operating systems.

Sometimes it can be challenging to convey what a test is doing, in just the test name. Or maybe your team has a specific naming convention, and you'd like to give a little more context when people go to read your tests.

# Description & Author

In cases like this, adding a description would be useful.

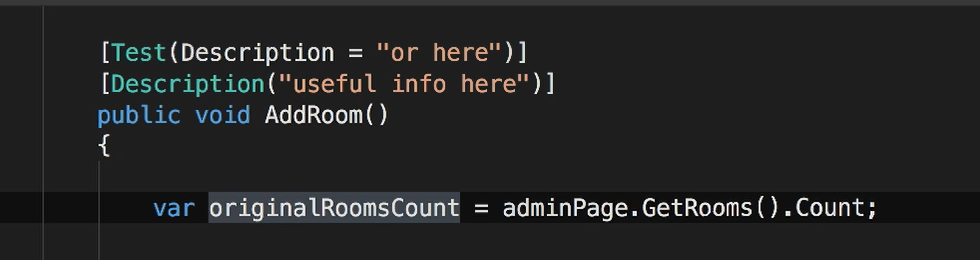

Descriptions can be added to both tests and fixtures in NUnit, in two ways.

The first method is to add the [Description] attribute and enter your description text.

The second option is to actually use the [Test] or [TestFixture] attribute and pass it a description parameter.
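As a minimal sketch of both ways of adding a description, assuming NUnit 3 and a made-up fixture (the class name, method names, and description text are hypothetical, not taken from the course project):

```csharp
using NUnit.Framework;

// Hypothetical fixture; the description is passed to [TestFixture] directly.
[TestFixture(Description = "Checks basic string casing behavior")]
public class StringCasingTests
{
    // First way: the standalone [Description] attribute.
    [Test]
    [Description("Upper-casing a lowercase word returns it in capitals")]
    public void ToUpper_ReturnsCapitals()
    {
        Assert.That("nunit".ToUpperInvariant(), Is.EqualTo("NUNIT"));
    }

    // Second way: the Description property on the [Test] attribute.
    [Test(Description = "Lower-casing a capitalized word returns it in lowercase")]
    public void ToLower_ReturnsLowercase()
    {
        Assert.That("NUNIT".ToLowerInvariant(), Is.EqualTo("nunit"));
    }
}
```

Either form stores the same description on the test, so which one to use is mostly a matter of team preference.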

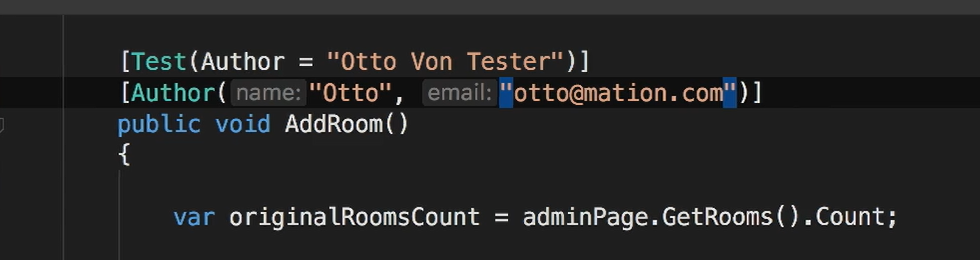

In larger teams, it can be useful to include ownership information alongside your tests.

Similar to description, you can do this by passing the [Test] attribute an argument for Author, or by using the [Author] attribute. And this can be applied to tests, or test fixtures as a whole.

The [Author] attribute has a name parameter and an optional email parameter.

And it's not something you'll see in the test output, but author is a property you could use as a filter when running tests.
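A short sketch of the ownership options described above, assuming NUnit 3. The names and email address are placeholders invented for illustration:

```csharp
using NUnit.Framework;

// [Author] on the fixture marks ownership for the fixture as a whole.
// The second argument (email) is optional.
[TestFixture]
[Author("Jane Doe", "jane.doe@example.com")]
public class OwnershipExampleTests
{
    // Author passed as an argument to the [Test] attribute.
    [Test(Author = "John Smith")]
    public void Sum_OfTwoNumbers_IsCorrect()
    {
        Assert.That(2 + 3, Is.EqualTo(5));
    }

    // Author set with the standalone attribute, name only.
    [Test]
    [Author("Jane Doe")]
    public void Product_OfTwoNumbers_IsCorrect()
    {
        Assert.That(2 * 3, Is.EqualTo(6));
    }
}
```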

# Category & Order

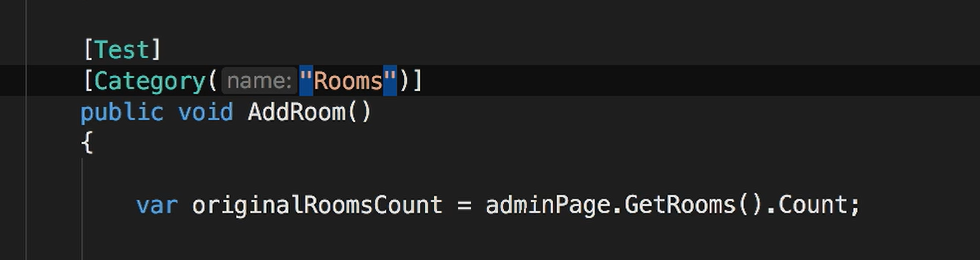

As your test suite grows, it could be handy to be able to run a specific group of tests, especially when you have groups or classifications of tests that cut across multiple fixtures.

We can do this using the [Category] attribute on either tests or fixtures.

And to use this, we just add the [Category] attribute and include a category name.
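For illustration, here is a small sketch of categories applied at both levels, assuming NUnit 3. The category names "Smoke" and "Slow" and the fixture itself are hypothetical:

```csharp
using NUnit.Framework;

// A category on the fixture applies to every test inside it.
[TestFixture]
[Category("Smoke")]
public class CategoryExampleTests
{
    [Test]
    public void Length_OfWord_IsCounted()
    {
        Assert.That("room".Length, Is.EqualTo(4));
    }

    // A test can carry its own category in addition to the fixture's.
    [Test]
    [Category("Slow")]
    public void Reverse_OfWord_IsMirrored()
    {
        char[] letters = "room".ToCharArray();
        System.Array.Reverse(letters);
        Assert.That(new string(letters), Is.EqualTo("moor"));
    }
}
```

Because the same category name can appear in any fixture, this is what lets a group of tests cut across fixture boundaries.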

Generally, you don't want to rely on the order your tests are going to be executed.

And there are a variety of reasons why it's usually best not to rely on that order, but from a self-centered perspective, consider the potential pain you're going to have maintaining those tests.

If you have a problem in one test, how does that affect the other tests? And then how much more difficult is it going to be trying to debug a single test, when they rely on other pieces of other tests?

By default, NUnit runs tests in each fixture alphabetically.

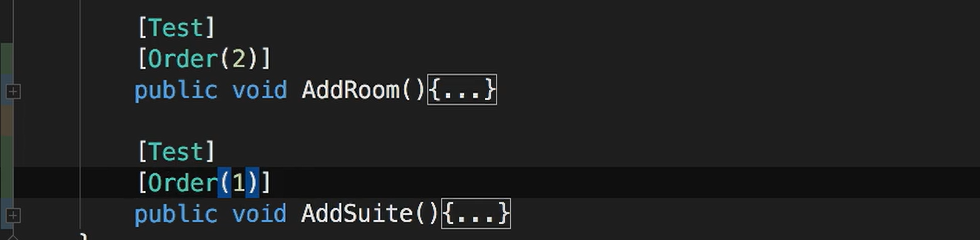

And if you do need to have a specific test order, don't worry, you won't need an elaborate naming convention.

You can use the [Order] attribute on both tests and fixtures, and just pass in an integer for the order in which you want them executed.

Using the [Order] attribute, tests are going to be run in ascending order, and any tests with the [Order] attribute are going to be run before tests without it.

If there are multiple tests that use the same order number, there's no guarantee in which order they're going to be run.
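The ordering rules above can be sketched as follows, assuming a recent NUnit 3 release (the fixture and method names are made up for this example):

```csharp
using NUnit.Framework;

// [Order] on a fixture orders it relative to other ordered fixtures.
[TestFixture, Order(1)]
public class OrderExampleTests
{
    // Runs second among the ordered tests, despite sorting first by name.
    [Test, Order(2)]
    public void A_RunsSecond()
    {
        Assert.That(1 + 1, Is.EqualTo(2));
    }

    // Runs first: lowest order value.
    [Test, Order(1)]
    public void B_RunsFirst()
    {
        Assert.That(2 + 2, Is.EqualTo(4));
    }

    // No [Order]: runs after all tests that have one.
    [Test]
    public void C_RunsAfterOrderedTests()
    {
        Assert.That(3 + 3, Is.EqualTo(6));
    }
}
```

Each test here is still independent of the others, so the fixture keeps passing even if the ordering is removed.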

# Platform

You may have tests that only need to be run on certain operating systems or on certain .NET versions.

The [Platform] attribute lets you filter your tests automatically at execution time by operating system, .NET runtime, or architecture.

To specify a platform, we add the [Platform] attribute, and then pass the platform name as a String.

For multiple platforms you can pass a comma separated String of platform names.

The attribute also supports parameters for including and excluding platforms, as well as providing a reason to explain that choice.
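Here is a hedged sketch of the three usages just described, assuming NUnit 3. The platform names follow the spellings used in the NUnit documentation; the test names and the reason text are invented for illustration:

```csharp
using NUnit.Framework;

[TestFixture]
public class PlatformExampleTests
{
    // Single platform passed as a string.
    [Test]
    [Platform("Win")]
    public void RunsOnlyOnWindows()
    {
        Assert.That(1 + 1, Is.EqualTo(2));
    }

    // Multiple platforms as one comma-separated string.
    [Test]
    [Platform("Win,Linux")]
    public void RunsOnWindowsOrLinux()
    {
        Assert.That(2 + 2, Is.EqualTo(4));
    }

    // Exclude a platform and record why.
    [Test]
    [Platform(Exclude = "MacOsX", Reason = "Feature is not supported on macOS yet")]
    public void SkippedOnMac()
    {
        Assert.That(3 + 3, Is.EqualTo(6));
    }
}
```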

The NUnit documentation has a list of supported platforms, but it looks a little out of date. If you actually look at the source on GitHub, you can find the actual list inside the platform helper class.

When you run tests that use this attribute, NUnit checks the platform information of the machine where the tests are actually running and compares it to the values you've specified.

So only tests that can run will be run.

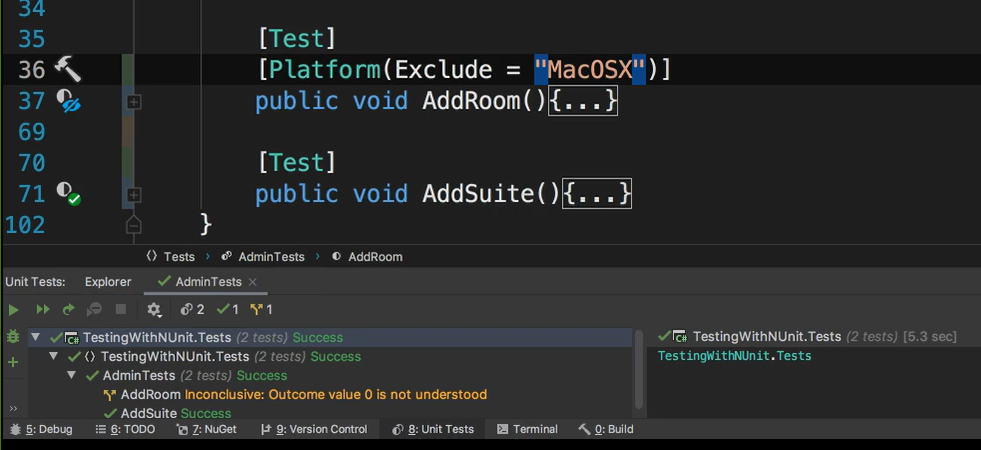

Since I'm using a Mac for this course, my platform is MacOSX, and if I add the [Platform] attribute to a test and exclude my platform and try to run the tests, you'll see that AddRoom is not run and is flagged inconclusive.

Per the NUnit docs, the test should be skipped and not affect the outcome of the test run at all.

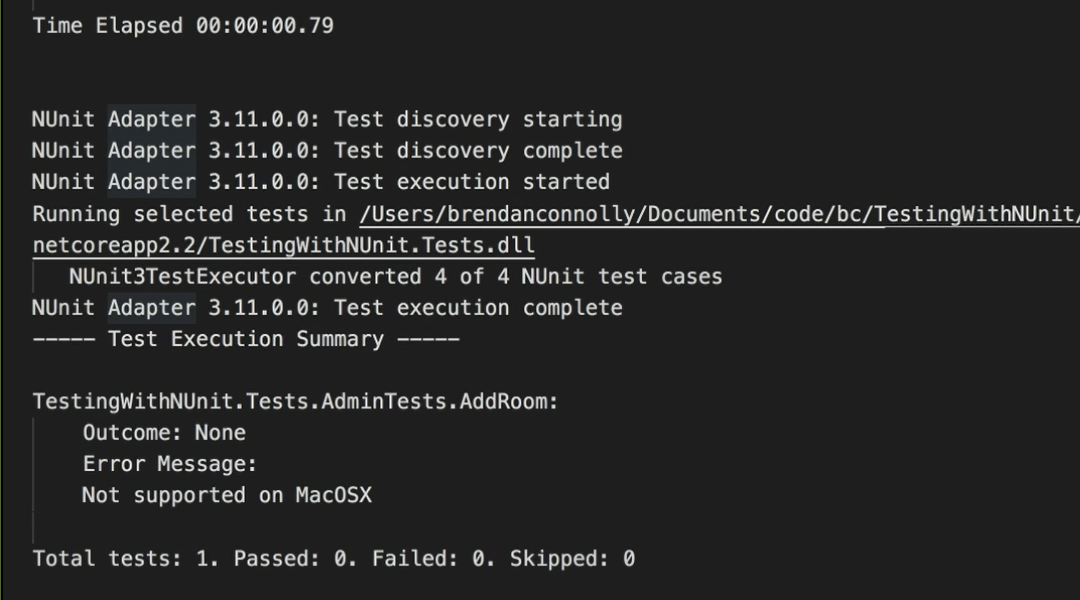

We get a better sense of that by looking at the actual console output.

And you can see the outcome was “None”, with the error message “Not supported on MacOSX”.

# Skipping & Ignoring

As you build out your test suite, there are times when tests get added but by default you don't want to run them.

They might be slow or unique cases, so you only want to run them when you specifically choose to, rather than including them every time you run your tests.

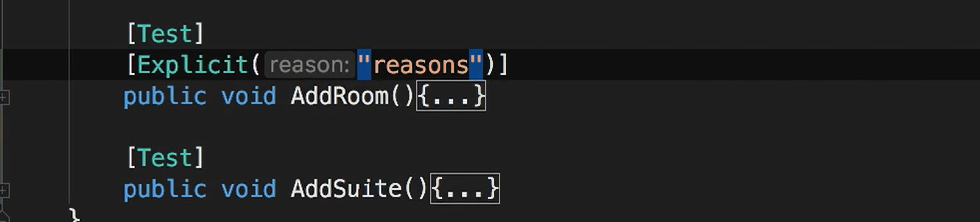

To do this, we use the [Explicit] attribute.

We add this to a test, and now when all the tests in the fixture are run, this test will be skipped. And I can still go to that test and execute it on demand.

This attribute can be applied to tests or test fixtures and has an optional parameter for providing a reason.

If we run all the tests now, you'll see the test that we marked explicit is ignored, but if I go and specifically run that test, you can see that it's executed and still passes.
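A minimal sketch of the [Explicit] attribute with and without a reason, assuming NUnit 3 (the test names and reason text are hypothetical):

```csharp
using NUnit.Framework;

[TestFixture]
public class ExplicitExampleTests
{
    // Runs as part of the normal test run.
    [Test]
    public void RunsEveryTime()
    {
        Assert.That(1 + 1, Is.EqualTo(2));
    }

    // Left out of a normal run; executes only when selected directly.
    [Test]
    [Explicit]
    public void RunsOnlyOnDemand()
    {
        Assert.That(2 + 2, Is.EqualTo(4));
    }

    // Same behavior, with the optional reason supplied.
    [Test]
    [Explicit("Slow test, run manually before a release")]
    public void RunsOnlyOnDemand_WithReason()
    {
        Assert.That(3 + 3, Is.EqualTo(6));
    }
}
```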

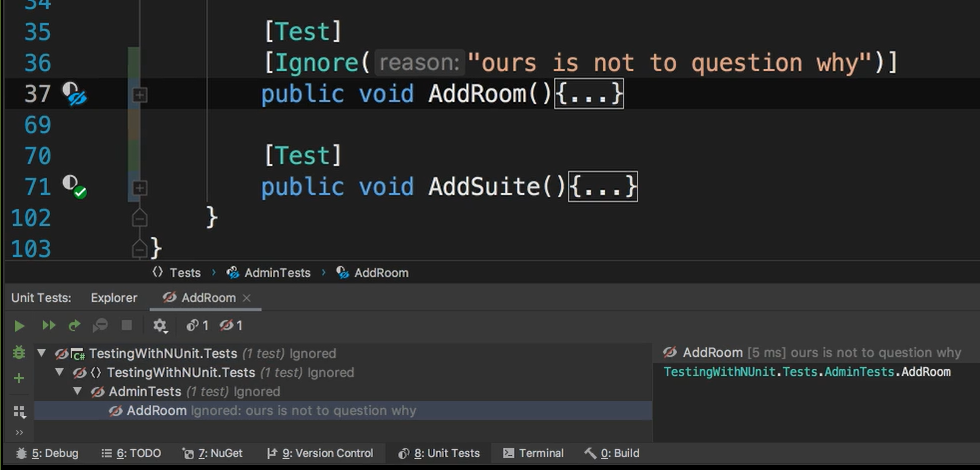

NUnit also provides the [Ignore] attribute.

To use it, we add the attribute to a test or fixture, and in NUnit 3 and above, we're required to include a reason.

Now, if we go and run these tests, a warning result will be displayed.

In addition to a reason, there's also an optional Until parameter.

And to use it you must pass a String that can be parsed into a date.

With Until, an ignored test will continue to get a warning result until the specified date has passed. After that date, the test will start executing again automatically.
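To make the two forms of [Ignore] concrete, here is a small sketch assuming NUnit 3. The reasons, the date, and the test names are placeholders chosen for this example:

```csharp
using NUnit.Framework;

[TestFixture]
public class IgnoreExampleTests
{
    // Ignored indefinitely; NUnit 3 requires the reason string.
    [Test]
    [Ignore("Waiting on a fix for a known defect")]
    public void IgnoredWithReason()
    {
        Assert.That(1 + 1, Is.EqualTo(2));
    }

    // Ignored only until the given date; Until takes a string
    // that NUnit parses into a date.
    [Test]
    [Ignore("Feature ships next quarter", Until = "2030-01-31")]
    public void IgnoredUntilDate()
    {
        Assert.That(2 + 2, Is.EqualTo(4));
    }
}
```

Setting Until is a handy way to keep an ignored test from being forgotten, since it comes back into the run on its own.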