Transcripted Summary

Data-driven tests are a nice way to add variation to your test suite, while at the same time, reducing the boilerplate or repetitive code for cases when you need tests that perform the same actions, just with different datasets.

They can also provide a nice option for your less technical testers to contribute to automation by allowing them to add different permutations of data and not necessarily having to know how to code.

NUnit has a wide variety of different options for making your tests data-driven; the first we'll take a look at is parameterizing your tests.

# Parameterizing Tests

To enable our test to be data-driven, the first step is to create a test method that accepts parameters. The parameters to the test will serve as a means for NUnit to funnel new or different data into your test.

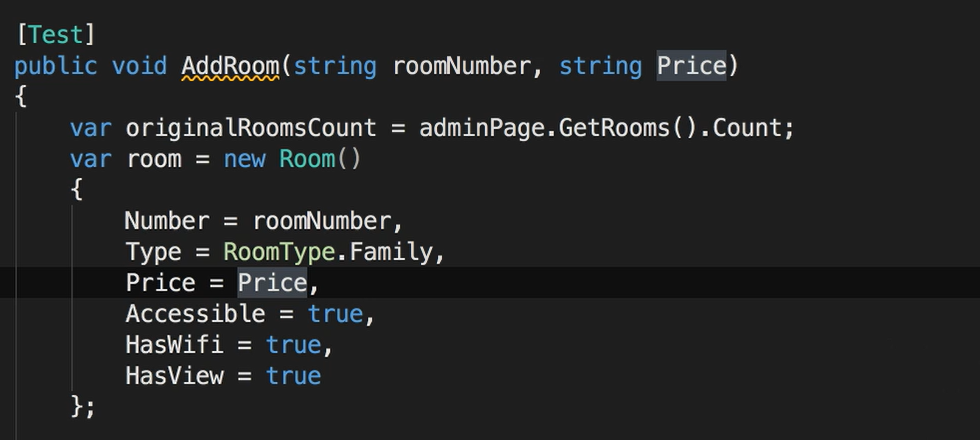

Let's take a look at our AddRoom test. I'll add a string parameter for “roomNumber” and another for “price”.

I've updated the test to use the new parameters; now we just need to tell NUnit what data to use.

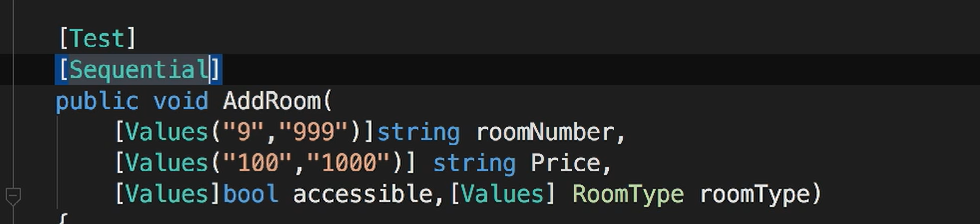

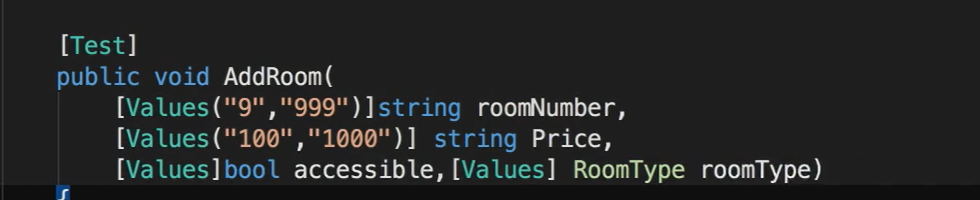

The first way we can pass data into our test is using the [Values] attribute.

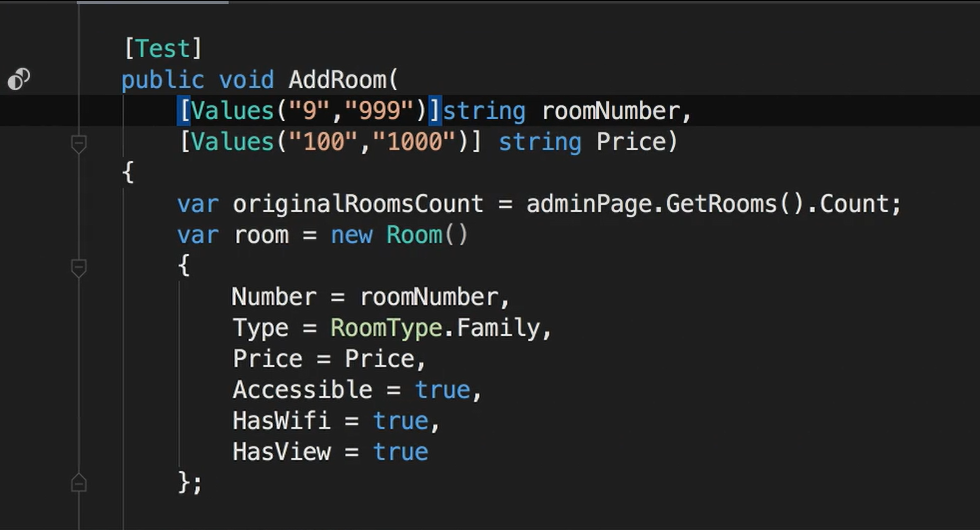

Unlike other attributes that we've used so far, that have gone on the test fixture class or on the test method, the [Values] attribute is specified for each parameter of the test.

First, we'll add the [Values] attribute to the room number parameter and add two values: 9 and 999. Then we'll add a [Values] attribute for our price parameter and include two values: 100 and 1,000.

By default, NUnit creates a test for every combination of those values. We can see this in the Test Explorer, where four tests have been created.
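As a rough sketch of what that looks like in code, here is a cut-down version of the test. The fixture name and the placeholder assertions are my own assumptions; the real AddRoom test would drive the application instead.

```csharp
using NUnit.Framework;

[TestFixture]
public class RoomTests
{
    // NUnit generates one test per combination:
    // 2 room numbers x 2 prices = 4 tests.
    [Test]
    public void AddRoom(
        [Values("9", "999")] string roomNumber,
        [Values("100", "1000")] string price)
    {
        // Placeholder assertions (assumption): the real test would
        // create the room and verify it through the application.
        Assert.That(roomNumber, Is.Not.Empty);
        Assert.That(price, Is.Not.Empty);
    }
}
```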

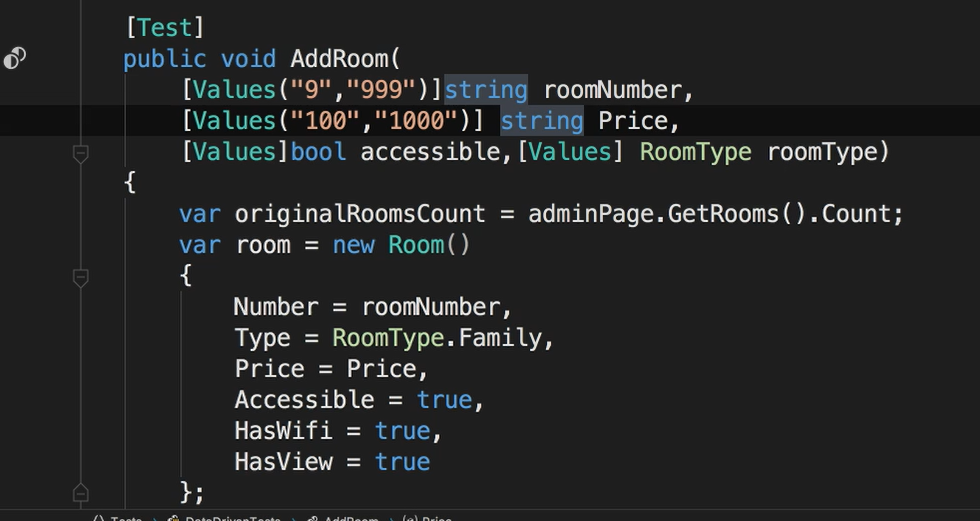

Let's also add parameters for accessible and for room type.

The [Values] attribute has built-in support for enum and boolean parameters. So rather than having to enter “true” and “false” for booleans, or list each value of an enum, you can just add [Values] without specifying any arguments and NUnit will automatically include all of the values.

Since, by default, NUnit uses a combinatorial approach and generates a test for all combinations, in this example we'll have 40 tests.

That's the 2 room number options times the 2 price options times the 2 boolean options, then times 5 for the different room type options. So, what starts off well-intentioned can result in explosive growth in your number of tests.
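To make the arithmetic concrete, here is a minimal sketch of the four-parameter version. The RoomType members are hypothetical; all that matters for the count is that the enum has five of them.

```csharp
using System;
using NUnit.Framework;

// Hypothetical enum with five members, matching the five room types counted above.
public enum RoomType { Single, Twin, Double, Family, Suite }

[TestFixture]
public class RoomCombinationTests
{
    // 2 room numbers x 2 prices x 2 booleans x 5 room types = 40 generated tests.
    [Test]
    public void AddRoom(
        [Values("9", "999")] string roomNumber,
        [Values("100", "1000")] string price,
        [Values] bool accessible,    // expands to true and false
        [Values] RoomType roomType)  // expands to every enum member
    {
        // Placeholder assertion (assumption) standing in for the real test body.
        Assert.That(Enum.IsDefined(typeof(RoomType), roomType), Is.True);
    }
}
```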

To help with this, NUnit provides two other attributes that give you some additional controls over how the values are combined to generate tests.

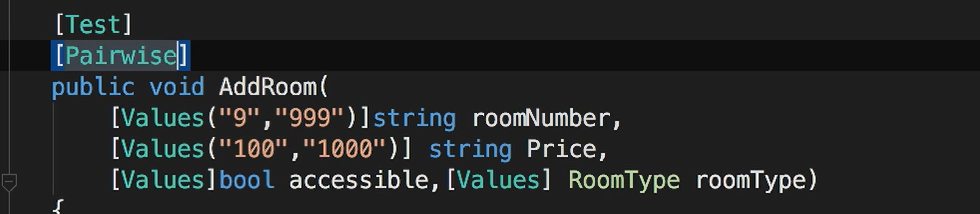

The first is the [Pairwise] attribute.

Rather than generating tests for all the possible combinations of parameter values, this generates only enough tests to cover every unique pair of values. When we add the [Pairwise] attribute to our test method, the number of tests shrinks from 40 to 10.
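In code, the only change is the extra attribute on the method. This is a sketch using the same hypothetical RoomType enum; the exact cases NUnit picks are up to its pairwise algorithm, so I wouldn't rely on any particular combination being present.

```csharp
using NUnit.Framework;

// Hypothetical enum, used only for illustration.
public enum RoomType { Single, Twin, Double, Family, Suite }

[TestFixture]
public class RoomPairwiseTests
{
    // [Pairwise] asks NUnit to cover every pair of values across any
    // two parameters, instead of every full combination.
    [Test, Pairwise]
    public void AddRoom(
        [Values("9", "999")] string roomNumber,
        [Values("100", "1000")] string price,
        [Values] bool accessible,
        [Values] RoomType roomType)
    {
        // Placeholder assertion (assumption).
        Assert.That(roomNumber, Is.Not.Empty);
    }
}
```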

The other option is the [Sequential] attribute.

This will cause NUnit to use the order of data values to create test cases.

So, for example, the first test will use the first value in each of the values attributes.

Also, for this to work correctly, you probably want to have equal numbers of values for each parameter, otherwise you may end up with invalid test cases.
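Here is a small sketch of [Sequential] with two values per parameter, so the lists line up. The fixture name and assertion are assumptions for illustration.

```csharp
using NUnit.Framework;

[TestFixture]
public class RoomSequentialTests
{
    // Values are matched up by position, so this generates two tests:
    //   AddRoom("9", "100") and AddRoom("999", "1000")
    [Test, Sequential]
    public void AddRoom(
        [Values("9", "999")] string roomNumber,
        [Values("100", "1000")] string price)
    {
        // Placeholder assertion (assumption).
        Assert.That(price, Does.StartWith("100"));
    }
}
```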

In addition to the [Values] attribute, there are two other attributes that you can use to generate values for numeric parameters.

The [Random] attribute lets you specify a minimum and maximum value, and NUnit generates a random number in that range when the test is executed. There's also the [Range] attribute to specify a series of numbers to be used for an individual parameter.
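A quick sketch of both attributes on numeric parameters. The test names and the bounds are made up for the example, and the third argument to [Random] is the number of values to generate.

```csharp
using NUnit.Framework;

[TestFixture]
public class NumericParameterTests
{
    // Generates 3 tests, each with a random int between the given bounds.
    [Test]
    public void PriceIsWithinBounds([Random(1, 1000, 3)] int price)
    {
        Assert.That(price, Is.InRange(1, 1000));
    }

    // Generates 5 tests, one each for 1, 2, 3, 4 and 5.
    [Test]
    public void FloorIsPositive([Range(1, 5)] int floor)
    {
        Assert.That(floor, Is.GreaterThan(0));
    }
}
```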

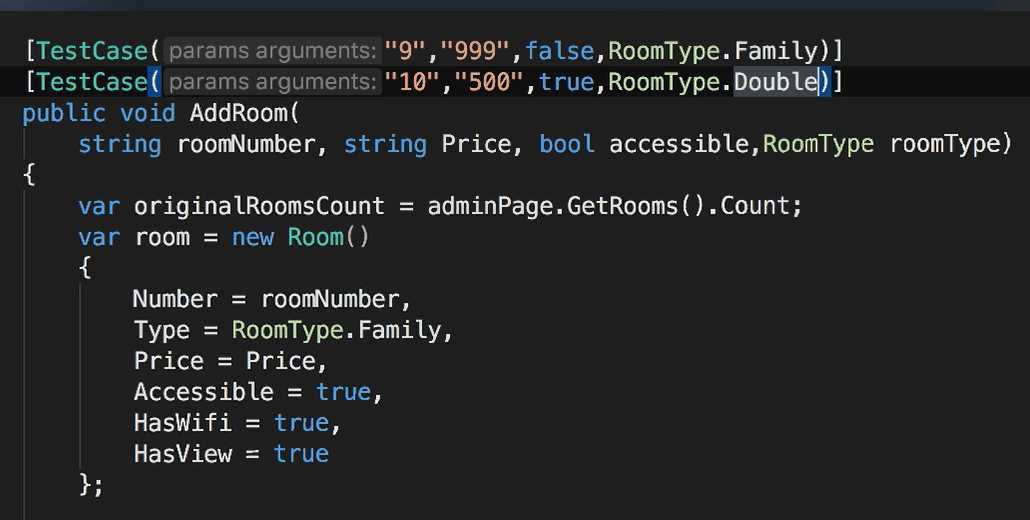

Another way to parameterize tests is to use the [TestCase] attribute.

To use this, we'll replace the [Test] attribute with the [TestCase] attribute.

Instead of using the [Values] attribute for each parameter of the test, we pass the values we want for this specific test case as arguments to the [TestCase] attribute, in the same order as the parameters of the test method.

Then, for each permutation of this test that you want to run, you'll add another [TestCase] attribute and include the parameter values designed for that test.

This removes some of the “magic” that using the [Values] attribute could introduce.

Each test permutation is clearly listed above the test method signature, so there's no question what inputs will be passed to the test.

The [TestCase] attribute also has optional named parameters for things like the test name and description, and this allows each test case to have a clear intent.
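Here is roughly how that reads, with one [TestCase] per permutation. The test names and descriptions are invented for the example.

```csharp
using NUnit.Framework;

[TestFixture]
public class RoomTestCaseTests
{
    // Arguments are listed in the same order as the method's parameters.
    // TestName and Description are optional named parameters.
    [TestCase("9", "100",
        TestName = "AddRoom_SingleDigitRoom_LowPrice",
        Description = "Smallest room number with a low price")]
    [TestCase("999", "1000",
        TestName = "AddRoom_ThreeDigitRoom_HighPrice",
        Description = "Large room number with a high price")]
    public void AddRoom(string roomNumber, string price)
    {
        // Placeholder assertions (assumption).
        Assert.That(roomNumber, Is.Not.Empty);
        Assert.That(price, Is.Not.Empty);
    }
}
```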

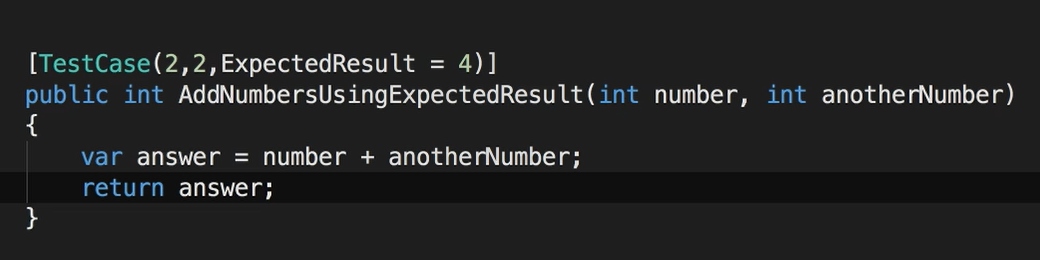

There is also the ExpectedResult parameter, which you can use to specify the expected value of a test.

To use this, your test method needs to return a value instead of void.

Then, if we add the ExpectedResult parameter to the test case, NUnit will automatically compare the return value of the test method to the value specified in the [TestCase] attribute.

When you use this, the test itself is not going to contain an Assert statement, so this approach may rub some people the wrong way.

I'm sure there are some circumstances where this is a good fit, but I prefer not to have an implicit assertion inside of my test.
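For reference, a minimal sketch of ExpectedResult. ParsePrice is a hypothetical test, not one from the course project; notice there is no Assert in the body because NUnit checks the return value.

```csharp
using NUnit.Framework;

[TestFixture]
public class PriceParsingTests
{
    // The method returns a value instead of void, and NUnit compares
    // that return value with ExpectedResult for each test case.
    [TestCase("100", ExpectedResult = 100)]
    [TestCase("1000", ExpectedResult = 1000)]
    public int ParsePrice(string price)
    {
        return int.Parse(price);
    }
}
```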

# Sharing Data

So far, we've supplied data individually to each test and this can get a little repetitive.

For example, in this case, we have a string parameter for the room price and perhaps, across our application, we have a variety of currency formats that we need to support.

Or maybe you want to use values from something like the Big List of Naughty Strings or Elisabeth Hendrickson's test heuristics cheat sheet. Rather than copy and paste that into every test, we want to share it in a central common place to be used across tests.

For sharing data for a specific parameter of your test, you can use the [ValueSource] Attribute.

ValueSource works very similarly to the Values attribute, except that instead of passing literal values, we'll provide, as a string, the name of the source that will supply the data.

The data source can be a static field, property or method that takes no parameters.

The source also needs to return an IEnumerable of the type that the parameter expects.

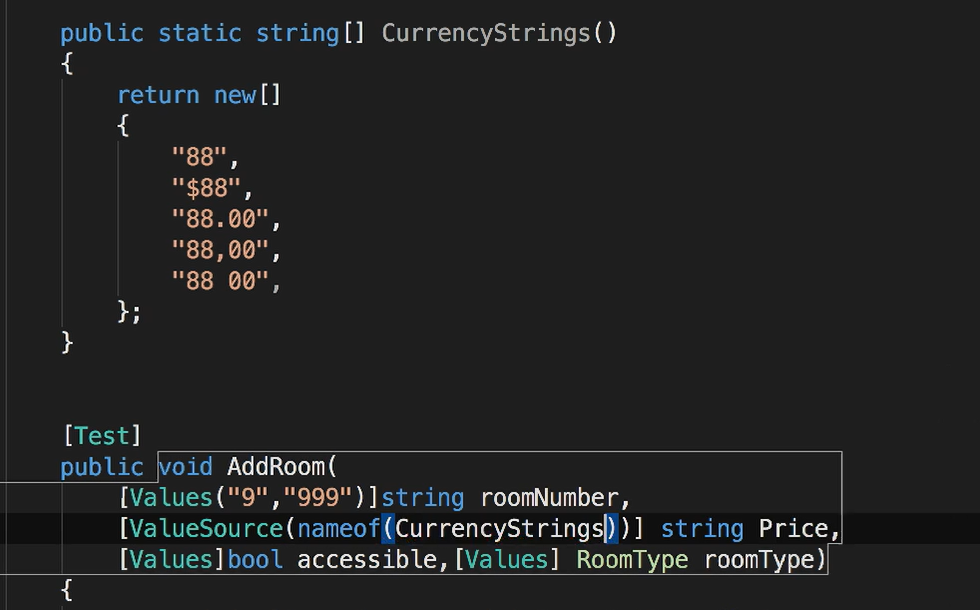

To see this, I'll add a static method to our test class that returns a string array of some different currency formats. Let's call the method CurrencyStrings.

To use it, we'll add the [ValueSource] attribute to our price parameter and, rather than type the name as a string literal, we'll use C#'s nameof expression, which returns the method's name as a string.

What's nice about using nameof is that now our ValueSource's method name is no longer a magic string. So, if the source method name changes, our editor or the compiler will let us know that the names don't match.
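A sketch of the source method and the attribute together. The sample currency strings are my own assumption, and I've used a single-parameter test to keep the example short.

```csharp
using NUnit.Framework;

[TestFixture]
public class RoomPriceTests
{
    // Static source method with no parameters, returning values of the
    // same type as the test parameter. Sample values are hypothetical.
    public static string[] CurrencyStrings()
    {
        return new[] { "100", "100.00", "$100", "1,000" };
    }

    // nameof(CurrencyStrings) evaluates to the string "CurrencyStrings",
    // so renaming the method without updating this line fails to compile.
    [Test]
    public void PriceIsAccepted(
        [ValueSource(nameof(CurrencyStrings))] string price)
    {
        // Placeholder assertion (assumption).
        Assert.That(price, Is.Not.Empty);
    }
}
```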

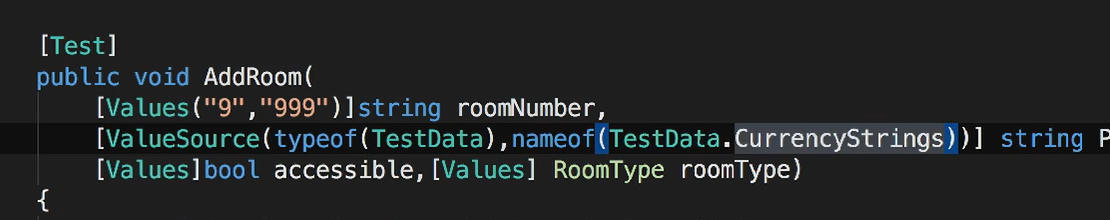

If you want to centralize your data sources into separate classes, you can also do that.

I've created a new class called TestData and moved the CurrencyStrings method into it.

To use this method, we update the ValueSource so that the first argument is the type that contains the source method.

To do that, we'll enter typeof with our class name and then, for the second argument, we'll use nameof with the method's name.
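Put together, the two-argument form looks something like this. The sample values and the test name are assumptions.

```csharp
using NUnit.Framework;

// Central place for shared test data.
public class TestData
{
    // Sample values are hypothetical.
    public static string[] CurrencyStrings()
    {
        return new[] { "100", "100.00", "$100", "1,000" };
    }
}

[TestFixture]
public class RoomSharedDataTests
{
    // First argument: the type that holds the source.
    // Second argument: the name of the source member.
    [Test]
    public void PriceIsAccepted(
        [ValueSource(typeof(TestData), nameof(TestData.CurrencyStrings))] string price)
    {
        // Placeholder assertion (assumption).
        Assert.That(price, Is.Not.Empty);
    }
}
```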

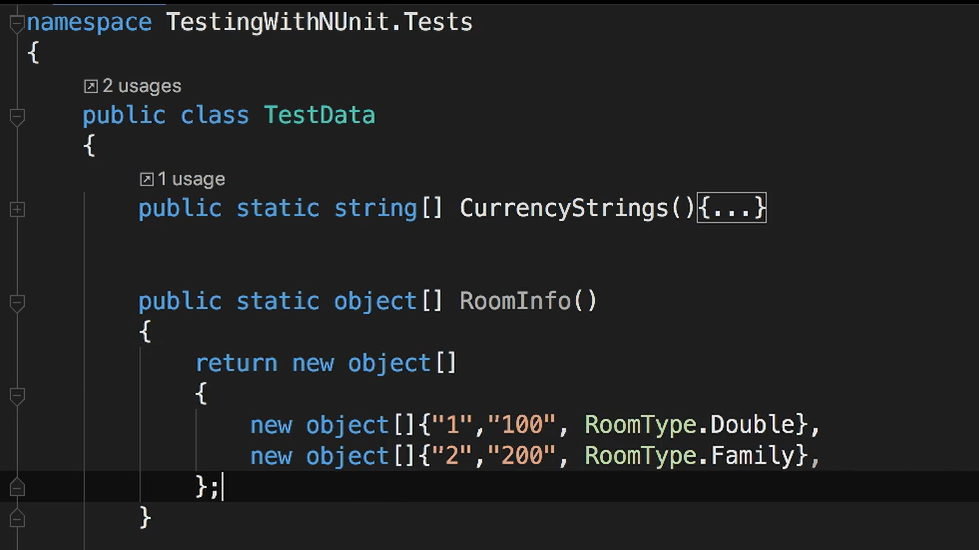

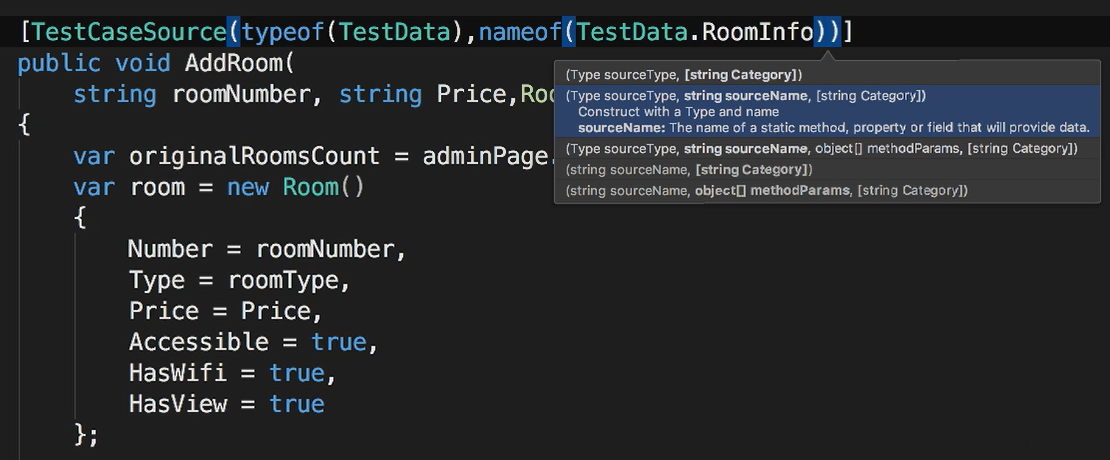

The [TestCaseSource] attribute is meant to provide all of the parameters for a test, and it works similarly to ValueSource: the data source can be a static field, property or method that returns an IEnumerable type.

The values returned need to be compatible with the parameters of the test method signature.

I've updated the test to accept 3 parameters: room number, price and room type.

To hold our test data, let's add another method in the TestData class called RoomInfo.

Since our test method signature accepts two Strings and a room type, we'll use an object array to hold the different types of values for each test case.

To use it, we'll go back to our test and add the [TestCaseSource] attribute to our test method, and in it we'll include our type and method name.

TestCaseSource can also use methods that accept parameters and if you need to parameterize your test case source values, then you need to pass those arguments as an object array to the TestCaseSource.
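Here is a sketch of both forms: a plain source and one that takes a parameter. RoomsOfType, the RoomType members and the sample rows are hypothetical names I've added for illustration.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// Hypothetical enum, used only for illustration.
public enum RoomType { Single, Twin, Double, Family, Suite }

public class TestData
{
    // Each inner object array is one test case: room number, price, room type.
    public static object[] RoomInfo()
    {
        return new object[]
        {
            new object[] { "9", "100", RoomType.Single },
            new object[] { "999", "1000", RoomType.Suite },
        };
    }

    // A source method that accepts a parameter (hypothetical example).
    public static IEnumerable<object[]> RoomsOfType(RoomType roomType)
    {
        yield return new object[] { "9", "100", roomType };
        yield return new object[] { "999", "1000", roomType };
    }
}

[TestFixture]
public class RoomSourceTests
{
    [TestCaseSource(typeof(TestData), nameof(TestData.RoomInfo))]
    public void AddRoom(string roomNumber, string price, RoomType roomType)
    {
        // Placeholder assertion (assumption).
        Assert.That(roomNumber, Is.Not.Empty);
    }

    // The third argument is an object array of arguments for the source method.
    [TestCaseSource(typeof(TestData), nameof(TestData.RoomsOfType),
        new object[] { RoomType.Family })]
    public void AddFamilyRoom(string roomNumber, string price, RoomType roomType)
    {
        Assert.That(roomType, Is.EqualTo(RoomType.Family));
    }
}
```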

Another option we can use to return data from a test case source is TestCaseData.

This is actually my favorite way to create data-driven tests because it adds so much flexibility to how you can use data to drive your tests.

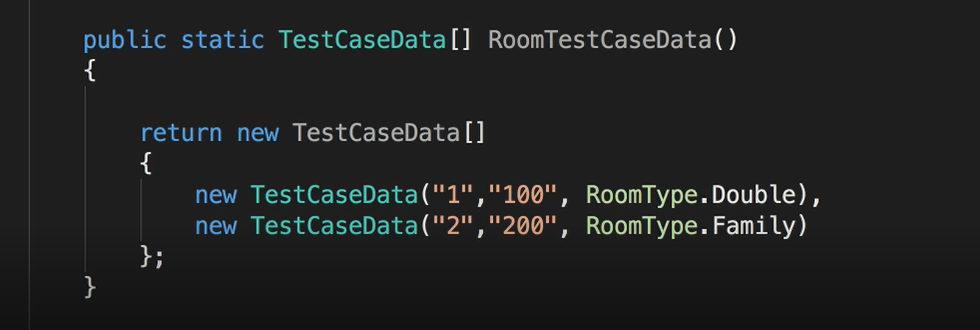

Inside the TestData class, I've added a new method called RoomTestCaseData. This is a copy and paste of the RoomInfo method, but instead of an object array, I'm using an array of TestCaseData.

The constructor of TestCaseData will accept the arguments that we need to pass to our tests, but in addition to passing arguments, TestCaseData has methods that allow you to add attributes on that test case.

We can give each test case a name and a description, and test cases can have their own categories assigned to them or be flagged as explicit or ignored. This group of tests can be fully explained, grouped, executed, and managed outside of the actual test code.
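As a sketch, the TestCaseData version could look like this. The names, descriptions, categories and reasons are all invented for the example.

```csharp
using NUnit.Framework;

// Hypothetical enum, used only for illustration.
public enum RoomType { Single, Twin, Double, Family, Suite }

public class TestData
{
    public static TestCaseData[] RoomTestCaseData()
    {
        return new[]
        {
            // Constructor arguments are passed to the test method;
            // the fluent calls attach metadata to that one test case.
            new TestCaseData("9", "100", RoomType.Single)
                .SetName("AddRoom_SingleRoom")
                .SetDescription("Adds a low-priced single room")
                .SetCategory("Smoke"),
            new TestCaseData("999", "1000", RoomType.Suite)
                .SetName("AddRoom_Suite")
                .SetCategory("Regression")
                .Explicit("Only run on request"),
            new TestCaseData("0", "-1", RoomType.Twin)
                .SetName("AddRoom_NegativePrice")
                .Ignore("Hypothetical reason: waiting on a bug fix"),
        };
    }
}

[TestFixture]
public class RoomTestCaseDataTests
{
    [TestCaseSource(typeof(TestData), nameof(TestData.RoomTestCaseData))]
    public void AddRoom(string roomNumber, string price, RoomType roomType)
    {
        // Placeholder assertion (assumption).
        Assert.That(roomNumber, Is.Not.Empty);
    }
}
```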

So far, we've been passing a series of parameters that are being used to set different properties on the room object.

Rather than do that, wouldn't it be nicer and cleaner to just pass the instance of the room object itself, instead of assembling it inside of the test?

Instead of hard-coding values into our test data, we can store it inside of a JSON, XML or CSV file or even in a database.

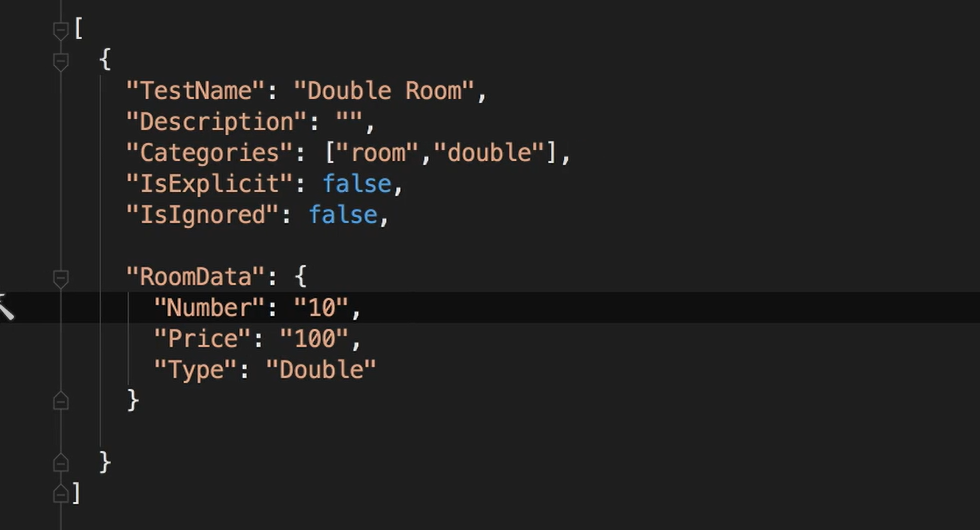

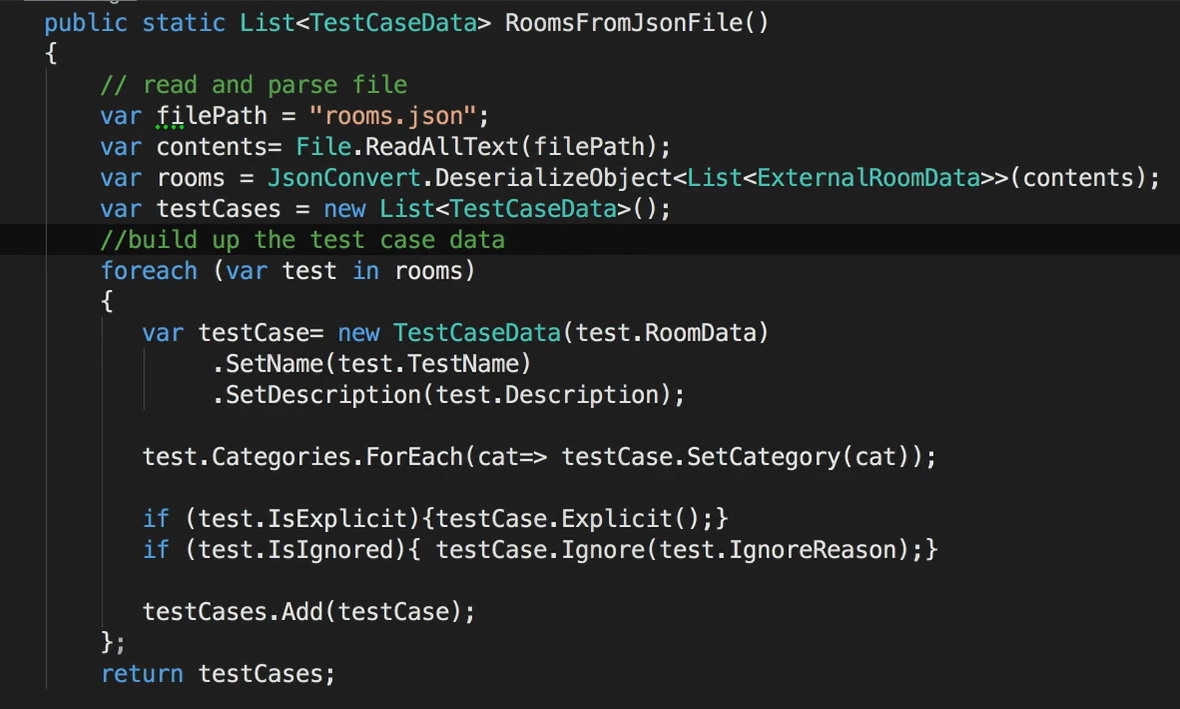

In this example, I'm reading a JSON file that contains room data and properties of a test, including its name, an array of categories and flags for explicit and ignore.

Then, in our TestCaseSource method, we'll read the JSON file and build up a List of TestCaseData.

This will include the room parameter for the test as well as setting properties of the test defined in the JSON file.

By combining this external data with NUnit's test case data, we're able to dynamically build and manage the test suite from any external source.
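One way this could be put together is sketched below, using System.Text.Json. The file name rooms.json, its shape, and the Room and RoomTestCase classes are all assumptions on my part, so treat this as an outline rather than the exact code from the video.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.Json;
using NUnit.Framework;

// Hypothetical model classes mirroring an assumed JSON shape such as:
// [ { "name": "AddRoom_Single", "categories": ["Smoke"],
//     "explicit": false, "ignore": false,
//     "room": { "roomNumber": "9", "price": "100" } } ]
public class Room
{
    public string RoomNumber { get; set; }
    public string Price { get; set; }
}

public class RoomTestCase
{
    public string Name { get; set; }
    public string[] Categories { get; set; }
    public bool Explicit { get; set; }
    public bool Ignore { get; set; }
    public Room Room { get; set; }
}

public class JsonTestData
{
    public static IEnumerable<TestCaseData> RoomsFromJson()
    {
        // Assumes rooms.json is copied to the test output directory.
        var path = Path.Combine(AppContext.BaseDirectory, "rooms.json");
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        var cases = JsonSerializer.Deserialize<List<RoomTestCase>>(
            File.ReadAllText(path), options);

        foreach (var testCase in cases)
        {
            // The Room instance is the single argument passed to the test.
            var data = new TestCaseData(testCase.Room).SetName(testCase.Name);

            foreach (var category in testCase.Categories ?? Array.Empty<string>())
            {
                data.SetCategory(category);
            }
            if (testCase.Explicit) data.Explicit();
            if (testCase.Ignore) data.Ignore("Flagged as ignored in rooms.json");

            yield return data;
        }
    }
}

[TestFixture]
public class RoomJsonTests
{
    [TestCaseSource(typeof(JsonTestData), nameof(JsonTestData.RoomsFromJson))]
    public void AddRoom(Room room)
    {
        // Placeholder assertion (assumption).
        Assert.That(room.RoomNumber, Is.Not.Empty);
    }
}
```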

# Parameterizing Fixtures

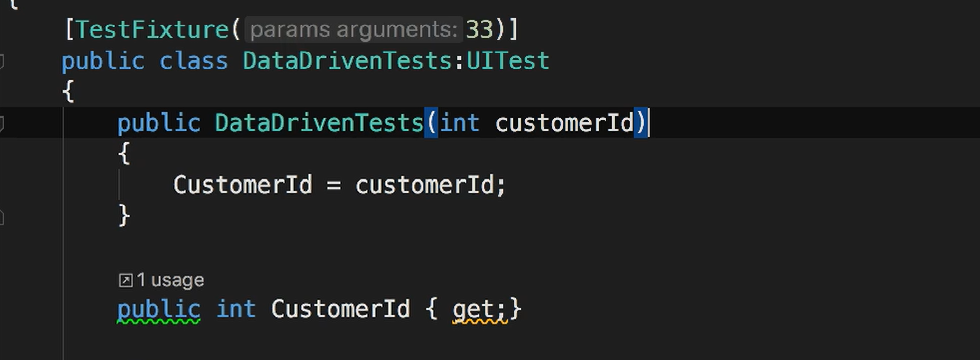

Test fixtures can also be parameterized, and this allows your test setup and tear down code to be dynamic as well as your test.

To parameterize a fixture, we need to do two things:

First, we add a constructor to our class that will accept the parameters that we want to provide.

The second step is to add a [TestFixture] attribute and, just like we did with [TestCase], specify the values that we want to pass to the constructor when the fixture is loaded.

Test fixtures can also use TestFixtureSource and TestFixtureData just like we do with TestCaseSource and TestCaseData.

You can also use generic classes as your test fixtures and then vary the type of the class by specifying the type to be used as an argument to the [TestFixture] attribute.

This may not be something you commonly need to use, but it can come in handy for unit testing different types.
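Both ideas can be sketched in a few lines. The user roles and the collection types here are hypothetical choices for the example.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// Each [TestFixture] attribute creates one instance of the fixture,
// passing its arguments to the constructor.
[TestFixture("admin")]
[TestFixture("guest")]
public class RoomFixtureTests
{
    private readonly string _userRole;

    public RoomFixtureTests(string userRole)
    {
        _userRole = userRole;
    }

    [SetUp]
    public void SetUp()
    {
        // Setup can now vary by fixture argument,
        // for example logging in as _userRole (assumption).
    }

    [Test]
    public void RoleIsProvided()
    {
        Assert.That(_userRole, Is.Not.Empty);
    }
}

// Generic fixture: the typeof argument supplies the type parameter.
[TestFixture(typeof(List<int>))]
[TestFixture(typeof(HashSet<int>))]
public class CollectionTests<TCollection>
    where TCollection : ICollection<int>, new()
{
    [Test]
    public void NewCollectionIsEmpty()
    {
        var collection = new TCollection();
        Assert.That(collection.Count, Is.EqualTo(0));
    }
}
```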

# Theories

NUnit has another concept called theories.

Per the NUnit documentation, a Theory is a special type of test used to verify a general statement about the system under development.

When we create normal tests, particularly data-driven tests, we are providing specific examples of input and output.

But a Theory isn't meant for specific data values. It's meant to prove that, given a general set of inputs, all of the assertions will pass, assuming that the arguments satisfy certain assumptions.

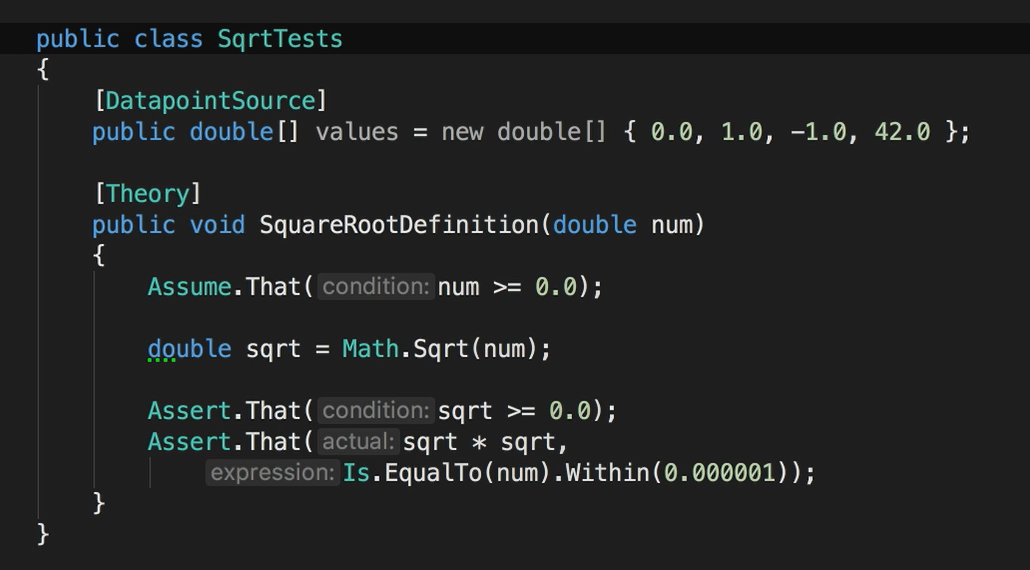

To create a Theory, instead of the [Test] attribute, we use the [Theory] attribute.

The test method is required to accept parameters to verify the theory.

Data for the Theory is meant to be provided by a method, property or field inside the test fixture marked with the [DatapointSource] attribute.

When the Theory is run, NUnit automatically assembles all data points that match the types required for the test parameters and then supplies them to the test.

This is a pretty specialized use case. Notice how there's only the [Theory] attribute. There's no other attribute or visible code that indicates that this theory should use this [DatapointSource].

NUnit is going to match these up because the theory parameter and the datapoint source both use doubles.
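A small sketch along the lines of the square root example in the NUnit documentation shows the pieces together. The data values are arbitrary, and Assume.That is how the theory states the assumption its arguments must satisfy.

```csharp
using System;
using NUnit.Framework;

[TestFixture]
public class SquareRootTheory
{
    // NUnit matches this source to the theory by type (double).
    [DatapointSource]
    public double[] Values = { 0.0, 1.0, -1.0, 42.0 };

    [Theory]
    public void SquareRootDefinition(double num)
    {
        // Datapoints that fail the assumption (here, -1.0) are not
        // counted as failures.
        Assume.That(num >= 0.0);

        double sqrt = Math.Sqrt(num);

        Assert.That(sqrt, Is.GreaterThanOrEqualTo(0.0));
        Assert.That(sqrt * sqrt, Is.EqualTo(num).Within(0.000001));
    }
}
```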