Transcripted Summary

In this chapter, we'll explore how to run tests in parallel.

Being able to run tests in parallel is essential, especially when running tests in the pipeline.

Remember, while discussing the Continuous Testing challenges, we saw how testing can become a bottleneck because tests, especially end-to-end tests, can take a long time to execute.

Running tests in parallel is one of the ways to keep continuous testing from becoming a bottleneck in the pipeline.

The most obvious candidate for parallel testing is running tests with multiple configurations.

That is - when we need to run the same suite of tests with different configurations, such as different browsers, different viewports, and so on.

Let's see how we can make use of parallel execution to speed up tests that need to run with different configurations.

Say we have a test suite that we would like to run on more than one browser - say, Chrome and Firefox.

Now, a naive approach would be to duplicate this task and set the browser to Chrome in one and to Firefox in the other.

But to save on execution time, we should run our tests on both browsers in parallel.

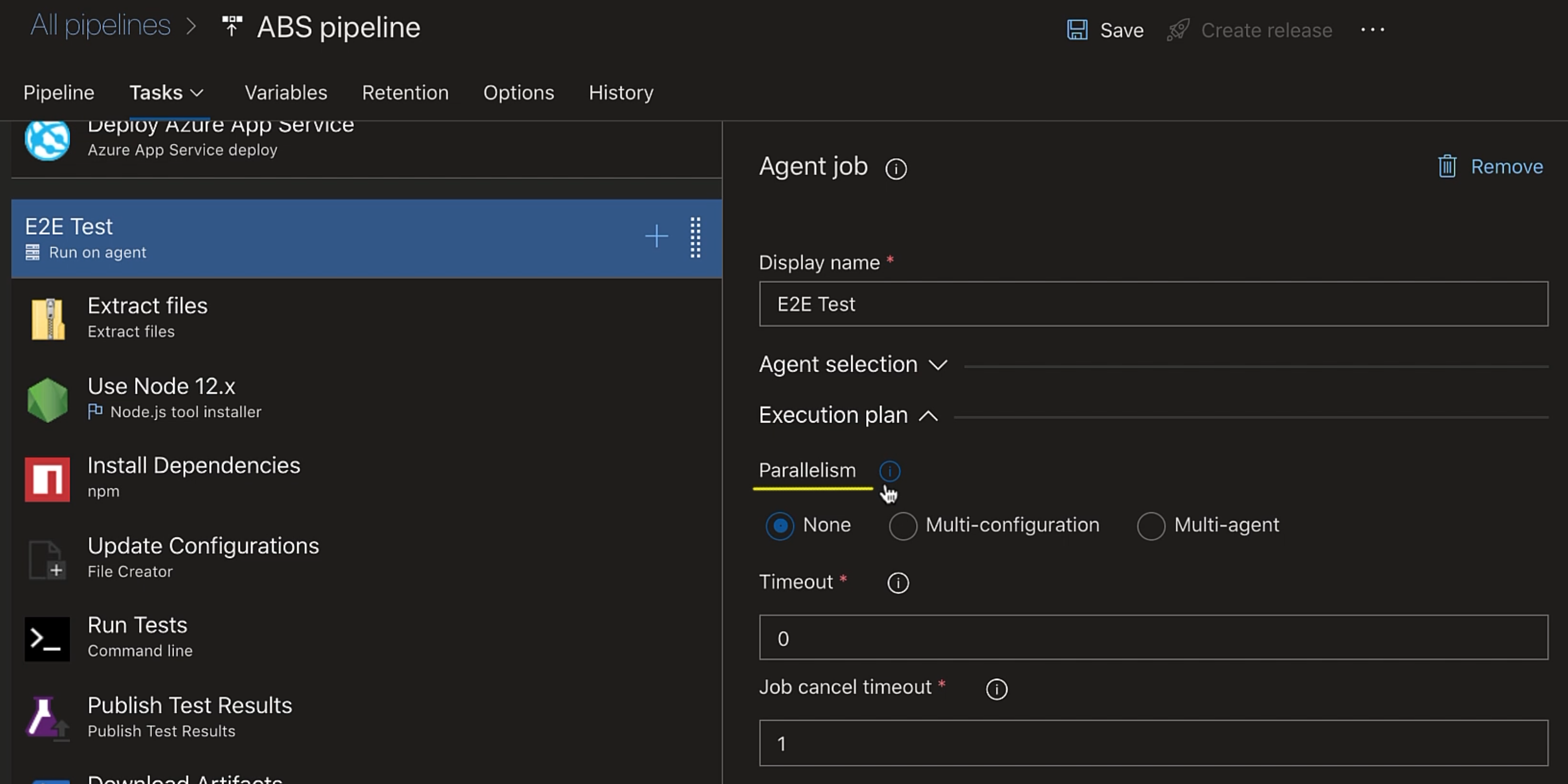
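To make the time saving concrete, here is a minimal sketch that compares running a suite on two browsers one after the other with running both at once. The `run_suite` function is a hypothetical stand-in that just sleeps; it is not the real test runner from this course, and the pipeline itself uses separate agents rather than threads.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_suite(browser: str, seconds: float = 0.2) -> str:
    """Hypothetical stand-in for a suite run: sleeps instead of testing."""
    time.sleep(seconds)
    return f"{browser}: passed"


def run_sequential(browsers):
    # One browser after the other: total time is the sum of both runs.
    return [run_suite(browser) for browser in browsers]


def run_parallel(browsers):
    # One worker per browser: total time is roughly the slowest run.
    with ThreadPoolExecutor(max_workers=len(browsers)) as pool:
        return list(pool.map(run_suite, browsers))


def timed(fn, *args):
    """Return the function's result together with the elapsed seconds."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    browsers = ["chrome", "firefox"]
    _, sequential_seconds = timed(run_sequential, browsers)
    _, parallel_seconds = timed(run_parallel, browsers)
    print(f"sequential: {sequential_seconds:.2f}s, parallel: {parallel_seconds:.2f}s")
```

With two browsers, the parallel version should take about half as long as the sequential one, which is the effect we are after in the pipeline.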

To do that, if we go to our agent job, we have a section "Execution Plan" and under it, we have "Parallelism".

Currently it is set to none.

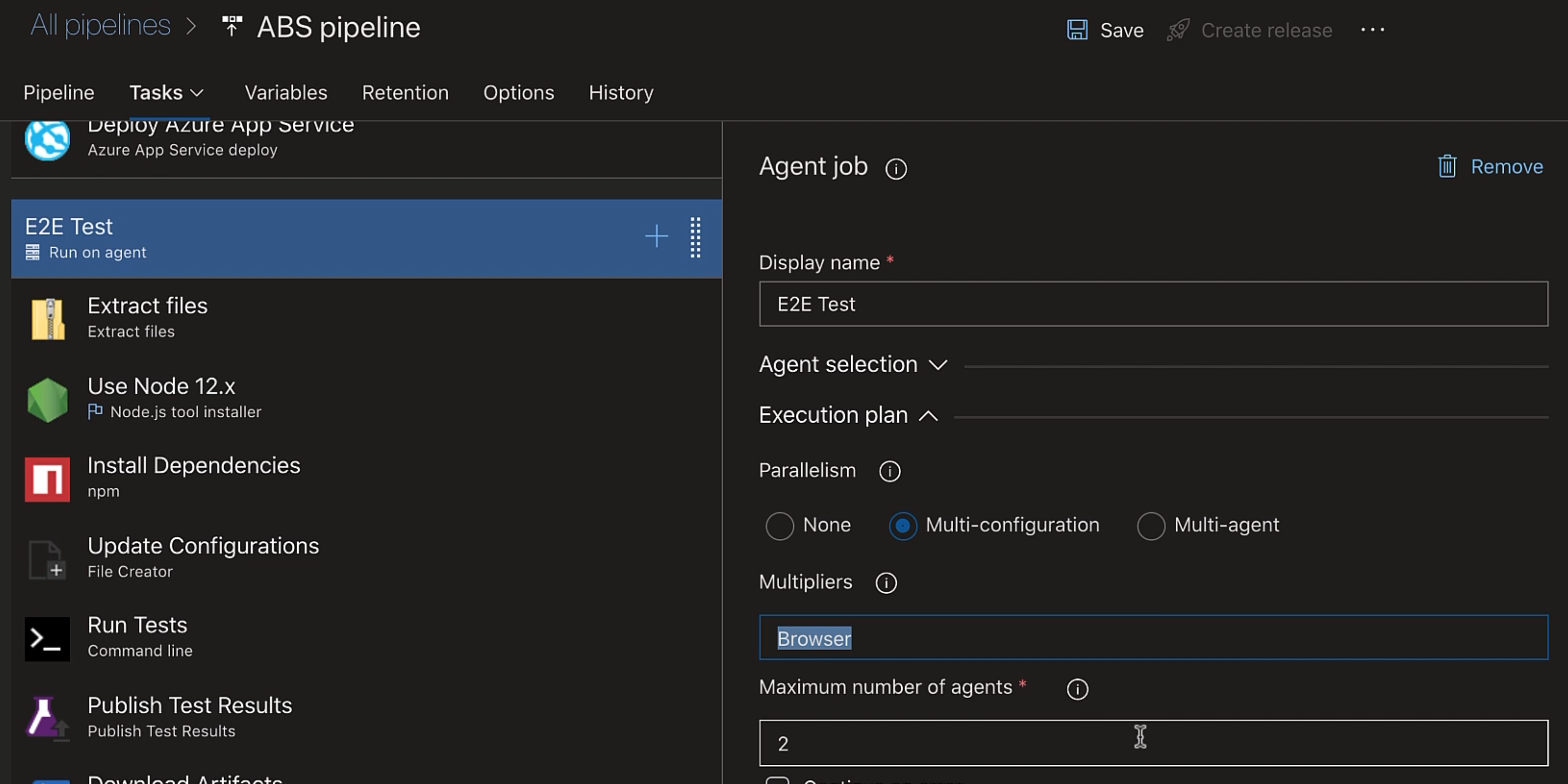

We will instead set it to "Multi-configuration", which will run the same set of tasks on multiple agents, but with a different configuration on each agent.

We need to specify a multiplier.

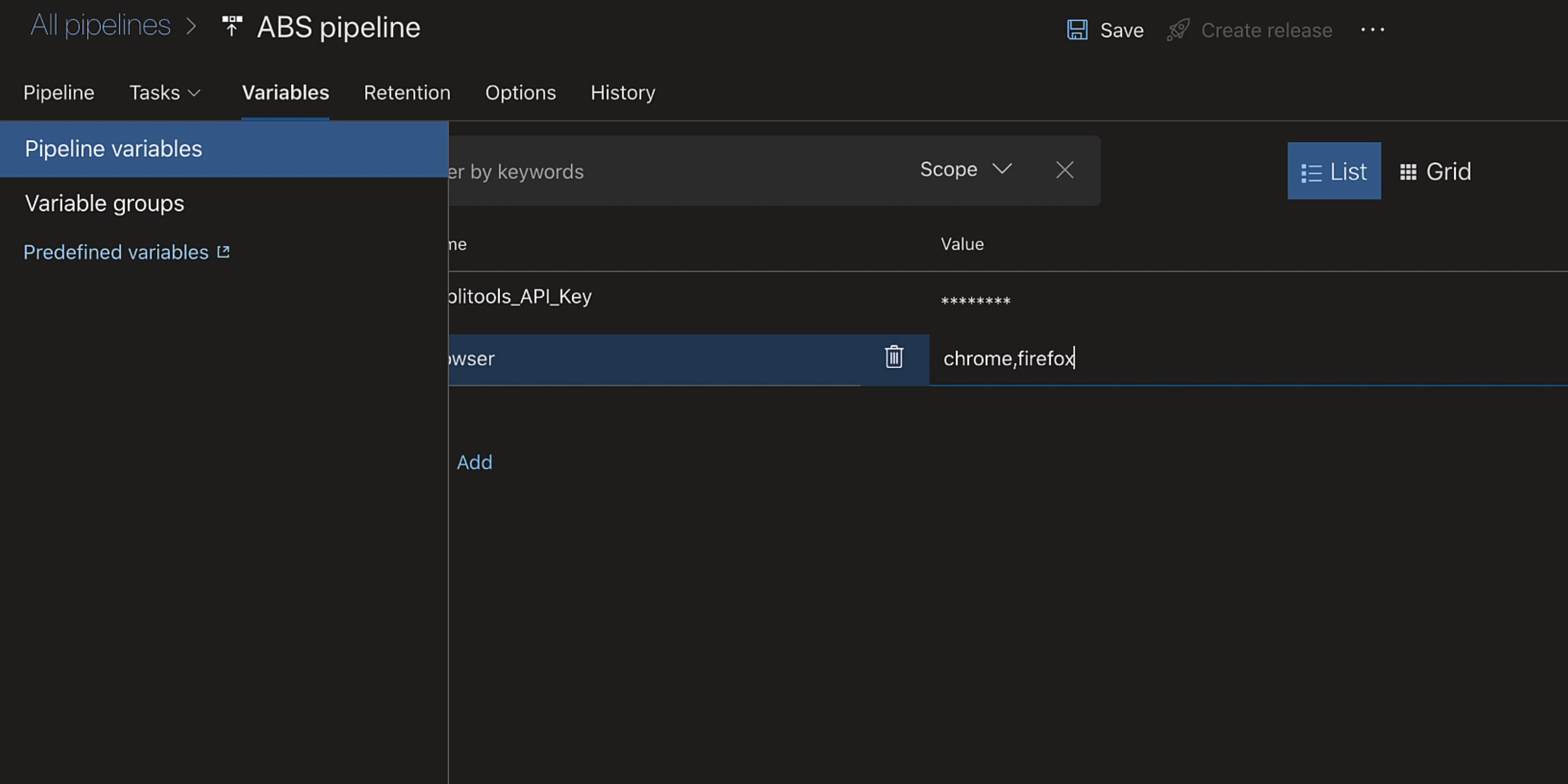

"Multipliers" is a configuration variable, so "Browser" is just a variable name.

We can have multiple configuration variables separated by commas.

We'll set the number of agents to "2", because we would like to run on two different browsers.

So we need two agents - one for each browser type.

Let's create a configuration variable "Browser".

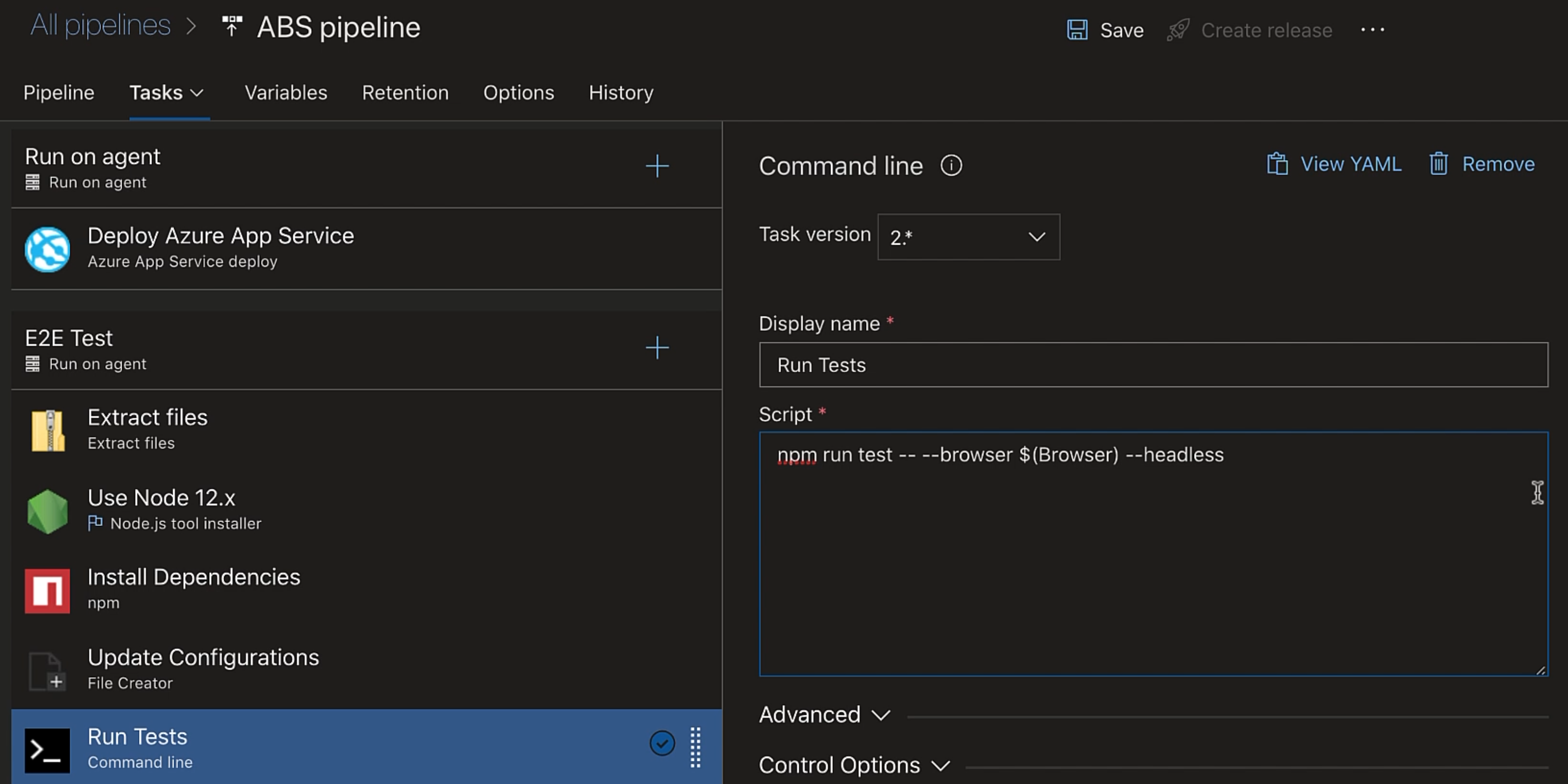
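As a rough mental model of what multi-configuration does with the multipliers, the sketch below expands them into one configuration per job. It assumes the "Browser" variable holds a comma-separated list such as "chrome,firefox" (the exact value is an assumption, as is the extra "Viewport" variable in the usage example), and that several multipliers combine as every possible pairing of their values.

```python
from __future__ import annotations

from itertools import product


def expand_multipliers(multipliers: str, variables: dict[str, str]) -> list[dict[str, str]]:
    """Return one configuration per combination of multiplier values.

    `multipliers` is a comma-separated list of variable names, and each
    variable's value is itself a comma-separated list of options.
    """
    names = [name.strip() for name in multipliers.split(",") if name.strip()]
    value_lists = [
        [value.strip() for value in variables[name].split(",") if value.strip()]
        for name in names
    ]
    # The Cartesian product gives every combination of the listed values.
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


if __name__ == "__main__":
    # Assumed value for the "Browser" variable created above.
    for config in expand_multipliers("Browser", {"Browser": "chrome,firefox"}):
        print(config)
```

With one multiplier holding two values we get two configurations, which is why two agents are enough here. Adding a second, hypothetical multiplier such as "Viewport" with two values would give four configurations.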

Back to our task.

Now here we'll add --browser $(Browser).

Browser is a variable, and so we have wrapped it in $().

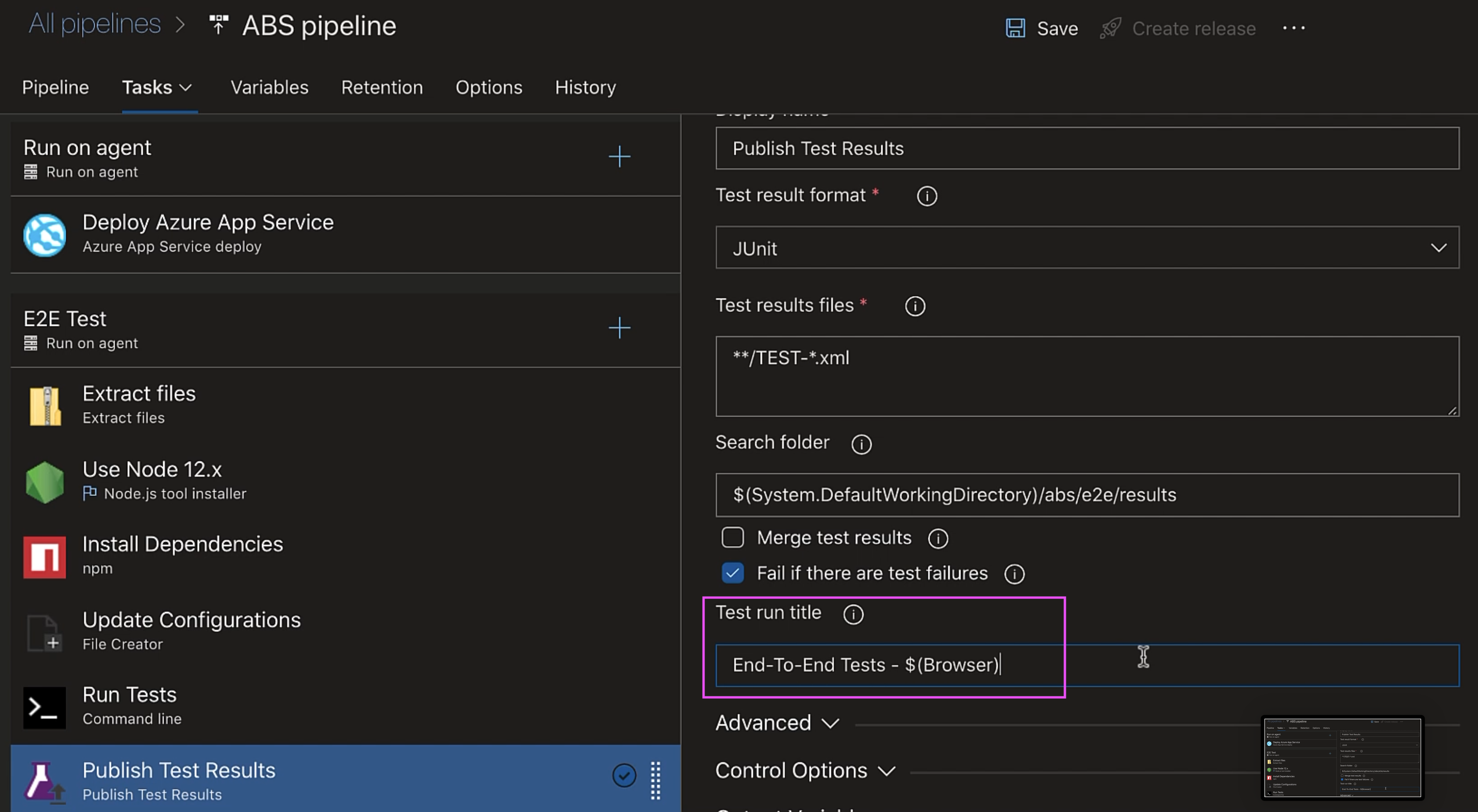
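To illustrate what the $() wrapping does, here is a small sketch of that kind of substitution: each agent gets its own value for "Browser", and the placeholder is replaced before the command runs. This is only an approximation of the pipeline's behavior, written for illustration; leaving an undefined variable untouched is an assumption of this sketch.

```python
import re

# Matches placeholders of the form $(Name).
MACRO = re.compile(r"\$\(([^)]+)\)")


def expand_macros(text: str, variables: dict) -> str:
    """Replace each $(Name) with its value; leave unknown names as written."""
    return MACRO.sub(lambda match: variables.get(match.group(1), match.group(0)), text)


if __name__ == "__main__":
    arguments = "--browser $(Browser)"
    # Each agent sees a different value for the same variable.
    for browser in ["chrome", "firefox"]:
        print(expand_macros(arguments, {"Browser": browser}))
```

The same idea applies anywhere the variable is used in a task, so a single task definition can serve every configuration.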

Also, in our "Publish Test Results" task, we'll update the "Test run title" to include "$(Browser)", and that's it.

Let's create a release.

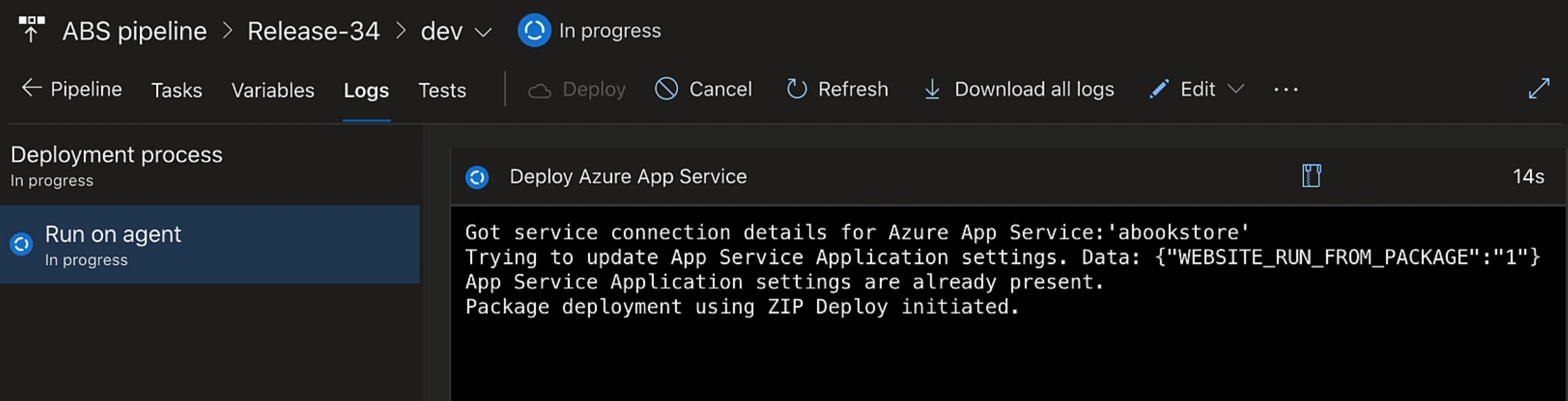

It's currently deploying our app.

Now it will run our test.

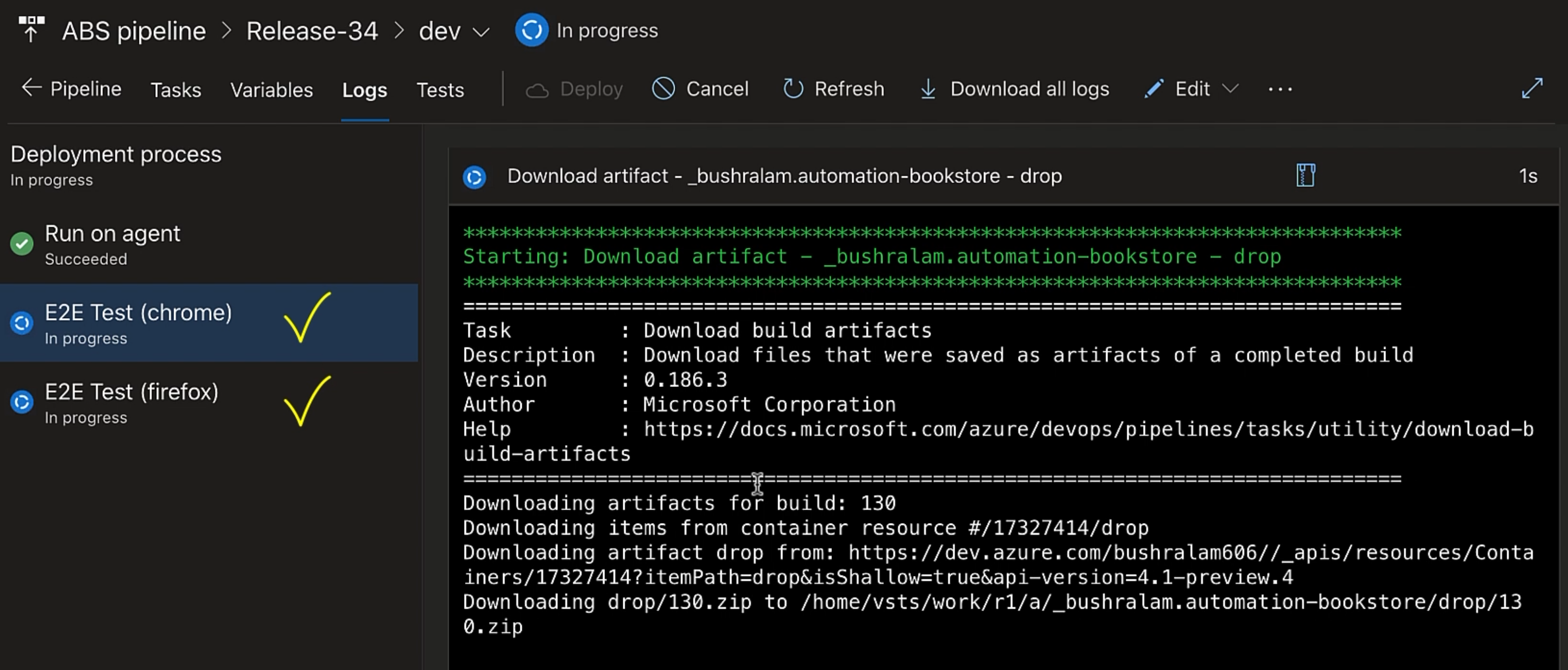

Here, look, we have two agents running in parallel - one for Firefox and the other for Chrome.

So our tests are running in parallel on both browsers.

That is such a time-saver when you have long-running test suites.

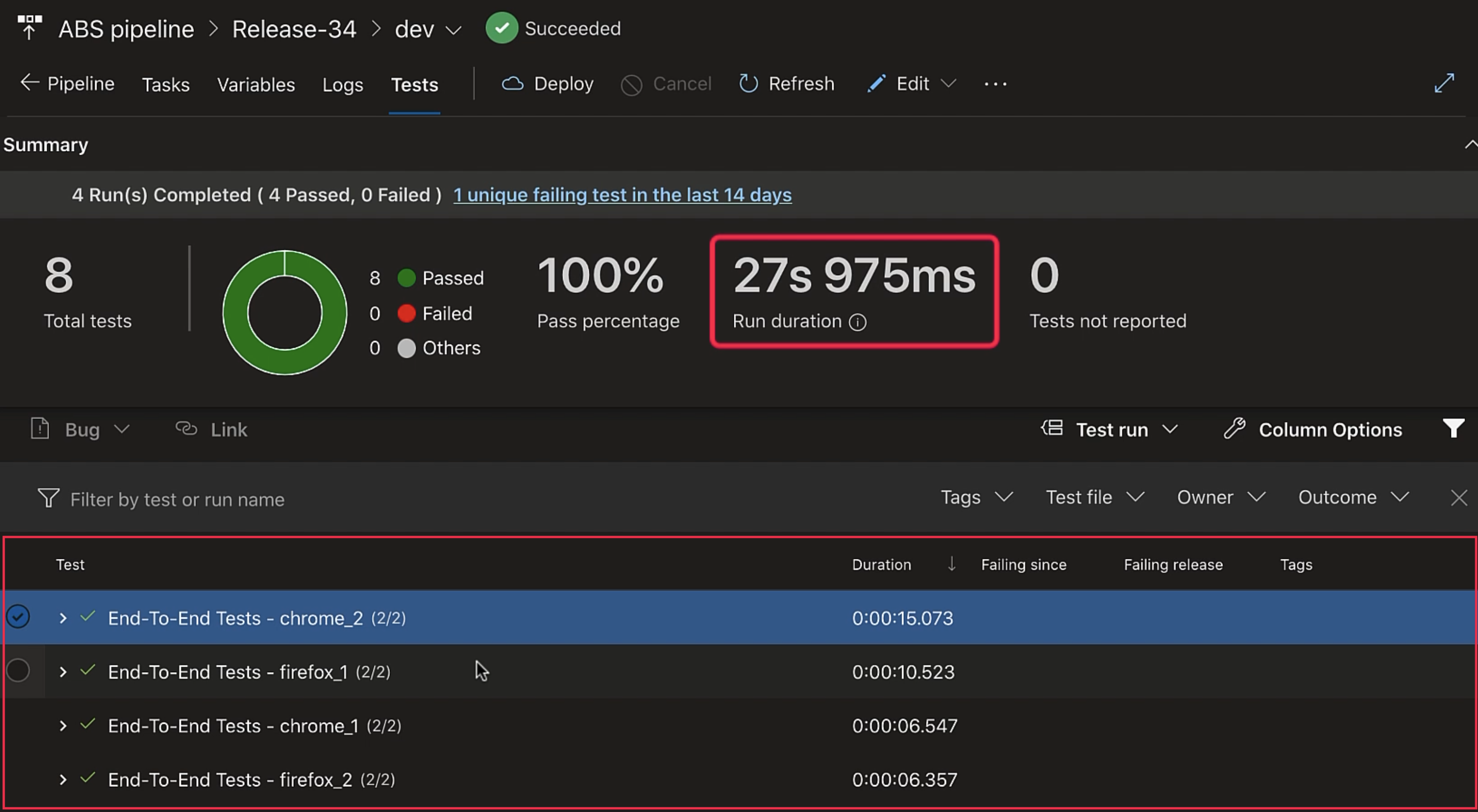

Now here you have four test runs, because we had two test files and each of them ran on both Chrome and Firefox.

The "Run Duration" shown here is a little misleading: it is the sum of all the run durations. Because our tests ran in parallel, the actual elapsed time was less than this.
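Here is a small worked example of why the summed duration overstates the real wait. The durations are made up for illustration, and the sketch assumes each agent runs its two test files one after the other while both agents start at the same moment.

```python
from collections import defaultdict

# (agent, test file, seconds) - hypothetical numbers, not from the real run.
RUNS = [
    ("chrome", "file-1", 40),
    ("chrome", "file-2", 50),
    ("firefox", "file-1", 45),
    ("firefox", "file-2", 55),
]


def summed_duration(runs):
    """What a simple total shows: every run added together."""
    return sum(seconds for _agent, _file, seconds in runs)


def elapsed_duration(runs):
    """Time actually waited: the slowest agent decides the total."""
    per_agent = defaultdict(int)
    for agent, _file, seconds in runs:
        per_agent[agent] += seconds
    return max(per_agent.values(), default=0)


if __name__ == "__main__":
    print(f"summed:  {summed_duration(RUNS)}s")
    print(f"elapsed: {elapsed_duration(RUNS)}s")
```

With these numbers the four runs add up to 190 seconds, but the slower agent finishes after 100 seconds, so that is roughly how long we would actually wait for the tests.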

I hope you enjoyed running your tests in parallel.