Transcripted Summary

In this video, we'll cover an example workflow that a machine learning project goes through.

Hopefully, you'll see that a lot of questions and a lot of testing happens throughout. And if you're already a tester, you could probably jump into an AI team right now and be immediately impactful and valuable.

In this chapter, we're going to be building a model to predict house prices so keep that in mind. For now, let's jump into the steps it takes to start an ML project.

# Steps for Building a Machine Learning Model

Like many projects, we'd want to start by defining the problem.

Some questions that can help here would be things like: what are we trying to solve, who is the ML system for, and especially, does this even need machine learning?

Understanding and defining the why and the problem statement is important before investing more time and energy. Machine learning might be overkill for what you're trying to do.

Once you have the problem statement, you want to come up with which metrics the team will use to measure success and failure.

Sure, solving the problem would be a success, but there is more to quality than functional correctness.

You will want to define initial baselines, consider privacy and security risks, and create a proof of concept, even with a simple model, to help you evaluate and assess risks and dependencies.

Also, questions like, "Do we currently have the proper resources to do this?" are effective in identifying risk.

For example, I'm not an expert in housing prices or the housing market. We'd probably want to have experts on our team so we can solve the problem appropriately.

If I was working on a natural language processing problem, I may want linguists on my team, so we have that expertise.

We also need to have an initial architecture that we want the model to sit on top of.

For example, which databases or tables will we pull the data from?

What does our ELT process or data pipelines look like?

Will our model be behind a REST service or on a mobile device?

How will we monitor and measure the performance of our model?

You don't have to design a perfect architecture, but you should consider questions like these because they will matter as you deploy, observe, and operate your models in production.

With all that initial design out of the way, now we can get to the start of actually building a model.

To train models, we need data, so data collection is naturally the next step.

But you don't just snap your fingers, do your step, and do it all by yourself. Let me see you do it. No.

There's more to it.

Where is the data coming from? Is this something that you have to build, or is the data already being streamed in from somewhere?

Is this different than our lower environments like STAGE or DEV? Are you just going to copy data from production and put it into staging and DEV?

Where are we storing the data? And what are the storage and compute costs associated? Some databases are cheap to store but expensive to query.

Is the data streamed and/or batched in some way? You're probably not querying your data lakes directly so what does that look like?

Eventually, this step gets automated into what we call data pipelines. Understanding the journey data takes through your pipelines and processes is super helpful, especially because you probably want to test that to too.

Once you have data coming in or a big enough dataset, you want to start training. However, there are many different types of models and architectures. Also, the data may not be in a state that is ready to train a model.

So, there is a data preparation step.

What shape or shapes does the data need to be in? If the model requires a triangle but the data comes in as a square, then you need to reshape it.

What are the data types? Should any be converted or normalized to something else?

Are there missing values or errors that we need to fill or solve?

Data is the name of the game so proper analysis, preparation, and testing are crucial. If you train and validate the models with poopy data, you'll get poopy models, and nobody wants that.

Finally, the data is ready to train and validate a model.

Usually, you'll experiment and compare multiple different models to see which is best.

You capture training and validation, logs, and metrics. You visualize these results for further analysis. You tune weights and parameters to get better results and prevent problems like overfitting.

And you use tests, exploratory and automated, to help.

A lot of experimentation, analysis, and validation happens here. It's common to repeat the data collection, the preparation, and training steps over and over and over again until you find the model or models that solve the problem best.

The next step is testing the models, but this is different than the previous train and validation step.

It's misleading because testing happens in all of the steps and is more continuous than happening sequentially, but this does deserve a special shout-out.

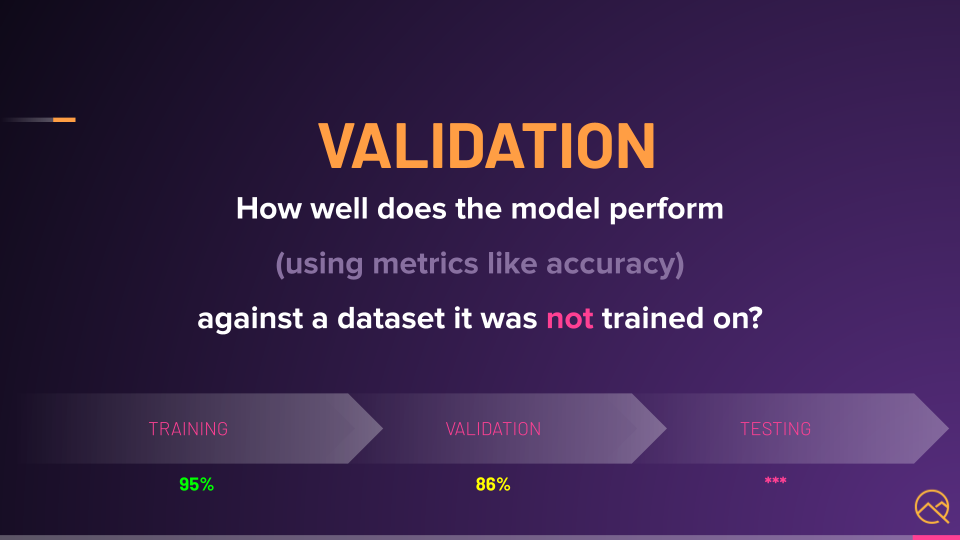

Validation asks the question, "How well does the model perform, using metrics like accuracy, against a dataset it was not trained on?"

For example, how accurate is our model at predicting house prices?

Against the training dataset that the model sees over and over again, it might have a 95% accuracy, which looks pretty good.

But against a validation dataset that the model has not seen before, it might have a much lower accuracy like 86%, suggesting that the model is overfitting and we got to continue tuning and experimenting.

Testing in this sense goes beyond just validating these metrics.

As testers, we'd to consider more than just accuracy.

For example, what behaviors does the model show? Does it demonstrate harmful biases?

Does it meet our privacy and security requirements? Is it performant and reliable?

Can it withstand things like adversarial attacks? And of course, one of our favorite questions: will the customer have a good experience using our model?

You'll see some of these in action in the rest of the course.

Now that we have a model or models that look and feel good, we want to deploy them for use and further testing.

This is where it's good to know how this will be hosted.

- Will this be a REST or GraphQL service? If so, we can use API testing tools and techniques.

- Will this be on a mobile device? If so, then which devices should we test on?

- Will this be in an airplane or self-driving car?

- Or maybe a serverless function?

No matter what, these integrations and flows need to be tested.

And lastly, we have observe and iterate.

Observability and operability matter here.

Things like you want to monitor, you want to measure, learn, alerts, insights, you get the idea. All that good stuff, just like for other applications and services, we'd want for our ML systems too.

This would feed right back into our collect data step for more training or retraining and on and on we go.

My gosh, lots of talking there. Let's get into code so we can do some training and testing on a real model, that'll be better than looking at any more bullet points on a slide.

Quiz

The quiz for this chapter can be found in Chapter 2.2.