Transcripted Summary

Let's now move from general Telemetry into the more specific topic of Observability.

When defining observability, there are some sharp edges that we need to be aware of.

For example, earlier we spoke about the most likely obvious definition of observability as the ability to observe what is going on in your system.

This can be thought to mean that observability is just a rebranding of monitoring, and there are some truths here.

If monitoring is defined as observing, recording, and detecting with instrumentation, then it even has the word observe in the definition.

And of course, a large component of observability is the recording and detecting of useful telemetry to observe the system's behavior.

So what does a world with monitoring before observability look like?

# A World With Only Monitoring

It is common to use this monitoring-focused telemetry to create live dashboards and automated alerts.

A combination of these helps us know when the current system behavior deviates from our expected - either too much or for too long.

It is likely that this type of monitoring has helped you identify possibly connected systems when they arise as well.

We can see that when there's been a rise in response latency, we also see a rise in CPU.

Or, for test automation, when we see that there's been a rise in slower CI pipelines, there's also been a larger number of failing tests.

These types of insights are fantastic and valuable.

They can bring peace of mind to a team that has not had that level of visibility before.

But this also tends to encourage the team to build a single pane of glass, which they can then use to identify the health or degradation of their system.

And with successful monitoring teams, as they build this sense of awareness and confidence with this single pane of glass, they also unfortunately build a level of idleness, because they think that they have a view into their system.

So what happens when these dashboards are all green, but you also are still having angry customers call?

How are you going to address that?

Another example could be from test automation.

How do you feel when all your tests are passing, but users are calling into customer support at an alarming rate after a deploy?

This is the thing about monitoring and test automation.

They can only tell us about scenarios we already know about, and that we have predetermined.

And in a situation when everything appears healthy on our monitors or in our continuous integration pipelines, and yet there's still clearly an issue, we can end up confused and unsure where to start investigating.

# Monitoring vs Observability

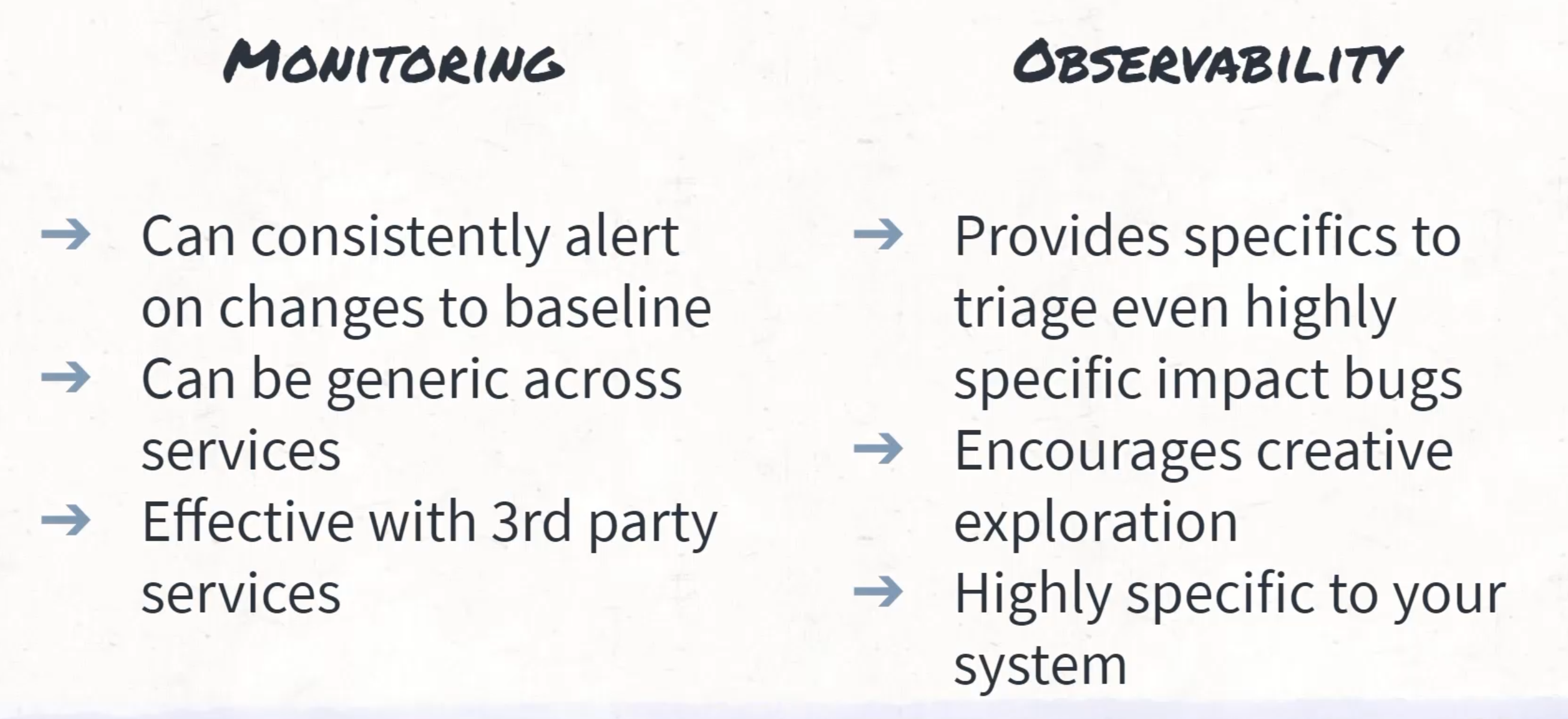

With monitoring, we have a way to track, identify and lock in expected behavior across a wide diversity of systems and we can do this in a fairly standard way.

In addition, monitoring lends itself to automated alerts if any system expectations are breached.

In contrast, observability is what the people creating the system and that are on the receiving side of that alert need to be effective at their jobs.

Observability targets supporting the unknown.

An observable system describes a system that can be poked and prodded in order to highlight and uncover key details about its behavior.

And more importantly, an observable system is one that can answer new, unique, and complex questions without delay.

But so far, this explanation of observability likely sounds either very abstract or very fairytale-like, so let's go back to the beginning and see where this term came from.

Wikipedia defines observability as the "measure of how well internal states of a system can be inferred from knowledge of its external outputs".

This definition actually comes from an engineering discipline called "control theory".

And while it's clear enough in principle, it can be quite difficult to apply when getting started.

I found that there were two key takeaways that helped me get going.

First, this definition uses the phrase "measure of how well."

This description paints a picture of measuring observability as a scale of success, rather than as an on and off measure.

Second, the definition speaks to understanding of the system only from external outputs.

This encourages the use of telemetry, which can feel really odd for developers and test automators, because we spend all of our days using IDE tools like debuggers and reading lines of code to understand what is happening as we develop new code on our local laptops.

With all this easy access to tools like debug and REPLs, we sometimes neglect the tools associated with debugging in more remote environments - tools like telemetry.

So what is a remote environment?

Production is the quintessential remote environment.

In production, we should never have direct control, and we may even have limited visibility of what's going on.

But it's not just production that's remote.

Other environments like dev or continuous integration may be more accessible, but they are still not as easy to manipulate as local environments.

But as we know, these limitations are in place for a good reason.

They are often due to a mix of security and collaboration requirements.

So to wrap up this journey into the definition of observability, I think Sean McCormick's definition from Lightstep really showcases why observability became so popular recently.

And that's because observability is "not just knowing a problem is happening, but knowing why it's happening and how I can go in and fix it".

With our complex systems growing every day, this ability to explore and understand even nuanced changes and issues is something that has given developers a huge amount of confidence and opportunity.

# Why Observability for Test Automators

Why does this matter to you as a test automator?

Well, as a test automator, there's a huge focus on understanding the behavior of our tests to root out that flakiness.

In addition, we need to understand the behavior of our entire suite, and even more widely, our delivery pipelines, to be ruthlessly efficient in our runtime, while still delivering all necessary validations.

Just knowing that the pipeline takes a long time, or just knowing that some tests sometimes fail, is not enough.

We need to generate the type of data that can help us systematically improve our systems.

While this chapter may feel abstract and most likely slightly uncomfortable, rest assured, the remainder of this course will be about applying this theory.

More specifically, we will be implementing some monitoring and some observability techniques so that you can explore the difference on your own time.