Transcript Summary

# Fixture / Test Metadata Overview

TestCafe allows you to specify additional information for tests in the form of key-value metadata.

You can display this information in reports and use it to filter tests. For example, the following command runs only the tests whose metadata has the device property set to mobile and the env property set to production.

```sh
testcafe chrome my-tests --test-meta device=mobile,env=production
```

Here we add the --test-meta parameter and pass it comma-separated key=value pairs: device with its value and env with its value. These pairs are compared against the metadata defined on each test.
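To make the matching rule concrete, here is a minimal plain-JavaScript sketch of how a value like device=mobile,env=production can be split into key-value pairs and checked against a test's metadata. It is not TestCafe's own implementation: the helper names parseMetaOption and matchesMeta are hypothetical, and the sketch assumes a test is selected only when every pair matches, as the command above describes.

```javascript
// Hypothetical helpers that mimic the --test-meta matching rule.
// This is an illustration, not TestCafe's internal code.

// Split "key1=value1,key2=value2" into a plain object.
function parseMetaOption(option) {
  const pairs = {};
  for (const part of option.split(",")) {
    const [key, ...rest] = part.split("=");
    // Re-join in case the value itself contains "=".
    pairs[key.trim()] = rest.join("=").trim();
  }
  return pairs;
}

// A test matches only if every required key has exactly the required value.
function matchesMeta(testMeta, required) {
  return Object.entries(required).every(
    ([key, value]) => testMeta[key] === value
  );
}

const required = parseMetaOption("device=mobile,env=production");
console.log(JSON.stringify(required)); // {"device":"mobile","env":"production"}

console.log(matchesMeta({ device: "mobile", env: "production" }, required)); // true
console.log(matchesMeta({ device: "mobile", env: "staging" }, required)); // false
console.log(matchesMeta({}, required)); // false
```

Extra keys in a test's metadata do not prevent a match in this sketch; only the requested pairs are checked.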

We define the metadata itself with the fixture.meta and test.meta methods.

For example, at the fixture level, we can call .meta with a key such as fixtureID, ticketID, or taskID, followed by its value.

```javascript
fixture `My fixture`
    .meta('fixtureID', 'f-0001');
```

We can also pass an object to .meta to add several entries at once, for example the author's name and the creation date.

```javascript
fixture `My fixture`
    .meta('fixtureID', 'f-0001')
    .meta({ author: 'John', creationDate: '05/03/2018' });
```

Tests take metadata the same way through test.meta, with whatever keys we need, for example testID and severity.
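The fixture example above uses both call forms of meta: a single key with its value, and an object holding several entries. As a rough model of how chained calls add up, here is a plain-JavaScript sketch that collects every call into one metadata object. It is not TestCafe's source; the class MetaCollector and the testID and severity values are hypothetical, and the rule that a later call overwrites an earlier value for the same key is an assumption.

```javascript
// Hypothetical model of chained .meta() calls; not TestCafe's source code.
class MetaCollector {
  constructor() {
    this.data = {}; // accumulated metadata
  }

  // Accepts either meta(key, value) or meta({ key: value, ... }),
  // mirroring the two forms used in the fixture example.
  meta(keyOrObject, value) {
    const entries =
      typeof keyOrObject === "string" ? { [keyOrObject]: value } : keyOrObject;
    // Assumption: a later call overwrites an earlier value for the same key.
    Object.assign(this.data, entries);
    return this; // returning this allows chaining
  }
}

const fixtureMeta = new MetaCollector()
  .meta("fixtureID", "f-0001")
  .meta({ author: "John", creationDate: "05/03/2018" }).data;

console.log(JSON.stringify(fixtureMeta));
// {"fixtureID":"f-0001","author":"John","creationDate":"05/03/2018"}

// Hypothetical test metadata using the keys mentioned above.
const testMeta = new MetaCollector()
  .meta("testID", "t-0001")
  .meta({ severity: "critical" }).data;

console.log(JSON.stringify(testMeta)); // {"testID":"t-0001","severity":"critical"}
```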

After that, we can start writing our tests.

To run only the tests with specific metadata, we add --test-meta to the command and pass the key-value pairs defined with test.meta. Values defined with fixture.meta are matched with the separate --fixture-meta option instead.

# Fixture / Test Metadata Demo

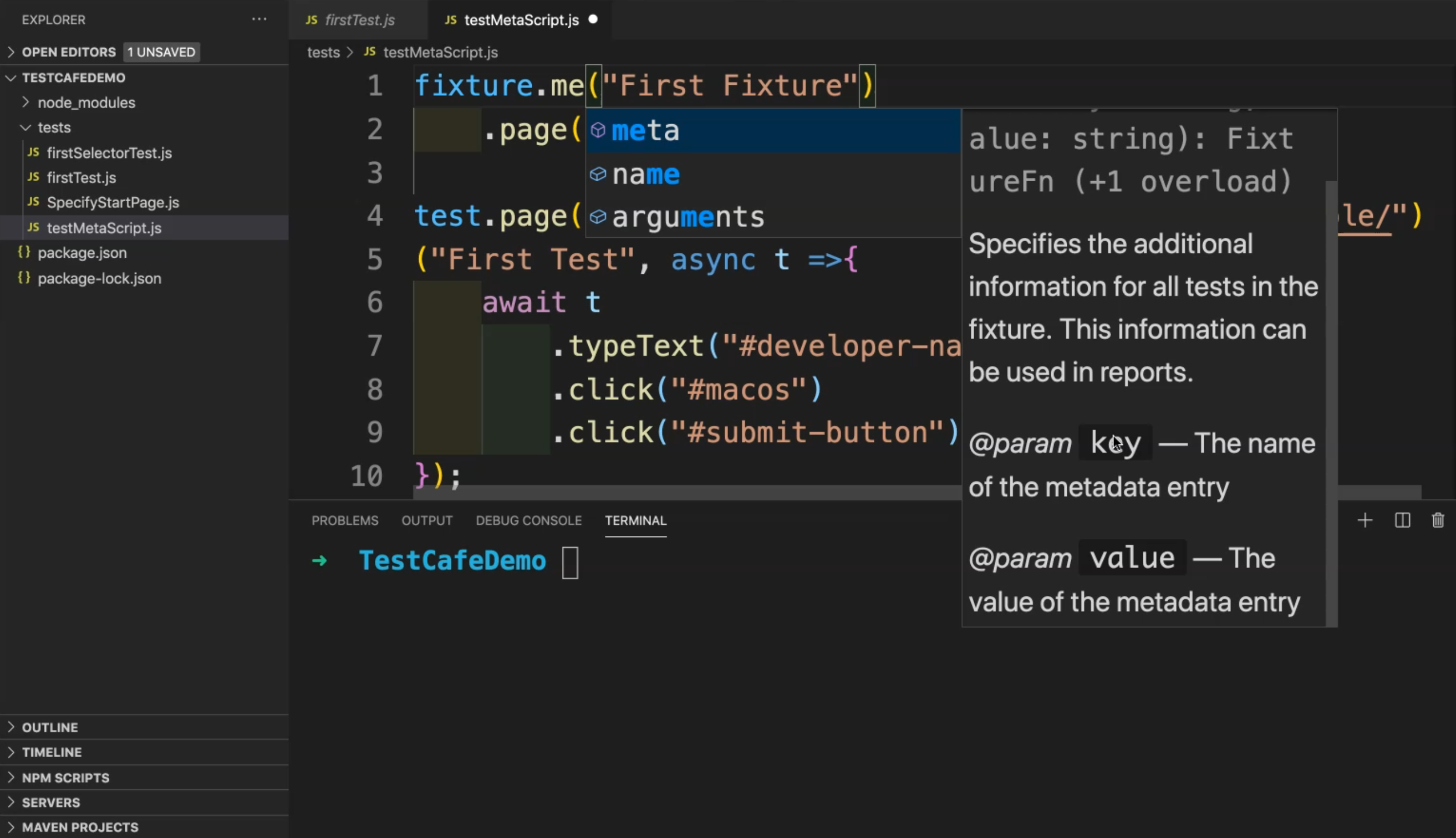

In this demo, we will learn how to use metadata with a fixture and with a test. We start by copying the file firstTest.js and renaming the copy testMetaScript.js.

We have two types of metadata - one with the fixture and one with the test.

Here, on the fixture, we add .meta(), which specifies additional information for all the tests in the fixture. This information can be used in reports.

The method takes a key and a value, and later we can run tests selected by fixture metadata or by test metadata. Here we add a version key to the fixture with the value 1.

```javascript
fixture.meta('version','1')("First Fixture")
    .page("http://devexpress.github.io/testcafe/");
```

We can also add .meta to the test. Here we add an env key with the value production to record that this test targets the production environment. You can store any data that is useful to you in the same way, for example the author, the severity of the test case, or anything related to the test version.

```javascript
fixture.meta('version','1')("First Fixture")
    .page("http://devexpress.github.io/testcafe/");

// Test metadata: this test is tagged env=production
test.meta('env','production')
    .page("https://devexpress.github.io/testcafe/example/")
    ("First Test", async t => {
        await t
            .typeText("#developer-name", "TAU")
            .click("#macos")
            .click("#submit-button");
    });
```

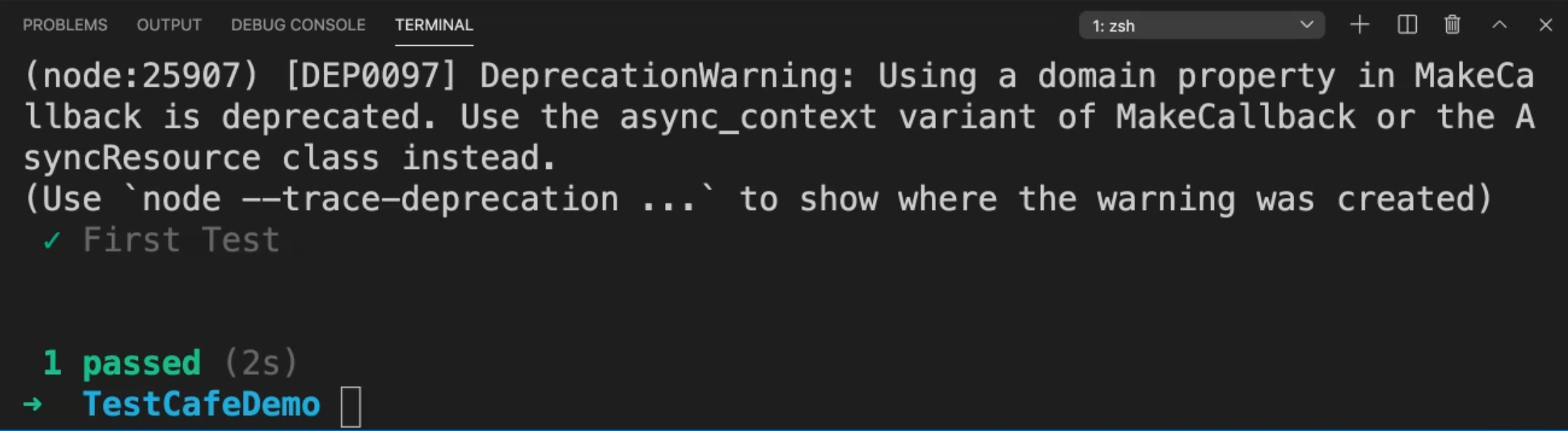
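Since fixture and test metadata are passed to reporters, a custom reporter can print them. The following is a minimal sketch of a TestCafe reporter plugin object, assuming the hook signatures reportTaskStart(startTime, userAgents, testCount), reportFixtureStart(name, path, meta), reportTestDone(name, testRunInfo, meta), and reportTaskDone(endTime, passed, warnings, result), plus the chainable write() and newline() helpers that TestCafe adds to the plugin. The factory name createMetaReporter and the output format are hypothetical.

```javascript
// Hypothetical reporter plugin that prints fixture and test metadata.
// TestCafe calls these hooks and provides this.write() and this.newline().
function createMetaReporter() {
  return {
    async reportTaskStart(startTime, userAgents, testCount) {
      this.write(`Running ${testCount} test(s)`).newline();
    },

    async reportFixtureStart(name, path, meta) {
      // meta holds the values set with fixture.meta()
      this.write(`Fixture: ${name} ${JSON.stringify(meta)}`).newline();
    },

    async reportTestDone(name, testRunInfo, meta) {
      // meta holds the values set with test.meta()
      let status = "passed";
      if (testRunInfo.skipped) status = "skipped";
      else if (testRunInfo.errs.length > 0) status = "failed";
      this.write(`  ${name}: ${status} ${JSON.stringify(meta)}`).newline();
    },

    async reportTaskDone(endTime, passed, warnings, result) {
      this.write(`${passed} passed`).newline();
    },
  };
}
```

For the demo file, this sketch would print lines such as Fixture: First Fixture {"version":"1"} followed by First Test: passed {"env":"production"}. By TestCafe's plugin convention, the factory would be exported from a package named testcafe-reporter-<name> and selected with --reporter <name>.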

Now we can run the test with a metadata filter. To run only the tests whose env metadata is production, we write:

```sh
testcafe chrome tests/testMetaScript.js --test-meta env=production
```

When we run the command, TestCafe opens the local browser, navigates to the test page, and starts the test.

In our case, there is only one test, and it runs successfully.

What happens if we add another test without metadata? We copy the test, paste it below, and remove its .meta call.

```javascript
fixture.meta('version','1')("First Fixture")
    .page("http://devexpress.github.io/testcafe/");

// Tagged with env=production, so --test-meta env=production selects it
test.meta('env','production')
    .page("https://devexpress.github.io/testcafe/example/")
    ("First Test", async t => {
        await t
            .typeText("#developer-name", "TAU")
            .click("#macos")
            .click("#submit-button");
    });

// Same steps, but no metadata
test
    .page("https://devexpress.github.io/testcafe/example/")
    ("Second Test", async t => {
        await t
            .typeText("#developer-name", "TAU")
            .click("#macos")
            .click("#submit-button");
    });
```

The second test runs the same steps; only its name changes. The first test has metadata, and the second test has none.

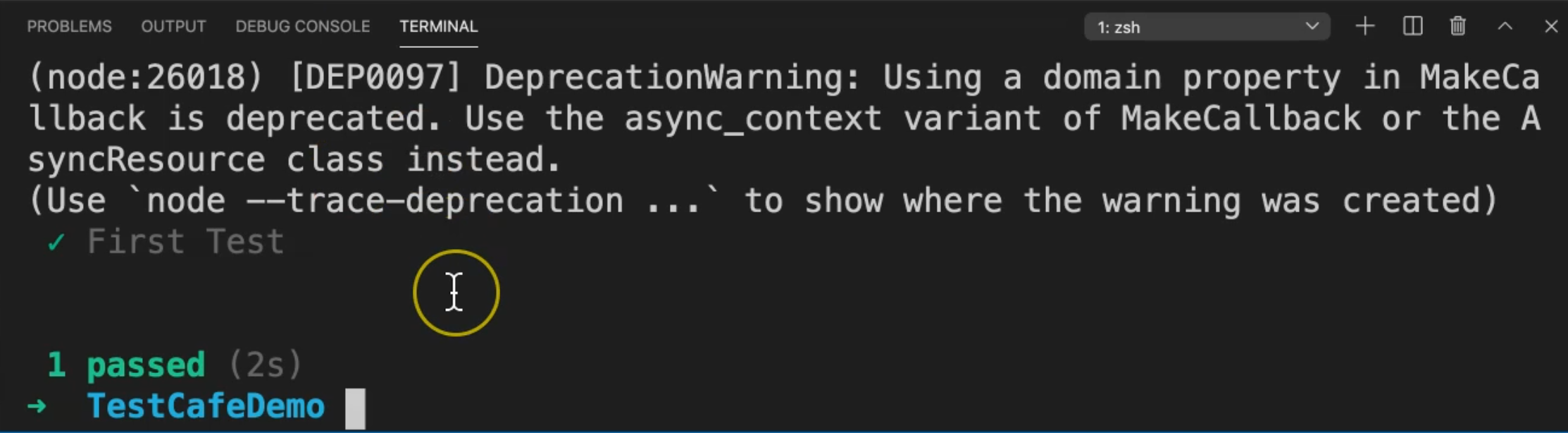

Now we repeat the run. The file contains two tests, but the --test-meta env=production filter should select only the one whose env metadata is production. We run the same command again and check the result.

TestCafe opens the URL, and at the end the report shows that one test passed.

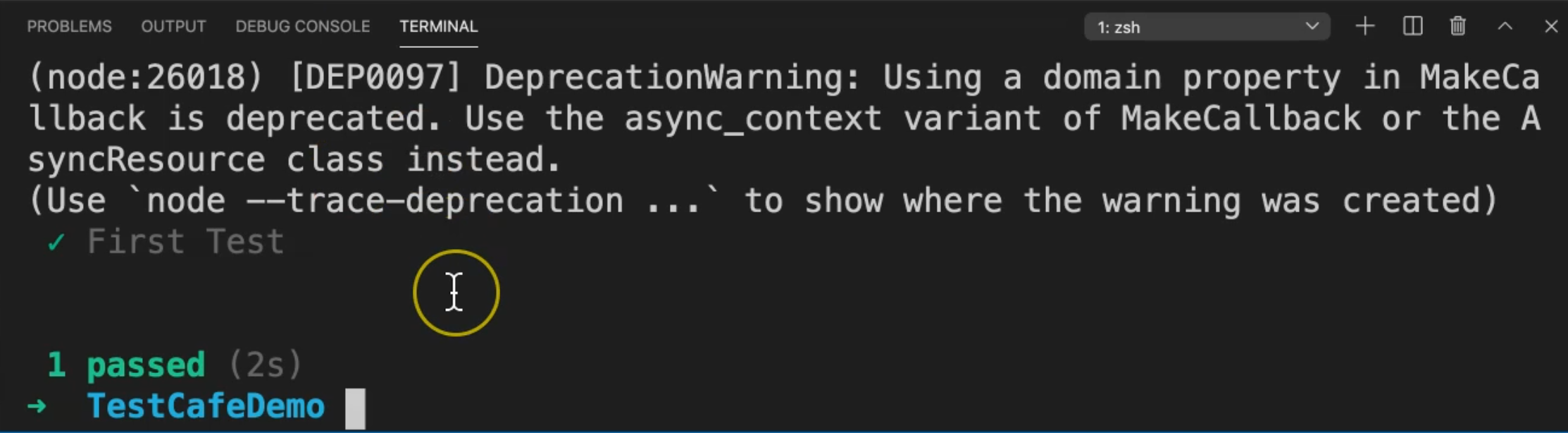

Next, we remove the --test-meta filter and run the file again with testcafe chrome tests/testMetaScript.js to check whether both tests run.

The browser opens the test page again, and this time both tests run: First Test and Second Test.

In short, once tests have metadata, we can filter them by adding --test-meta to the command line and passing the key-value pairs the tests must match.
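Besides the command line, TestCafe's programmatic Runner API can apply a similar selection: runner.filter accepts a callback that receives (testName, fixtureName, fixturePath, testMeta, fixtureMeta) and returns true for the tests to keep. The sketch below assumes that callback shape; it only defines a callback and exercises it on hand-written sample records modeled on this demo, so it runs without TestCafe installed. The function name productionV1Filter and the sample records are hypothetical.

```javascript
// Filter callback in the shape runner.filter() expects:
// (testName, fixtureName, fixturePath, testMeta, fixtureMeta) => boolean
// Hypothetical rule: test env must be "production" and fixture version "1".
function productionV1Filter(testName, fixtureName, fixturePath, testMeta, fixtureMeta) {
  return testMeta.env === "production" && fixtureMeta.version === "1";
}

// Hand-written sample records standing in for the tests TestCafe would pass in.
const samples = [
  { name: "First Test", fixture: "First Fixture", path: "tests/testMetaScript.js",
    testMeta: { env: "production" }, fixtureMeta: { version: "1" } },
  { name: "Second Test", fixture: "First Fixture", path: "tests/testMetaScript.js",
    testMeta: {}, fixtureMeta: { version: "1" } },
];

const selected = samples
  .filter((s) => productionV1Filter(s.name, s.fixture, s.path, s.testMeta, s.fixtureMeta))
  .map((s) => s.name);

console.log(selected); // [ 'First Test' ]

// With TestCafe installed, the callback would be passed to the runner, e.g.:
//   const createTestCafe = require("testcafe");
//   const testcafe = await createTestCafe();
//   const failedCount = await testcafe.createRunner()
//     .src("tests/testMetaScript.js")
//     .browsers("chrome")
//     .filter(productionV1Filter)
//     .run();
//   await testcafe.close();
```

The selection mirrors the filtered run in the demo: only First Test carries env=production, so Second Test is left out.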

Quiz

The quiz for this chapter can be found in 4.10